E Pluribus Unum

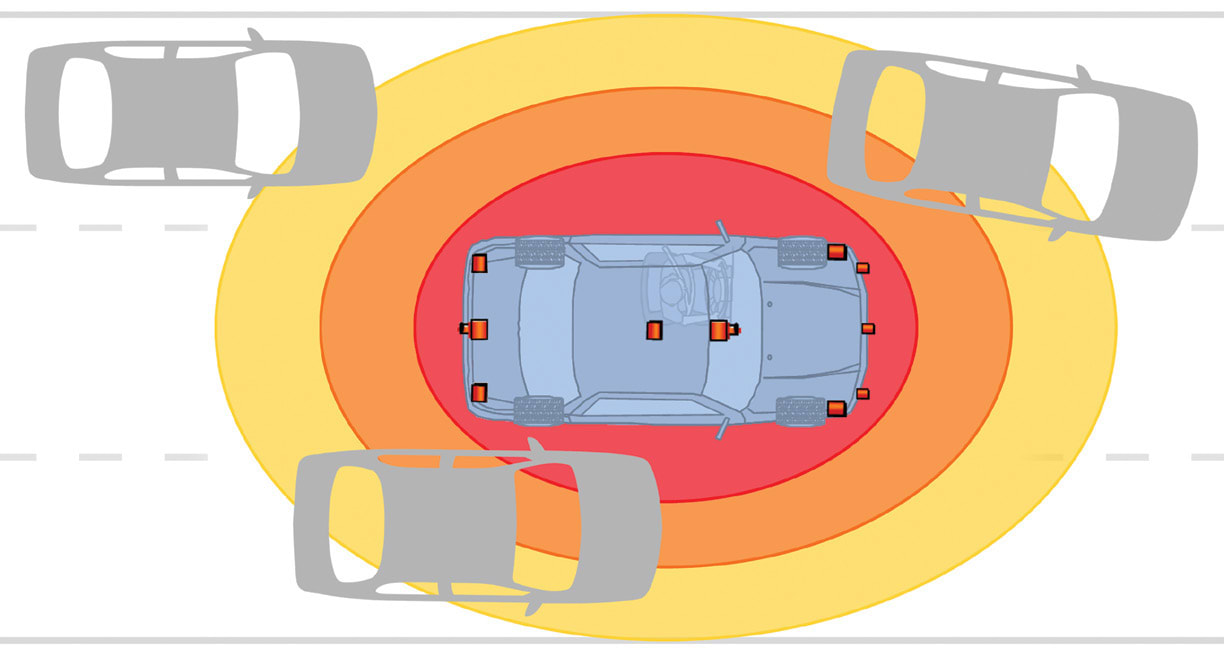

Inputs from many sensors are being combined to give safety systems a true vision of vehicle surroundings, with the resulting sensor fusion becoming a mainstay of autonomous vehicle electronics.

Inputs from radar, cameras, and other sensors that are already improving safety and convenience are being merged into holistic images that will pave the way for autonomous driving. When input from many sensors is combined, systems can more easily determine how to respond to ever-changing environments.

Each sensor type has strengths and weaknesses, so fusing inputs into a single image helps systems avoid false positives and better understand surrounding conditions. Design teams are exploring a range of architectural strategies to make the most of a growing number of sensors. While many system design tradeoffs are being examined, there’s general agreement that multiple inputs need to be combined to get the best results.
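One way to picture how combining inputs suppresses false positives is a simple cross-check in which a radar return is accepted only when a camera detection agrees with it. The Python sketch below is illustrative only: the function name, the 2 m gate, and the sample ranges are assumptions, not values from any supplier quoted here, and production systems use far richer tracking logic.

```python
def confirmed_detections(radar_ranges, camera_ranges, gate_m=2.0):
    """Keep radar detections that a camera detection corroborates.

    Ranges are distances to objects in metres. gate_m is a hypothetical
    tolerance: two detections closer than this are treated as one object.
    """
    confirmed = []
    for r in radar_ranges:
        # Camera detections close enough to this radar return to match it.
        matches = [c for c in camera_ranges if abs(c - r) <= gate_m]
        if matches:
            nearest = min(matches, key=lambda c: abs(c - r))
            # Report the average of the two agreeing measurements.
            confirmed.append((r + nearest) / 2.0)
    return confirmed


# Hypothetical frame: the 80 m radar return has no camera counterpart.
radar_ranges = [12.0, 35.5, 80.0]
camera_ranges = [12.4, 36.1]
result = confirmed_detections(radar_ranges, camera_ranges)
```

In this made-up frame the uncorroborated 80 m return is dropped, while the two objects seen by both sensors survive.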

“As we progress to autonomous driving, we need to do more with sensor fusion,” said John Capp, Director, Global Vehicle Safety at General Motors. “As we fuse more and more information together, everything’s got to get much faster.”

More than a human’s five senses

Advanced vehicles today often employ more than a dozen sensors for safety functions. That number is expected to grow, which will dramatically increase processing requirements.

“For automated driving, you could be fusing half a dozen radars, maybe as many as five cameras and possibly dozens of ultrasonic sensors for short-range sensing,” said Andy Whydell, Product Planning Manager at TRW Automotive. “Analyzing that is going to require very high computing capabilities. Some of the processing done in sensors or in the fusion ECU may come from non-traditional suppliers like Nvidia.”

Mainstream automotive chipmakers are providing powerful devices that are designed specifically for image processing. Automakers are quickly adopting chips with many microprocessor cores and long word lengths.

“The complexity of the latest generation of video processors makes cell-phone CPUs look like toys,” said Martin Duncan, Business Unit Director at STMicroelectronics. “We just launched an eight-core machine. Four cores are 64-bit multi-threaded processors; the others are programmable accelerators. The chip can’t draw much power, just 3 W, because it sits high on the front windscreen.”

Though these systems use powerful chips and high-resolution sensors, cost is an important factor. Many OEMs want to deploy these systems on low-end vehicles often bought by younger buyers more interested in technology than in driving.

“We usually expect to see features start on premium cars, then OEMs ask for huge price cuts,” Duncan said. “That isn’t happening; carmakers want all the features on all the cars. Some are already selling vision systems as a $299 option.”

Going forward, more inputs will be added. Most developers feel that vehicles will eventually talk to each other using dedicated short-range communications (DSRC). These data will help provide the full 360-degree sensing that’s needed for autonomous driving.

“DSRC will be an important sensor input for 360-degree coverage,” said John Scally, Chief Engineer, Active Safety Technologies, Honda North America. “That will be fused with other inputs including GPS data.”

Increased customer expectations are also driving up the complexity of sensing and analysis. Safety systems are continuously being upgraded to spot more hazards. Pedestrian detection, which is becoming more common, is a complex task.

“Detecting a car is fairly easy compared to detecting a pedestrian,” Duncan said. “Pedestrians move unpredictably in many different directions, and they come in all shapes and sizes, all of them smaller than cars.”

Cameras are also spotting traffic signs while handling tasks such as adaptive cruise control and lane-departure warning. It’s not surprising that OEMs feel improved sensors will be needed to handle all these tasks in real time regardless of driving conditions.

“The sensors we have today are OK, but we need better sensors that are more robust in different weather conditions,” Capp said. “As we move toward automated systems where vehicles change lanes by themselves and drive in the city where there are pedestrians and bikes, we’ll need better sensors, better computing processors, and better algorithms.”

Centralizing intelligence

The growth of sensor counts is forcing design teams to examine architectures as they devise strategies that maximize efficiency while minimizing size and cost. When sensor data are fused into a single entity, architectures can be arranged in myriad configurations. As designs evolve, engineers are eliminating standalone boxes by integrating processors in controllers.

“Our first-generation blind-spot monitor had two radars and a separate electronic control unit,” said Scally. “We’ve gotten rid of the ECU, putting it in the left-side radar system and making the right side radar a slave.”

However, there are questions about how well that concept can scale. Some design teams feel that the most effective way to process sensor data is to send all related inputs to a single controller that fuses data and makes decisions. This approach can help as more inputs are linked into a single image.

“When you have forward-looking cameras and forward-looking radar, it’s effective to put the processor in radar,” said Sacha Heinrichs-Bartscher, Chief Engineer for Driver Assist Systems Engineering at TRW Automotive. “When a third sensor is added, the game changes. You usually need to go to a dedicated fusion unit.”

Engineers are divided on how much processing should be done in the sensor module. Filtering and analyzing data before they are sent reduces bandwidth demands and simplifies the task of a central controller that’s receiving multiple inputs.
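The bandwidth argument can be made concrete with a small Python sketch that compares sending every raw sample against sending only samples that pass a threshold in the sensor module. The sample format (two 32-bit floats), the 0.5 amplitude threshold, and the synthetic data are all assumptions chosen for illustration; they do not describe any real sensor interface.

```python
import struct


def raw_payload(samples):
    """Serialize every (range_m, amplitude) sample as two 32-bit floats."""
    return b"".join(struct.pack("<ff", r, a) for r, a in samples)


def filtered_payload(samples, min_amplitude=0.5):
    """Serialize only samples whose amplitude clears the threshold.

    min_amplitude is a hypothetical cutoff standing in for whatever
    filtering a sensor module might apply before transmitting.
    """
    strong = [(r, a) for r, a in samples if a >= min_amplitude]
    return raw_payload(strong)


# Synthetic frame: 100 samples, every tenth one is a strong return.
samples = [(float(i), 0.9 if i % 10 == 0 else 0.1) for i in range(100)]
raw = raw_payload(samples)            # 100 samples x 8 bytes
filtered = filtered_payload(samples)  # 10 samples x 8 bytes
```

With this synthetic frame the filtered message is one tenth the size of the raw one. The price is that the central controller never sees the discarded samples, which is the tradeoff design teams are weighing.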

But central controllers can build better images when they have more information, so many design teams don’t put very powerful microcontrollers in sensor modules. For example, GM’s Cadillac Super Cruise semi-autonomous system relies mainly on a central controller.

“There’s no clear answer to finding the right balance,” Capp said. “In a system like Super Cruise, there will be some level of processing done at the sensor, but it will be fairly minimal. We want to fuse data early in the process.”

Centralizing the intelligence requires a very powerful controller, but that may still be less expensive than putting processors in each of the modules. Distributed processing can get expensive as the number of sensors grows. Maintenance costs also impact this cost analysis.
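A back-of-the-envelope model shows why the balance tips as sensor counts rise. The Python sketch below compares a processor in every module against one central controller plus a cheaper interface in each module. Every cost figure is a made-up unit used only to show the arithmetic, and the function names are hypothetical.

```python
def distributed_cost(n_sensors, per_module_processor_cost):
    """Total cost when every sensor module carries its own processor."""
    return n_sensors * per_module_processor_cost


def centralized_cost(n_sensors, controller_cost, per_module_interface_cost):
    """Total cost of one central controller plus a simple interface per module."""
    return controller_cost + n_sensors * per_module_interface_cost


def break_even_sensor_count(controller_cost, per_module_processor_cost,
                            per_module_interface_cost):
    """Smallest sensor count at which centralizing is strictly cheaper.

    Expects integer cost units. Returns None if a module processor costs
    no more than a module interface, in which case centralizing never wins.
    """
    saving = per_module_processor_cost - per_module_interface_cost
    if saving <= 0:
        return None
    # Centralized wins once n_sensors * saving exceeds controller_cost.
    return controller_cost // saving + 1


# Hypothetical unit costs, not real component prices.
n = break_even_sensor_count(controller_cost=60,
                            per_module_processor_cost=8,
                            per_module_interface_cost=2)
```

With these placeholder figures the two approaches cost the same at 10 sensors and the central controller pulls ahead from 11 onward. Real comparisons would also need to account for wiring, bandwidth, and the maintenance costs mentioned above.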

“If you’ve got 12 sensors, it will be necessary to have centralized control,” said Lars Eggenstein, Project Manager, Vehicle Integrated Systems, at IAV. “If you need to replace a sensor, it’s helpful not to have a lot of intelligence integrated in the module.”

The number of sensors being monitored in vehicles is expected to grow as suppliers push to provide more autonomy. Safety sensors are not the only inputs that will have to be analyzed by vehicle controls. Drivers who rarely touch the steering wheel aren’t likely to closely monitor vehicle conditions.

“With automatic driver assists, much mundane information that a driver would normally check manually, like tire pressure and fluid levels, all need to be converted into computer readable data,” said Ian Chen, Applications, Software & Algorithms Manager for Freescale Sensor Solutions. “This will drive a significant increase in the need for sensors. It is plausible that the number of sensors in a car will exceed 200 in the not too distant future.”
