End Public ‘Shadow’ Driving!

The best way to test and train AI for autonomous vehicles is through proper simulation, systems engineering, and an end-state scenario matrix. A veteran engineer explains why the current AV testing paradigm must change.

Autonomous vehicle developers widely use public “shadow” driving, in which a human in the driver’s seat lets go of the steering wheel and cedes control to the system under test to observe how it performs. The fundamental premise of this process is that the vehicle has learned to manage the events that may occur during its maneuvers, and that the human observer can react fast enough to prevent any harmful outcome.

It is a myth that public shadow driving is the best or only solution to create a fully autonomous vehicle, for several reasons.

To complete such an effort would require each AV maker to accumulate roughly one trillion miles in driving and redriving all the potential scenarios. The estimated cost of such programs is over $300 billion [based on the author’s conservative calculation of 234,000 vehicles operating at an average of 50 mph, every day all day for 10 years, to arrive at one trillion miles].
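The bracketed estimate above can be checked with simple arithmetic. The short Python sketch below reproduces it from the figures given in the text; the 365-day year and the variable names are assumptions added for illustration, and the per-mile figure is only the ratio implied by the stated "over $300 billion", not a cost model from the author.

```python
# Figures taken from the author's bracketed estimate.
VEHICLES = 234_000
AVG_SPEED_MPH = 50
HOURS_PER_DAY = 24      # "every day all day"
DAYS_PER_YEAR = 365     # assumption: leap days ignored
YEARS = 10
PROGRAM_COST_USD = 300e9  # stated as a lower bound ("over $300 billion")


def fleet_miles(vehicles, mph, hours_per_day, days_per_year, years):
    """Total miles driven by a fleet running continuously."""
    return vehicles * mph * hours_per_day * days_per_year * years


total_miles = fleet_miles(
    VEHICLES, AVG_SPEED_MPH, HOURS_PER_DAY, DAYS_PER_YEAR, YEARS
)
# Implied cost per mile if the program cost exactly $300 billion.
implied_cost_per_mile = PROGRAM_COST_USD / total_miles

print(f"Total miles: {total_miles:,}")  # 1,024,920,000,000
print(f"Implied cost per mile: ${implied_cost_per_mile:.2f}")
```

Under these assumptions the fleet covers about 1.02 trillion miles, which matches the "roughly one trillion miles" in the text.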

Other problems with shadow driving involve safety: the running of actual accident scenarios to train the AI, and the SAE Level 3 handover problem. Accident-scenario “training” has the potential to cause thousands of accidents, injuries and casualties once efforts to train and test the AI move from benign scenarios to more complex and dangerous ones. Thousands of accident scenarios will have to be driven multiple times on public streets.

Then there’s the issue of vehicle-control handover. In critical driving scenarios, whether in a system under development using public shadow driving or in an SAE Level 3 vehicle in use by the public, it is impossible to give the driver enough time to regain situational awareness and safely take effective control of the vehicle.

As we’ve seen in the aftermath of recent accidents, public shadow driving can weaken consumers’ support of AVs while bringing negative media coverage, increased regulations, endless litigation, and loss of investor trust. As a result, the industry could lose the opportunity to deliver true autonomous vehicles and thus save tens of thousands of lives and avoid hundreds of thousands of injuries.

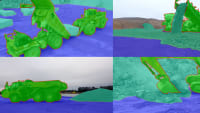

There is a solution, however: replace most public shadow driving, now the primary AV validation process, with complete simulation. Such a solution would be designed through full systems engineering, driven by a requirements definition and design process, augmented by an end-state scenario matrix, and executed to the same level as the most complex state-of-the-art simulations used by the military and the aerospace industry.

A proposal for proper simulation

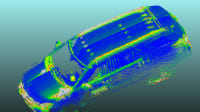

The simulation systems currently in use by the auto industry are inadequate; they are nowhere near aerospace complexity or FAA Level D competency. They do not have proper real-time architectures. The models being used for vehicles, tires and roads are not precise enough, especially in degraded conditions. The AI will appear to have learned properly when in actuality it has not. This is often not discovered until analogous real-world scenarios are experienced, which in the process expose critical timing and execution gaps.

Keep in mind that the benign scenarios being run now will not encounter these problems. It is not until you run complex or time-critical scenarios that the performance envelope of the vehicle, tire or road models is reached. That’s typically when the problems start.

Driver-in-the-loop (DiL) simulators without full motion systems can create a significant level of false confidence. The kind of motion system used in manned simulators would also be used for simulated autonomous driving. Motion systems permit evaluation of motion sickness and of passengers’ feelings of comfort and trust in how the autonomous vehicle is managed.

Beyond adding a proper motion system, simulation issues can be resolved by leveraging aerospace/DoD/FAA simulation technology, practices and test methodologies. Especially useful are those relating to DoD urban war games, which are directly analogous to complex driving scenarios, as well as those employing proper model and real-time fidelity.

Data methodology is key

Using Agile processes, or a bottom-up engineering approach, is inefficient if not debilitating when applied to complex systems. Too much time is wasted by not developing components in parallel and by not defining and building to the most difficult scenarios up front. All of this requires simulation to execute, including the immediate and endless repetition of these scenarios.

If the Agile approach is taken, the time lost will be extreme. Historically, the less complex elements are completed first, leaving the more complex configurations for “later”. Also, flawed design assumptions are unlikely to be exposed until the most complex and difficult scenarios are encountered. That will usually drive a design and execution change that must then be applied to many benign scenarios, with significant rework as a result.

Two popular and very flawed terms, “edge case” and “corner case”, are widely used today to describe accident scenarios. Accident scenarios are like any other scenario, but with outcomes that no one desires. A true edge or corner case is something that should not happen under any circumstances, such as asking a search engine to find an image of a cat and receiving an image of a garbage can. Engineers typically will not search out all the possible accident scenarios because those scenarios are deemed to lie on the edges or corners of the core set. This then gives people an excuse not to do the required due diligence.

The simulation should focus on presenting the AI stack with the same digital representations it might experience, with the same level of items to be discriminated, at the same input rate and with the same level of ambiguity, so that it is possible to determine where the AI stack has trouble making proper decisions. The very hardest of these data sets to achieve have been called “edge” or “corner” cases; however, these are the key cases that define success for the AI stack’s decision process. Defining those cases, along with their desired results, requires a structured, recursive and manageable data methodology.

End-state scenario matrix

Beyond providing the scenario data to effect the systems engineering approach mentioned above, there is the need for all parties, including policy makers, validators, insurance companies and manufacturers, to know what “done” looks like as early as possible. The scenario set would indeed be comprehensive, with support for real-time variations, which will confirm any AI perception errors. The effort to do this needs to be just as significant as the definition of the integration and systemic models for the simulation.

From legitimate geofencing to legitimate SAE Level 4 and 5, this test set would need to be informed by a multitude of data sources and domains. It would need to reflect the highest level of due diligence the global vehicle-development community can muster. It must ensure that the requisite levels of safety are attained and proven. Finally, it would have to be mapped to and synced with the simulation/simulator system noted above.
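One way to picture such a matrix is as the cross product of scenario dimensions, with a desired result attached to every cell. The Python sketch below is purely illustrative and is not the author's design: the dimension names, their values, the `Scenario` type and the placeholder `desired_result` rule are all hypothetical, and a real matrix would be far larger and drawn from many data sources.

```python
from dataclasses import dataclass
from itertools import product

# Hypothetical dimensions, chosen only to illustrate the structure.
DIMENSIONS = {
    "weather": ["clear", "rain", "snow"],
    "road_surface": ["dry", "wet", "ice"],
    "actor_event": ["none", "pedestrian_crossing", "vehicle_cut_in"],
}


@dataclass(frozen=True)
class Scenario:
    weather: str
    road_surface: str
    actor_event: str
    desired_result: str  # what "done" looks like for this cell


def desired_result(weather, road_surface, actor_event):
    """Placeholder rule; real desired results would be defined per scenario."""
    return "maintain_lane" if actor_event == "none" else "avoid_collision"


def build_matrix(dimensions):
    """Enumerate every combination of dimension values as a Scenario."""
    names = list(dimensions)
    matrix = []
    for combo in product(*dimensions.values()):
        values = dict(zip(names, combo))
        matrix.append(Scenario(**values, desired_result=desired_result(**values)))
    return matrix


matrix = build_matrix(DIMENSIONS)
print(len(matrix))  # 3 * 3 * 3 = 27 scenarios
print(matrix[0])
```

Even in this toy form, each added dimension multiplies the number of cells, which suggests why the definition effort has to be planned as seriously as the simulation itself.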

The industry will never save nearly as many lives as it hopes to with Level 4 vehicles, nor will it get close to having a true autonomous vehicle, until the current paradigm of AV testing is changed.
