AI Automates Drone Defense With High Energy Lasers

Lasers enable the U.S. Navy to fight at the speed of light. Armed with artificial intelligence (AI), ship defensive laser systems can make the rapid, accurate targeting assessments necessary for today’s complex and fast-paced operating environment, where drones have become an increasing threat.

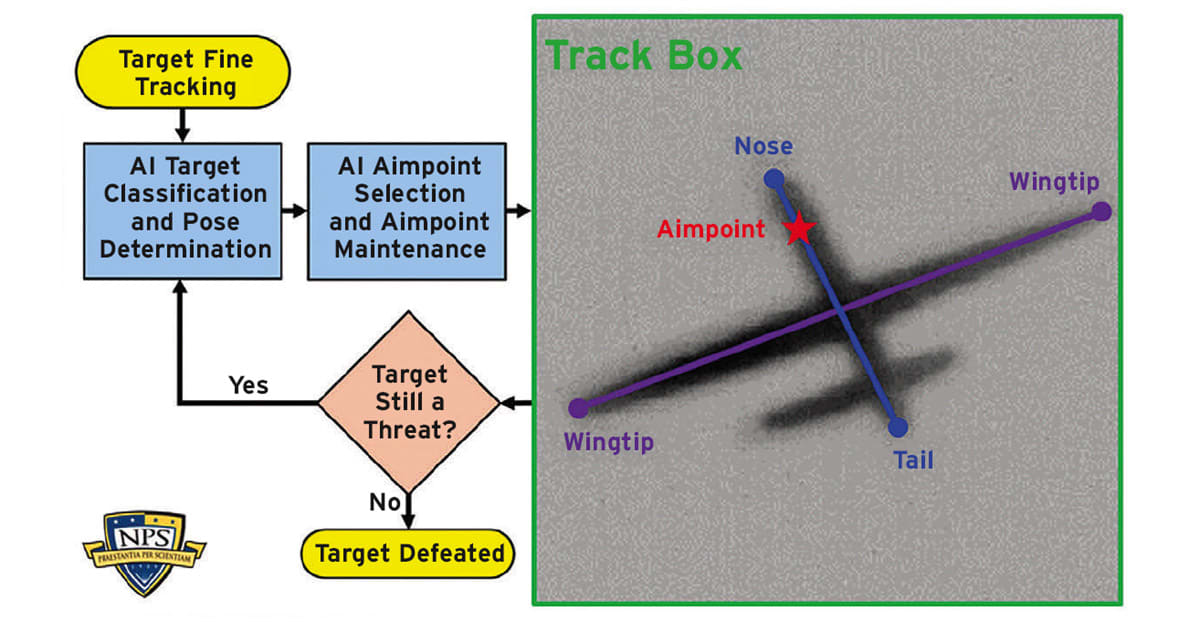

To counter the rapidly mounting threats posed by the proliferation of inexpensive uncrewed autonomous systems (UAS), or drones, Naval Postgraduate School (NPS) researchers and collaborators are applying AI to automate critical parts of the tracking system used by laser weapon systems (LWS). Improving target classification, pose estimation, aimpoint selection and aimpoint maintenance greatly increases the ability of an LWS to assess and neutralize a hostile UAS. Enhanced decision advantage is the goal.

The tracking system of an LWS follows a sequence of demanding steps to successfully engage an adversarial UAS. When conducted by a human operator, the steps can be time consuming, especially when facing numerous drones in a swarm. Add in the challenges of an adversary’s missiles and rockets traveling at hypersonic speeds, and efforts to mount proper defenses become even more complicated and urgent.

Directed energy and AI are both considered DoD Critical Technology Areas. By automating and accelerating the sequence for targeting drones with an AI-enabled LWS, a research team from NPS, Naval Surface Warfare Center Dahlgren Division, Lockheed Martin, Boeing and the Air Force Research Laboratory (AFRL) developed an approach to have the operator on-the-loop overseeing the tracking system instead of in-the-loop manually controlling it.

“Defending against one drone isn’t a problem. But if there are multiple drones, then sending million-dollar interceptor missiles becomes a very expensive tradeoff because the drones are very cheap,” says Distinguished Professor Brij Agrawal, NPS Department of Mechanical and Aerospace Engineering, who leads the NPS team. “The Navy has several LWS being developed and tested. LWS are cheap to fire but expensive to build. But once it’s built, then it can keep on firing, like a few dollars per shot.”

To achieve this level of automation, the researchers generated two datasets that contained thousands of drone images and then applied AI training to the datasets. This produced an AI model that was validated in the laboratory and then transferred to Dahlgren for field testing with its LWS tracking system.

Funded by the Joint Directed Energy Transition Office (DE-JTO) and the Office of Naval Research (ONR), this research addresses advanced AI and directed energy technology applications cited in the CNO NAVPLAN.

Engaging Drones with Laser Weapon Systems

During a typical engagement with a hostile drone, radar makes the initial detection and then the contact information is fed over to the LWS. The operator of the LWS uses its infrared sensor, which has a wide field of view, to start tracking the drone. Next, the high magnification and narrow field of view of its high energy laser (HEL) telescope continues the tracking as its fast-steering mirrors maintain the lock on the drone.
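The coarse-to-fine handoff described above can be sketched as a simple decision on pointing error. This is only an illustration: the stage names and the field-of-view values are hypothetical placeholders, not figures from any fielded LWS.

```python
def tracking_stage(pointing_error_deg, wide_fov_deg=10.0, narrow_fov_deg=0.2):
    """Pick which sensor can hold the target, given the angular pointing error.

    wide_fov_deg stands in for the infrared sensor's wide field of view and
    narrow_fov_deg for the HEL telescope's narrow one. Both defaults are
    assumed values chosen for illustration only.
    """
    error = abs(pointing_error_deg)
    if error <= narrow_fov_deg / 2:
        # Target is inside the telescope's view: fast-steering mirrors hold the lock.
        return "fine"
    if error <= wide_fov_deg / 2:
        # Target is visible only to the wide-field infrared sensor.
        return "coarse"
    # Target is outside both fields of view: rely on the radar contact.
    return "cue"


for error in (20.0, 3.0, 0.05):
    print(error, tracking_stage(error))
```

As the pointing error shrinks, the engagement moves from the radar cue to the wide-field sensor and finally to the telescope.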

With a video screen showing the image of the drone in the distance, the operator compares it to a target reference to classify the type of drone and identify its unique aimpoints. Each drone type has different characteristics, and its aimpoints are the locations where that particular drone is most vulnerable to incoming laser fire.

Along with the drone type and aimpoint determinations, the operator must identify the drone’s pose, or relative orientation to the LWS, necessary for locating its aimpoints. The operator looks at the drone’s image on the screen to determine where to point the LWS and then fires the laser beam.

Long distances and atmospheric conditions between the LWS and the drone can adversely affect the image quality, making all these identifications more challenging and time consuming to conduct.

After all these preparations, the operator cannot simply move a computerized crosshair across the screen onto an aimpoint and press the fire button as if it were a kinetic weapon system, like an anti-aircraft gun or interceptor missile.

Though lasers move at the speed of light, they don’t instantaneously destroy a drone the way lasers are depicted in sci-fi movies. The more powerful the laser, the more energy it delivers in a given time. To heat a drone enough to cause catastrophic damage, the laser must be firing the entire time.

But there’s a catch. The laser beam must be continually held at the same spot.

If the drone continuously moves, then the laser beam will wander along its surface if not continuously re-aimed. In this case, the laser’s energy will be distributed across a large area instead of concentrated at a single point. This process of continuously firing the laser beam at one spot is called aimpoint maintenance.
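A rough back-of-the-envelope sketch shows why a wandering beam matters. It assumes a deliberately simplified model: a uniform circular spot, no atmospheric loss, no heat conduction, and wander along a straight line. All numbers are hypothetical and chosen only to illustrate the effect.

```python
import math


def average_fluence_j_per_cm2(power_w, dwell_s, spot_diameter_cm, wander_cm=0.0):
    """Average energy per unit area deposited over the heated region.

    The heated region is the spot (a disc) plus the strip it sweeps if the
    beam wanders wander_cm along the surface during the dwell time.
    """
    radius = spot_diameter_cm / 2.0
    heated_area = math.pi * radius**2 + spot_diameter_cm * wander_cm
    return power_w * dwell_s / heated_area


# Hypothetical example values: 1 kW beam, 5 s dwell, 2 cm spot.
held = average_fluence_j_per_cm2(1000.0, 5.0, 2.0)
wandering = average_fluence_j_per_cm2(1000.0, 5.0, 2.0, wander_cm=20.0)
print(f"held on one spot: {held:.0f} J/cm^2")
print(f"wandering 20 cm:  {wandering:.0f} J/cm^2")
```

With the same power and firing time, the wandering beam spreads its energy over a much larger area, so the energy delivered per unit area drops sharply.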

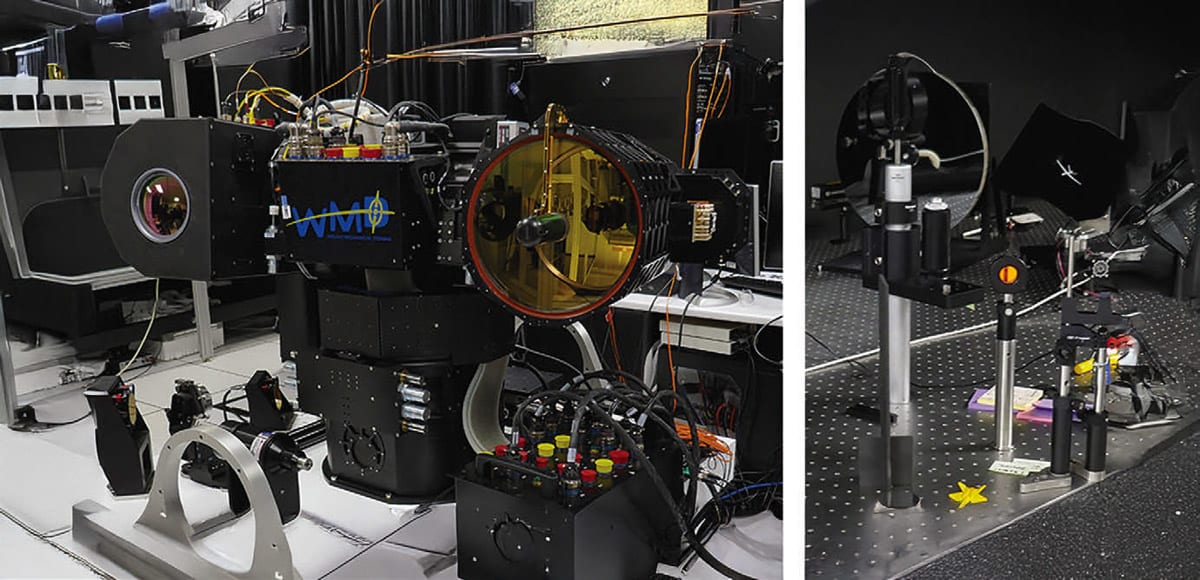

In 2016, construction of the High Energy Laser Beam Control Research Testbed (HBCRT) was completed by the NPS research team. The HBCRT was designed to replicate the functions of an LWS found aboard a ship, such as the 30-kilowatt, XN-1 Laser Weapon System operated on USS Ponce (LPD 15) from 2014 to 2017.

Now the HBCRT is also being used to create catalogs of drone images to make real-world datasets for AI training.

Built by Boeing, the HBCRT has a 30 cm diameter, fine-tracking, HEL telescope and a coarse-tracking, mid-wavelength infrared (MWIR) sensor. The pair is called the beam director when coupled together on a large gimbal that swivels them in unison up-and-down and side-to-side.

“The MWIR is thermal,” says Research Associate Professor Jae Jun Kim, NPS Department of Mechanical and Aerospace Engineering, who specializes in optical beam control. “It looks at the mid-wavelength infrared signal of light, which is related to the heat signature of the target. It has a wide field of view. The gimbal moves to lock onto the target. Then the target is seen through the telescope, which has very small field of view.”

A 1-kilowatt laser beam (roughly a million times more powerful than a classroom laser pointer) can fire from the telescope. When the laser is used, the beam is generated by a separate external unit and directed into the telescope, which then projects it onto the target. However, the laser isn’t required for the initial development of this research, which allows the work to be easily conducted inside a laboratory.

With a short-wavelength infrared (SWIR) tracking camera, the telescope can record images of a drone that is miles away. The research needs that distant view, but directly replicating it in a small laboratory is impossible. To resolve this dilemma, researchers mounted 3D-printed, titanium miniature models of drones fabricated by AFRL into a range-in-a-box (RIAB).

Constructed on an optical bench, the RIAB accurately replicates a drone flying miles away from the telescope by using a large parabolic mirror and other optical components. This research used a miniature model of a Reaper drone. When a SWIR image is taken of the drone model by the telescope, it appears to the telescope as if it were seeing an actual full-sized Reaper drone.
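One way to reason about why a miniature can stand in for a distant aircraft is angular size. The sketch below assumes the parabolic mirror acts as a collimator, so a model of size w placed at its focal length f subtends the same angle as a real object of size W at range R when w / f = W / R. The article does not give the RIAB's optical parameters, so the focal length, model size and wingspan below are hypothetical values for illustration.

```python
def equivalent_range_m(real_size_m, model_size_m, focal_length_m):
    """Range at which the real object subtends the same angle as the model.

    Small-angle approximation: model_size / focal_length = real_size / range.
    """
    return real_size_m * focal_length_m / model_size_m


# Assumed values: roughly 20 m wingspan, 2 cm model, 2.5 m mirror focal length.
range_m = equivalent_range_m(real_size_m=20.0, model_size_m=0.02, focal_length_m=2.5)
print(f"model appears as a full-sized drone about {range_m / 1000:.1f} km away")
```

Under these assumptions, shrinking the model or lengthening the focal length pushes the simulated drone farther away.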

The drone model is attached to a gimbal with motors that can change its pose along the three rotational flight axes of roll (x), pitch (y) and yaw (z). This allows the telescope to observe real-time changes in the direction that the drone model faces.

Simply put, pose is the orientation of the drone that the telescope “sees” in its direct line of sight. Is the drone heading straight-on or flying away, diving or climbing, banking or cruising straight and level, or moving in some other way?

By measuring the angles about the x-, y- and z-axes for a drone model in a specific orientation, the pose of the drone can be precisely defined and recorded.
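As a minimal sketch of how three such angles pin down a pose, the code below builds a rotation matrix from roll, pitch and yaw and uses it to rotate a point on the drone body. The yaw-pitch-roll composition order (a common aerospace convention) and the example aimpoint coordinates are assumptions; the article does not state which convention or aimpoints the researchers use.

```python
import math


def rotation_matrix(roll_deg, pitch_deg, yaw_deg):
    """Rotation matrix R = Rz(yaw) * Ry(pitch) * Rx(roll), angles in degrees."""
    r, p, y = (math.radians(a) for a in (roll_deg, pitch_deg, yaw_deg))
    cr, sr = math.cos(r), math.sin(r)
    cp, sp = math.cos(p), math.sin(p)
    cy, sy = math.cos(y), math.sin(y)
    return [
        [cy * cp, cy * sp * sr - sy * cr, cy * sp * cr + sy * sr],
        [sy * cp, sy * sp * sr + cy * cr, sy * sp * cr - cy * sr],
        [-sp, cp * sr, cp * cr],
    ]


def rotate(matrix, point):
    """Apply a 3x3 rotation matrix to a 3-element point."""
    return [sum(m * c for m, c in zip(row, point)) for row in matrix]


# Hypothetical aimpoint 1 m ahead of the body origin along the roll (x) axis.
aimpoint_body = [1.0, 0.0, 0.0]
pose = rotation_matrix(roll_deg=0.0, pitch_deg=0.0, yaw_deg=90.0)
aimpoint_rotated = rotate(pose, aimpoint_body)
print([round(v, 6) for v in aimpoint_rotated])
```

Once the pose is known, the same rotation can carry any body-fixed aimpoint into the orientation the telescope currently sees.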

This article was written by Dan Linehan for the Naval Postgraduate School. It has been edited. To learn more about the AI models and data sets used in this research, see the full article published by the Naval Postgraduate School.
