Some Advances in Digital-Image Forensics

Image data are analyzed for clues to source cameras, authenticity, and integrity.

A program of research in the forensic analysis of digital images has yielded several proposed techniques for automated image-data processing to answer questions concerning the source, authenticity, and integrity of a given image or set of images. The need for such techniques arises because the ease with which digital images can be created and altered without leaving obvious traces can give rise to doubts about their credibility, especially when they are used as legal evidence. Like other proposed techniques of image forensics, the techniques reported here are subject to limitations. Because none of the techniques by itself offers a definitive solution to the digital-image-verification problem, the research continues in an effort to propose new techniques and combine them with existing techniques to obtain more reliable decisions.

The techniques now proposed can be broadly categorized as addressing three primary concerns: (1) identification of source cameras, (2) detection of synthetic images, and (3) detection of images that have been forged or altered. The techniques and the research pertinent thereto are summarized as follows:

Identifying Source-Camera Models via Image Features

One approach to identification of the camera model that is the source of a given image or set of images is inspired by the success of universal steganalysis techniques. This approach involves analysis of a total of 34 image features to identify certain combinations of features characteristic of specific camera models. The features include color features, image-quality metrics, and wavelet-coefficient statistics. These features are used to construct multi-class classification algorithms. Experiments on several different sets of digital cameras of various models (including cameras in cellular telephones) resulted in identification accuracies ranging from 83 to 97 percent.
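The multi-class classification step can be illustrated with a minimal sketch. The brief does not name the classifier that was used, so a nearest-centroid rule stands in here purely as an assumption. The three-element feature vectors, their values, and the labels `model_A` and `model_B` are invented for illustration; the actual work used 34 features.

```python
from math import dist
from statistics import fmean

def fit_centroids(samples):
    """samples: dict mapping a camera-model label to a list of feature vectors."""
    return {
        label: [fmean(column) for column in zip(*vectors)]
        for label, vectors in samples.items()
    }

def predict(centroids, features):
    """Assign the label whose centroid is closest in Euclidean distance."""
    return min(centroids, key=lambda label: dist(centroids[label], features))

# Toy training data (hypothetical values, 3 features instead of 34).
training = {
    "model_A": [[0.10, 0.80, 0.30], [0.12, 0.79, 0.33]],
    "model_B": [[0.55, 0.20, 0.70], [0.50, 0.25, 0.68]],
}
centroids = fit_centroids(training)
print(predict(centroids, [0.11, 0.77, 0.31]))  # model_A
```

A production classifier would normalize the features and would likely use a stronger learner; the sketch shows only the general shape of the train-then-label workflow.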

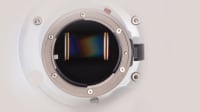

Identifying Source-Camera Models via Artifacts of Color-Filter Arrays and Artifacts of Demosaicking

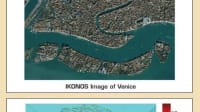

Typically, a digital camera includes a color-filter array (CFA) and subjects its image data to preprocessing that includes demosaicking, which is essentially a form of interpolation that introduces a specific type of interdependency (correlation) among the color values of pixels. The choice of CFA and the specifics of the demosaicking algorithm are the sources of some of the most pronounced differences among digital-camera models. The interdependencies can be extracted from images to enable identification of specific demosaicking algorithms and, thus, of the specific camera models that are the sources of the images. The extraction process involves an algorithm that processes pixel values to estimate filter coefficients and periodicity measures, which then serve as features in the construction of classification algorithms that discriminate among source-camera models. In experiments on sets of four and five camera models, this approach correctly identified the source of an image in 86 and 76 percent of the cases considered, respectively.
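A toy example may help show why demosaicking leaves a periodic trace. The sketch below assumes a Bayer-style layout in which green is sensed wherever row plus column is even, and it assumes plain bilinear interpolation; real cameras use proprietary and more elaborate algorithms. The function names are hypothetical. Interpolated pixels turn out to be exactly predictable from their four neighbors, whereas sensed pixels are not, and this alternation is the kind of periodicity that can serve as a feature.

```python
import random

def bilinear_green(raw):
    """Fill non-green sites with the mean of their 4 green neighbors.
    Assumes green is sensed where (row + col) is even; borders are left as-is."""
    h, w = len(raw), len(raw[0])
    out = [row[:] for row in raw]
    for r in range(1, h - 1):
        for c in range(1, w - 1):
            if (r + c) % 2 == 1:  # not a green site, so interpolate
                out[r][c] = (raw[r-1][c] + raw[r+1][c] + raw[r][c-1] + raw[r][c+1]) / 4
    return out

def mean_residual(img, parity):
    """Mean absolute error of a 4-neighbor predictor over interior sites
    whose (row + col) % 2 equals `parity`."""
    h, w = len(img), len(img[0])
    errors = []
    for r in range(1, h - 1):
        for c in range(1, w - 1):
            if (r + c) % 2 == parity:
                pred = (img[r-1][c] + img[r+1][c] + img[r][c-1] + img[r][c+1]) / 4
                errors.append(abs(img[r][c] - pred))
    return sum(errors) / len(errors)

rng = random.Random(0)
raw = [[rng.randint(0, 255) for _ in range(8)] for _ in range(8)]  # toy mosaic
green = bilinear_green(raw)
print(mean_residual(green, 1))  # interpolated sites: 0.0
print(mean_residual(green, 0))  # sensed sites: clearly nonzero
```

In practice the interpolation weights are unknown and must be estimated from the image, which is where the filter-coefficient estimation described above comes in.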

One technique for identifying the individual camera that is the source of a given image incorporates both (1) the above-mentioned technique for CFA/demosaicking-based identification of the source-camera model and (2) a previously reported technique for detecting pixel-nonuniformity noise, which is a pattern noise, unique to each camera, arising from differences among the photosensitivities of individual pixels. The pixel-nonuniformity-based technique sometimes yields false-positive identifications; the CFA/demosaicking-based technique helps to reduce the false-positive rate.
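The pattern-noise idea can be sketched as a correlation test. The simulation below is an assumption-laden toy: each camera's fixed pattern is modeled as additive Gaussian values over 1,000 pixels, noise residuals are taken as already extracted (the denoising step is not shown), and the reference pattern is simply the average of 20 residuals. None of these choices comes from the brief.

```python
import random
from math import sqrt
from statistics import fmean

def correlation(a, b):
    """Pearson correlation of two equal-length sequences."""
    ma, mb = fmean(a), fmean(b)
    num = sum((x - ma) * (y - mb) for x, y in zip(a, b))
    den = sqrt(sum((x - ma) ** 2 for x in a) * sum((y - mb) ** 2 for y in b))
    return num / den if den else 0.0

def reference_pattern(residuals):
    """Average many noise residuals so that random noise cancels out."""
    return [fmean(column) for column in zip(*residuals)]

rng = random.Random(1)
N_PIXELS = 1000  # hypothetical sensor size, flattened

def make_camera():
    """Simulated per-pixel fixed pattern, unique to one camera."""
    return [rng.gauss(0, 1) for _ in range(N_PIXELS)]

def shoot(pattern):
    """Simulated noise residual: fixed pattern plus stronger random noise."""
    return [k + rng.gauss(0, 2) for k in pattern]

cam_a, cam_b = make_camera(), make_camera()
ref_a = reference_pattern([shoot(cam_a) for _ in range(20)])
ref_b = reference_pattern([shoot(cam_b) for _ in range(20)])

query = shoot(cam_a)  # residual of an image of unknown origin
print(round(correlation(query, ref_a), 2))  # noticeably above zero
print(round(correlation(query, ref_b), 2))  # near zero
```

A decision threshold on the correlation value controls the false-positive rate, which is the weakness that the CFA/demosaicking check is meant to mitigate.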

Identifying Individual Source Cameras Using Sensor-Dust Patterns

In cameras equipped with removable lenses, dust can accumulate on the image sensors when the lenses are removed. Although the resulting dust specks on images are usually not visually significant, they are sometimes useful for identifying cameras. A proposed technique for detecting dust specks in images involves the use of matched filtering and contour analysis to generate information that, in turn, is used to generate a camera dust reference pattern, a match of which one subsequently seeks in individual images. (It should be noted that the lack of a match is not conclusive, because an image sensor could have been cleaned of dust after generation of the reference pattern.)
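The matched-filtering step can be sketched as a normalized cross-correlation between a small dust template and every window of the image. The 3-by-3 template, the threshold of 0.9, and the noise-free synthetic images below are all assumptions made for illustration, and the contour-analysis step is not shown.

```python
from math import sqrt

# Hypothetical dust template: a small dark blob (negative = darkening).
TEMPLATE = [[0, -1, 0],
            [-1, -2, -1],
            [0, -1, 0]]

def ncc(window, template):
    """Zero-mean normalized cross-correlation of two equal-size patches."""
    w = [v for row in window for v in row]
    t = [v for row in template for v in row]
    mw, mt = sum(w) / len(w), sum(t) / len(t)
    num = sum((a - mw) * (b - mt) for a, b in zip(w, t))
    den = sqrt(sum((a - mw) ** 2 for a in w) * sum((b - mt) ** 2 for b in t))
    return num / den if den else 0.0  # a flat patch carries no evidence

def detect_dust(img, template=TEMPLATE, threshold=0.9):
    """Return (row, col) centers of windows that match the template."""
    k = len(template) // 2
    hits = []
    for r in range(k, len(img) - k):
        for c in range(k, len(img[0]) - k):
            window = [row[c - k:c + k + 1] for row in img[r - k:r + k + 1]]
            if ncc(window, template) >= threshold:
                hits.append((r, c))
    return hits

def make_image(specks, size=12, level=200, depth=5):
    """Synthetic flat image with template-shaped dips at the given centers."""
    img = [[level] * size for _ in range(size)]
    for r0, c0 in specks:
        for dr in (-1, 0, 1):
            for dc in (-1, 0, 1):
                img[r0 + dr][c0 + dc] += depth * TEMPLATE[dr + 1][dc + 1]
    return img

# Reference pattern from one image; query image has a different brightness.
reference = set(detect_dust(make_image([(3, 4), (8, 7)])))
query = set(detect_dust(make_image([(3, 4), (8, 7)], level=120)))
print(sorted(reference))   # [(3, 4), (8, 7)]
print(reference == query)  # True
```

Because the correlation is normalized, the same speck positions are recovered regardless of overall scene brightness, which is what makes a set of positions usable as a reference pattern.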

Identification of Synthetic Images

An approach to identification of synthetic images is based partly on the concept that selected statistical properties of pattern noise in images of real scenes acquired by digital cameras can be expected to differ from the corresponding statistical properties of pattern noise in synthetic images. These statistical properties, along with artifacts of demosaicking and image-quality metrics, are used as features to be processed by a classification algorithm. In tests of this approach on real and synthetic images, synthetic images were identified with an average accuracy of 93 percent.
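As a rough illustration of extracting statistical properties of noise, the sketch below subtracts a 3-by-3 local mean from each pixel and summarizes the residual by its variance and kurtosis. The local-mean filter is a crude stand-in for a proper denoising filter, and the two test images (a noise-free gradient and the same gradient with simulated sensor noise) are deliberately extreme toy cases; real synthetic images are not noise-free.

```python
import random
from statistics import fmean, pvariance

def noise_residual(img):
    """Pixel value minus its 3x3 local mean, for interior pixels only."""
    h, w = len(img), len(img[0])
    res = []
    for r in range(1, h - 1):
        for c in range(1, w - 1):
            local = sum(img[r + dr][c + dc]
                        for dr in (-1, 0, 1) for dc in (-1, 0, 1)) / 9
            res.append(img[r][c] - local)
    return res

def residual_features(img):
    """Return (variance, kurtosis) of the noise residual."""
    res = noise_residual(img)
    var = pvariance(res)
    mean = fmean(res)
    kurt = fmean((x - mean) ** 4 for x in res) / var ** 2 if var > 0 else 0.0
    return var, kurt

rng = random.Random(2)
smooth = [[r + 2 * c for c in range(10)] for r in range(10)]  # toy rendered ramp
noisy = [[v + rng.gauss(0, 1.5) for v in row] for row in smooth]  # toy camera image

print(residual_features(smooth))         # (0.0, 0.0)
print(residual_features(noisy)[0] > 0)   # True
```

Features of this kind would be combined with the demosaicking and image-quality features mentioned above before being passed to a classifier.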

Detection of Forgery or Alteration via Variations in Image Features

In this approach, one designates a set of features that are sensitive to image tampering and determines the ground truth for these features by analysis of original (unaltered) and tampered images. Subsequently, tampering of a given image is detected on the basis of the deviation of that image's measured features from the ground truth.
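One simple way to picture deviation from ground truth is a z-score test, sketched below. This is a simplification: it builds the reference from unaltered images only, whereas the approach described above also analyzes tampered images. The two features, their values, and the threshold of 3 are hypothetical.

```python
from statistics import fmean, pstdev

def fit_reference(feature_rows):
    """Per-feature (mean, standard deviation) over a set of unaltered images."""
    return [(fmean(col), pstdev(col)) for col in zip(*feature_rows)]

def deviation(reference, features):
    """Largest absolute z-score of any feature relative to the reference."""
    return max(
        (abs(x - mu) / sigma for (mu, sigma), x in zip(reference, features) if sigma > 0),
        default=0.0,
    )

def looks_tampered(reference, features, threshold=3.0):
    return deviation(reference, features) > threshold

# Toy feature vectors measured on unaltered images (made-up values).
originals = [[1.0, 10.0], [1.2, 11.0], [0.8, 9.0], [1.0, 10.0]]
reference = fit_reference(originals)

print(looks_tampered(reference, [1.05, 10.2]))  # False
print(looks_tampered(reference, [2.00, 10.1]))  # True
```

A trained two-class classifier would normally replace the fixed threshold, but the underlying idea of measuring distance from typical feature values is the same.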

Detection of Forgery or Alteration via Inconsistencies in Image Features

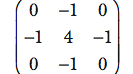

Image tampering often involves local adjustments of sharpness versus blurriness. Hence, the blurriness characteristics in tampered parts are expected to differ from those in non-tampered parts. Therefore, one approach to detection of tampering involves the use of regularity properties of wavelet-transform coefficients to identify local variations in sharpness and blurriness of edges, which variations could be indicative of tampering.
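The link between wavelet coefficients and edge sharpness can be shown on a one-dimensional intensity profile. The sketch below is a simplified stand-in for the regularity analysis, not the authors' method: it uses an undecimated Haar-style detail signal (the difference of adjacent block means) at two scales and takes the ratio of the peak magnitudes. For an ideal step edge the peak does not grow with scale, so the ratio is 1; for a blurred edge the fine-scale response is weaker, so the ratio drops.

```python
def detail(signal, scale):
    """Undecimated Haar-style detail: right block mean minus left block mean."""
    out = []
    for i in range(scale, len(signal) - scale + 1):
        left = sum(signal[i - scale:i]) / scale
        right = sum(signal[i:i + scale]) / scale
        out.append(right - left)
    return out

def sharpness(signal):
    """Ratio of peak detail magnitude at scale 1 to that at scale 2."""
    fine = max(abs(d) for d in detail(signal, 1))
    coarse = max(abs(d) for d in detail(signal, 2))
    return fine / coarse if coarse else 0.0

sharp_edge = [0.0] * 8 + [1.0] * 8
blurred_edge = [0.0] * 6 + [0.25, 0.5, 0.75] + [1.0] * 7

print(sharpness(sharp_edge))    # 1.0
print(sharpness(blurred_edge))  # 0.5
```

Computing such a measure along the edges of an image and looking for regions whose values differ markedly from the rest is one way to flag local variations in blurriness.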

This work was done by Nasir Memon and Husrev T. Sencar of Polytechnic University, Brooklyn, for the Air Force Research Laboratory.

This Brief includes a Technical Support Package (TSP). "Some Advances in Digital-Image Forensics" (reference AFRL-0041) is currently available for download from the TSP library.

Overview

The document is a Final Technical Report detailing research on digital image forensics conducted by Nasir Memon and Husrev T. Sencar from Polytechnic University, covering the period from January 2005 to February 2007. The primary focus of the research is to develop methodologies for verifying the authenticity and integrity of digital images, which is increasingly important in contexts such as legal photographic evidence.

Digital images can be easily created, altered, and manipulated without leaving obvious traces, raising concerns about their credibility. The report categorizes the proposed techniques into three main areas: source camera identification, discrimination of synthetic images, and image forgery detection. These methodologies aim to provide reliable tools for forensic experts and law enforcement to assess the authenticity of digital images.

The report emphasizes the limitations of existing digital watermarking technologies, which often require specific imaging devices and may not be effective against all types of manipulation. In contrast, the forensic tools developed in this research are designed to identify the nature of manipulations and reconstruct the history of image processing operations. This capability is crucial for establishing the veracity of images used as evidence in legal contexts.

The authors discuss various image forensic techniques, including statistical analysis methods that can detect specific processing operations such as resampling, scaling, and brightness adjustments. These techniques can reveal correlations between different parts of an image, helping to identify alterations that may not be perceptible to the naked eye.

The report also highlights the importance of ensuring the credibility of digital images, particularly in legal settings where the authenticity of photographic evidence can significantly impact judicial outcomes. The methodologies developed in this research have implications for various fields, including law enforcement, journalism, and digital media.

In conclusion, the report presents significant advancements in the field of digital image forensics, offering new tools and techniques to verify the authenticity of digital images. The findings underscore the necessity of robust forensic methodologies in an era where digital manipulation is prevalent, ensuring that digital images can be trusted in critical applications.
