The Purpose of Mixed-Effects Models in Test and Evaluation

The simplest version of a mixed model—the random intercept model, where so-called random effects represent group-wide deviations from a grand mean—can account for day-to-day deviations in system performance while still allowing the results to be generalized beyond the few days of observed testing.

Mixed effects models are the standard technique for analyzing data that exhibit some grouping structure. In defense testing, these models are useful because they account for correlations between observations, a feature common in many operational tests.

By design, operational test data are often noisy. Scenarios with real operators conducting realistic missions against a responsive opposing force generate data that reflect realistic combat environments and include operationally important sources of variance. The further one moves from lab experiments, the more uncontrollable circumstances and conditions will influence the numbers being reported.

One strategy for dealing with noisy data is simply to collect more of it. As the sample size n increases, uncertainty bars shrink, and the risk to the program is reduced. But this is an inefficient use of taxpayer dollars and, because test budgets are limited, often infeasible. An alternative is to do more with the available data.
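As a rough guide to how slowly that shrinkage happens, consider the textbook case of $n$ independent observations with a common standard deviation $\sigma$ (an idealization assumed here for illustration, not a description of operational test data). The standard error of the sample mean is

$$
\mathrm{SE}(\bar{y}) = \frac{\sigma}{\sqrt{n}}
$$

so cutting the uncertainty in half requires roughly four times as many observations.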

Mixed effects models, or simply mixed models, are a well-studied statistical technique used regularly across diverse fields of research, including pharmacology, agriculture, image analysis, and biology. They are used in cases where researchers suspect the data contain systematically correlated errors. By properly accounting for these correlations, mixed effects models produce estimates with smaller uncertainties.

A canonical example from agriculture is a comparison of crop yields from different seeds planted in multiple fields. Each field is divided into some number of plots, one type of seed is planted in each plot, and at the end of the test, the yield of each plot is measured. Each field has unique characteristics, including exposure to sun, irrigation runoff, and which plants are adjacent to the field. Since these characteristics affect crop yield, the attributes of each field introduce noise into the data.

Because the goal is to determine how different types of seeds will perform in the future, the unique properties of the fields used for the test are not relevant. By using a mixed effects model, the field-level variation can be estimated separately from random plot-to-plot variation. This makes it easier to make comparisons, narrows the confidence bounds around estimates of the average yield of each seed type, and reduces the chance of confusing random variation due to a particularly good or poor field with changes in yield due to seed type.
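One common way to write a random intercept model for the seed example is sketched below. The notation is assumed here for illustration and is not taken from the source.

$$
y_{ij} = \mu + \tau_{s(i,j)} + b_j + \varepsilon_{ij}, \qquad b_j \sim N(0, \sigma_b^2), \qquad \varepsilon_{ij} \sim N(0, \sigma^2)
$$

Here $y_{ij}$ is the yield of plot $i$ in field $j$, $\mu$ is the grand mean, $\tau_{s(i,j)}$ is the fixed effect of the seed type planted in that plot, $b_j$ is the random deviation shared by every plot in field $j$, and $\varepsilon_{ij}$ is plot-to-plot noise. Because two plots in the same field share $b_j$, their yields are correlated, with intraclass correlation

$$
\rho = \frac{\sigma_b^2}{\sigma_b^2 + \sigma^2}
$$

The difference between two plots in the same field, $y_{ij} - y_{i'j} = \tau_{s(i,j)} - \tau_{s(i',j)} + \varepsilon_{ij} - \varepsilon_{i'j}$, does not involve $b_j$ at all, which is why separating out the field-level variation can sharpen comparisons between seed types.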

While Defense Test and Evaluation (T&E) is different from agriculture, data attributes can be surprisingly similar. Consider an evaluation of a radar-equipped unmanned aerial vehicle (UAV) operating in a maritime environment. The radar system provides maritime surveillance and intelligence by detecting targets at sea and reporting their locations to a host platform. The goal of the test is to compare the radar system’s target location error (TLE, the straight-line distance between the true location of a target and the location provided by the radar system) against a requirement of 250 meters.

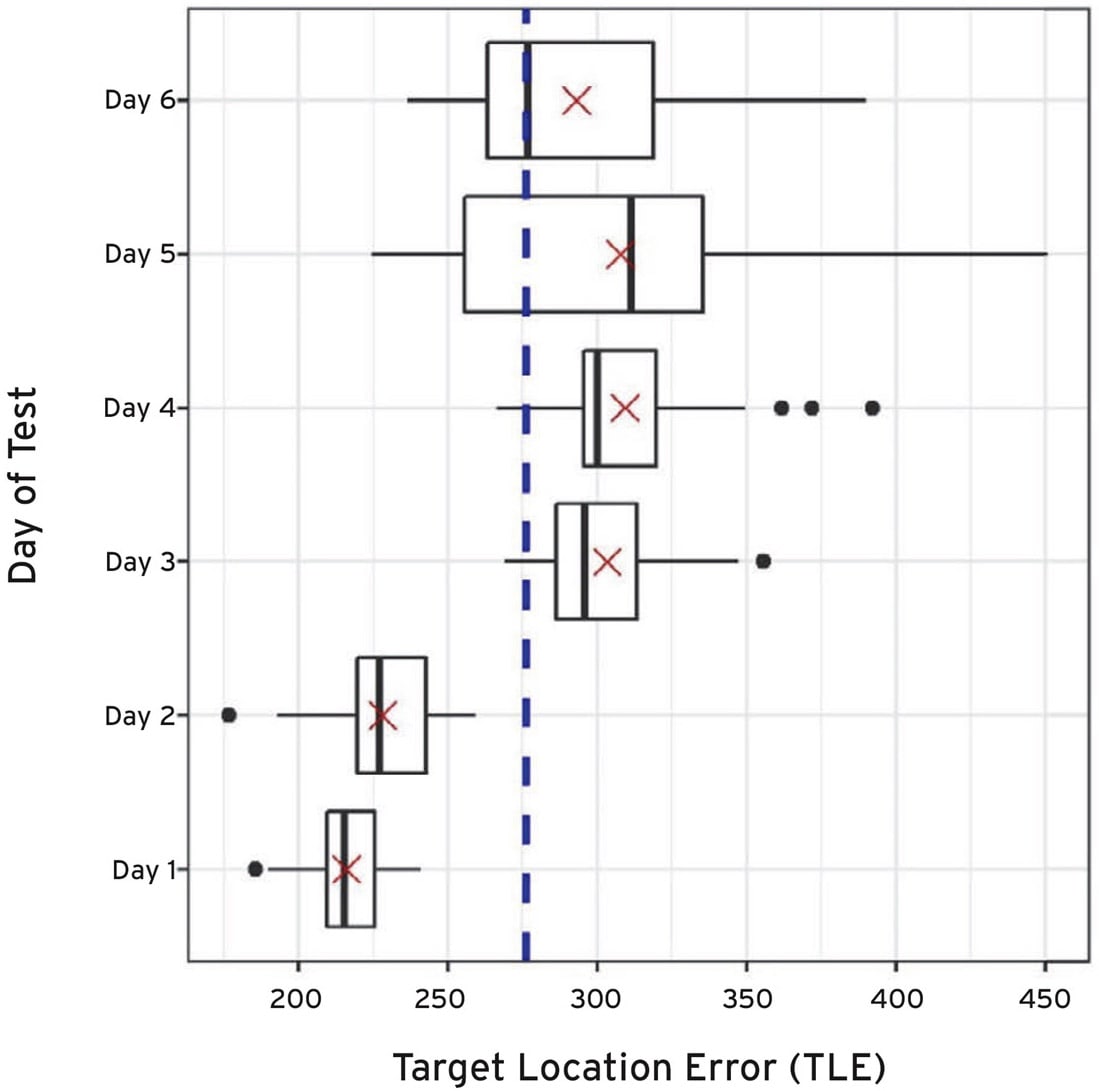

The test plan calls for collecting six days’ worth of data spread over the course of one month. The primary factor driving radar system performance is the distance between the UAV and the target. Therefore, the test requires that performance be measured along a range of distances to the target. Distance is analogous to seed type in the above example. Environmental factors (the state of the water over which the aircraft is flying, atmospheric conditions, etc.) will also affect radar performance. These will vary from day to day, meaning that TLE measurements will be correlated within each day. Day is analogous to the field factor in the agricultural example; that is, both factors partition the data into groups.

The accompanying figure shows the day-to-day variation of TLE. Measurements taken on the same day clearly cluster together. For example, the conditions on days 1 and 2 seem to have provided the best set of environmental factors for the UAV’s radar system. Ignoring the day-to-day variability could lead to incorrect conclusions about the system’s performance.
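The effect of ignoring day-level grouping can be illustrated with a small simulation. The following is a minimal sketch, not the authors' analysis. It omits the distance factor, it uses invented values (a 200 m mean TLE, a 30 m day-to-day standard deviation, and 15 m of within-day noise), and it estimates the variance components with simple ANOVA (method-of-moments) formulas for balanced data rather than the likelihood-based fitting that mixed-model software typically performs. The function names `simulate_tle` and `random_intercept_summary` are hypothetical.

```python
import random
import statistics


def simulate_tle(n_days=6, n_per_day=20, grand_mean=200.0,
                 day_sd=30.0, noise_sd=15.0, seed=1):
    """Simulate TLE (meters) where each day shares one random offset.

    All numeric defaults are invented for illustration only.
    """
    rng = random.Random(seed)
    data = {}
    for day in range(1, n_days + 1):
        day_effect = rng.gauss(0.0, day_sd)  # that day's environmental conditions
        data[day] = [grand_mean + day_effect + rng.gauss(0.0, noise_sd)
                     for _ in range(n_per_day)]
    return data


def random_intercept_summary(data):
    """ANOVA (method-of-moments) estimates for a balanced random intercept model."""
    days = sorted(data)
    k = len(days)            # number of days (groups)
    n = len(data[days[0]])   # observations per day
    if any(len(data[d]) != n for d in days):
        raise ValueError("this sketch assumes the same number of observations each day")

    day_means = [statistics.fmean(data[d]) for d in days]
    grand = statistics.fmean(day_means)  # equals the overall mean when balanced
    all_obs = [y for d in days for y in data[d]]

    # Within-day and between-day mean squares
    msw = sum((y - m) ** 2
              for d, m in zip(days, day_means)
              for y in data[d]) / (k * (n - 1))
    msb = n * sum((m - grand) ** 2 for m in day_means) / (k - 1)

    var_day = max((msb - msw) / n, 0.0)  # truncate a negative estimate at zero
    total = var_day + msw
    return {
        "grand_mean": grand,
        "var_within": msw,
        "var_day": var_day,
        "icc": var_day / total if total > 0 else 0.0,
        # Treats all k*n observations as independent
        "naive_se": statistics.stdev(all_obs) / (k * n) ** 0.5,
        # Accounts for the shared day effect: sqrt(MSB / (k*n))
        "grouped_se": (msb / (k * n)) ** 0.5,
    }


if __name__ == "__main__":
    summary = random_intercept_summary(simulate_tle())
    for name, value in summary.items():
        print(f"{name}: {value:.2f}")
```

In this balanced setting, `grouped_se` is larger than `naive_se` whenever the between-day mean square exceeds the within-day mean square. In that case, treating correlated measurements as independent understates the uncertainty in the average TLE, which is one way the wrong conclusion could be drawn when comparing against the 250-meter requirement.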

This work was done by Rebecca Medlin, John T. Haman, Matthew R. Avery, and Heather Wojton for the Institute for Defense Analyses. For more information, download the Technical Support Package (free white paper) below. IDA-0002

This Brief includes a Technical Support Package (TSP).

"The Purpose of Mixed-Effects Models in Test and Evaluation" (reference IDA-0002) is currently available for download from the TSP library.

Overview

The document titled "The Purpose of Mixed-Effects Models in Test and Evaluation," authored by Rebecca Medlin, John T. Haman, Matthew R. Avery, and Heather Wojton, discusses the critical role of mixed-effects models in analyzing operational test data, particularly in the context of defense evaluations. It emphasizes the necessity of accounting for uncertainty in test data, which often contains statistical noise from various sources.

Operational tests are essential for assessing system performance, but the data collected can exhibit intra-group correlation, making traditional analysis methods inadequate. The authors advocate for the use of mixed models, specifically the random intercept model, which allows for the representation of group-wide deviations from a grand mean. This approach effectively captures day-to-day variations in system performance while enabling generalization beyond the limited days of testing.

The document highlights the capabilities of the R package ciTools, which facilitates the generation of uncertainty estimates such as confidence intervals, prediction intervals, and quantile estimates for mixed models. This tool is particularly useful for analysts who need to report uncertainty bounds that reflect either day-to-day variability or specific conditions on particular days. The flexibility of ciTools allows users to tailor their uncertainty estimates to the needs of their analysis.

The publication is a product of the Institute for Defense Analyses (IDA), a nonprofit organization that operates Federally Funded Research and Development Centers, focusing on addressing complex U.S. security and science policy questions through objective analysis. The work was conducted under contract for the Office of the Director, Operational Test and Evaluation, underscoring its relevance to defense operations.

In summary, the document serves as a guide for analysts in the defense sector, illustrating the importance of mixed-effects models in operational test evaluations. It provides insights into statistical methodologies that enhance the reliability of performance assessments, ultimately contributing to more informed decision-making in defense operations. The authors' expertise and the practical applications of the discussed models and tools make this publication a valuable resource for professionals involved in test science and evaluation.
