Agile Robust Autonomy: Inspired by Connecting Natural Flight and Biological Sensors

Understanding the mechanics of insect flight could help improve the agility, autonomy, robustness and integrated sensing and processing of unmanned aerial vehicles.

The goal of this research was to understand insect flight in order to improve the agility, autonomy, robustness, and integrated sensing and processing of unmanned aerial vehicles. The work took a comparative approach across diverse species to identify general principles of insect flight, the environmental variables that affect natural insect flight, how insects recover from flight perturbations, and how flight connects to sensor capability, neural processing, and muscular control.

Insects are existence proofs of agile, robust, autonomous flight with minimal size, weight, and power requirements, qualities that are also desirable in human-engineered systems. AFRL studies insect sensors and flight to learn design principles for improved sensors and guidance and control algorithms. The current effort attempts to link the environmental information available to a flying insect with its flight behavior, and to relate both to the insect's sensors and neural processing.

Insect flights were recorded in both indoor and outdoor laboratory settings using high-speed cameras at frame rates of 500–1000 Hz. Indoor flights were recorded in a 2 m × 1 m × 1 m flight chamber lined with different optic flow patterns. Outdoor flights were recorded by releasing freshly captured insects in front of the high-speed cameras and allowing them to initiate escape flights. The goal was to compare flight kinematics inside the laboratory with those outdoors in the natural world.
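Comparing kinematics between indoor and outdoor flights requires turning tracked positions into velocities and accelerations. The brief does not describe how this was done; the sketch below is a minimal illustration assuming the insect's 3D position (in metres) is already available for every frame. The function name `flight_kinematics` is hypothetical, and the derivatives use NumPy's central-difference `np.gradient`.

```python
import numpy as np


def flight_kinematics(positions_m: np.ndarray, frame_rate_hz: float):
    """Estimate velocity, speed, and acceleration from a tracked 3D trajectory.

    positions_m: array of shape (n_frames, 3), position in metres per frame.
    Hypothetical helper: assumes tracking and 3D reconstruction are already done.
    """
    dt = 1.0 / frame_rate_hz  # inter-frame interval: 1 ms at 1000 Hz, 2 ms at 500 Hz
    # np.gradient uses central differences inside the array, one-sided at the ends
    velocity = np.gradient(positions_m, dt, axis=0)   # m/s, shape (n_frames, 3)
    acceleration = np.gradient(velocity, dt, axis=0)  # m/s^2, shape (n_frames, 3)
    speed = np.linalg.norm(velocity, axis=1)          # m/s, shape (n_frames,)
    return velocity, speed, acceleration


# Usage on a synthetic straight-line flight at a constant 2 m/s, sampled at 1000 Hz
t = np.arange(0.0, 0.1, 1.0 / 1000.0)
track = np.column_stack([2.0 * t, np.zeros_like(t), np.zeros_like(t)])
_, demo_speed, _ = flight_kinematics(track, 1000.0)
print(demo_speed.mean())  # approximately 2.0
```

Real tracks are noisy, and differentiation amplifies noise, so in practice the positions would usually be smoothed or low-pass filtered before this step.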

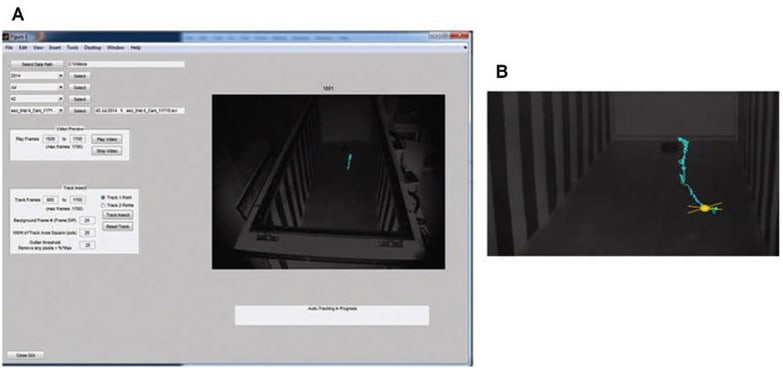

Because the captured dataset is extremely large, tracking the insect through the video frames had to be automated. With just two cameras recording at 1000 frames per second, the study cameras can record 8 seconds of data, so the insect's position would need to be found and recorded in 16,000 frames for a single behavior capture.
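The frame count follows directly from cameras × frame rate × duration. The short sketch below reproduces that arithmetic and, to show why manual digitizing does not scale, converts it to a hand-digitizing time using an assumed 5 seconds per frame; that per-frame figure is purely illustrative and does not come from the study.

```python
def frames_to_track(n_cameras: int, frame_rate_hz: int, duration_s: float) -> int:
    """Frames in which the insect must be located for one behavior capture."""
    return round(n_cameras * frame_rate_hz * duration_s)


def manual_digitizing_hours(n_frames: int, seconds_per_frame: float) -> float:
    """Hypothetical hand-digitizing effort, assuming a fixed time per frame."""
    return n_frames * seconds_per_frame / 3600.0


frames = frames_to_track(n_cameras=2, frame_rate_hz=1000, duration_s=8)
print(frames)  # 16000
# 5 s per frame is an illustrative assumption, not a value reported by the study
print(f"{manual_digitizing_hours(frames, seconds_per_frame=5.0):.1f} h")  # 22.2 h
```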

Work began on automating this process based on Ty Hedrick's algorithms (Hedrick, 2008). Figure 1 illustrates the challenges of adapting these techniques to indoor recordings; it also shows early behavior recordings of Green Darner dragonflies (Anax junius) in the flight chamber. The goal was to establish repeatable protocols for eliciting flight in insects large enough to carry a telemetry recording chip, so that flight kinematics, responses to optic flow stimuli, and muscle potentials could be correlated.
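As a rough illustration of what automated 2D tracking involves, the sketch below locates a single moving target in each frame by background subtraction and centroiding. This is a generic, simplified technique swapped in for illustration; it is not Hedrick's algorithm or the study's pipeline. It assumes a static camera, one insect in view, and steady lighting; the function name `track_centroids` and the `threshold` value are hypothetical.

```python
import numpy as np


def track_centroids(frames: np.ndarray, threshold: float = 30.0) -> np.ndarray:
    """Locate one moving target per frame in a grayscale video.

    frames: array of shape (n_frames, height, width).
    Returns an (n_frames, 2) array of (row, col) centroids, NaN where nothing is found.
    """
    frames = frames.astype(np.float64)  # avoid unsigned-integer wraparound below
    # The per-pixel temporal median approximates the static background,
    # because the insect occupies any given pixel in only a few frames
    background = np.median(frames, axis=0)
    centroids = np.full((frames.shape[0], 2), np.nan)
    for i, frame in enumerate(frames):
        mask = np.abs(frame - background) > threshold  # pixels that changed
        if mask.any():
            rows, cols = np.nonzero(mask)
            centroids[i] = rows.mean(), cols.mean()
    return centroids
```

With two calibrated cameras, the per-camera 2D centroids would then be combined into 3D positions; cluttered scenes, lighting changes, or the insect leaving the field of view would call for more robust detection than this sketch provides.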

Outdoor flight recordings have their own challenges, as illustrated in Figure 2. In addition, because the insects are captured and handled before release, the recordings likely do not yet capture completely natural kinematics. Future efforts will move toward completely natural conditions, recording flights of insects that have had no interference from the research team.

Gaze stabilization is also of interest, but, as Figure 1 shows, the insect's head is not easily discernible in free flight. It would also be difficult to induce the precise behaviors needed to trigger a gaze stabilization response. Therefore, work began on characterizing gaze stabilization in tethered insects. The stimulus is a rotating horizon line produced by UV and green LEDs.
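One common way to quantify a stabilization response is its gain and phase relative to the stimulus. The brief does not state the stimulus waveform or the analysis used, so the sketch below assumes the horizon is rolled sinusoidally at a known frequency and that head-roll angle has already been extracted from video. The function `gain_and_phase` is hypothetical; it fits each signal to a sinusoid by linear least squares and compares the fitted phasors.

```python
import numpy as np


def gain_and_phase(t_s, stimulus_deg, response_deg, freq_hz):
    """Gain and phase (degrees) of a response relative to a sinusoidal stimulus.

    Each signal is fit as a*sin(w t) + b*cos(w t) + c by linear least squares.
    Since R*sin(w t + phi) = R*cos(phi)*sin(w t) + R*sin(phi)*cos(w t),
    the pair (a, b) gives the phasor a + ib = R*exp(i*phi).
    """
    w = 2.0 * np.pi * freq_hz
    design = np.column_stack([np.sin(w * t_s), np.cos(w * t_s), np.ones_like(t_s)])

    def phasor(signal):
        (a, b, _offset), *_ = np.linalg.lstsq(design, signal, rcond=None)
        return complex(a, b)

    ratio = phasor(response_deg) / phasor(stimulus_deg)
    gain = abs(ratio)
    phase_deg = float(np.degrees(np.angle(ratio)))  # negative: response lags stimulus
    return gain, phase_deg


# Hypothetical example: horizon rolls +/-30 deg at 1 Hz; head follows at half
# amplitude with a 30 deg lag
t = np.arange(0.0, 2.0, 0.001)
stimulus = 30.0 * np.sin(2 * np.pi * 1.0 * t)
response = 15.0 * np.sin(2 * np.pi * 1.0 * t - np.pi / 6)
print(gain_and_phase(t, stimulus, response, freq_hz=1.0))
```

Under this convention, a gain near 1 with little phase lag would indicate that the head follows the horizon closely; repeating the measurement across stimulus frequencies would give a frequency response.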

This work was done by Jennifer Talley, PhD for the Air Force Research Laboratory. AFRL-0289

This Brief includes a Technical Support Package (TSP). "Agile Robust Autonomy: Inspired by Connecting Natural Flight and Biological Sensors" (reference AFRL-0289) is currently available for download from the TSP library.


Overview

The document titled "Agile Robust Autonomy: Inspired by Connecting Natural Flight and Biological Sensors," authored by Dr. Jennifer Talley and published by the Air Force Research Laboratory in March 2017, presents research aimed at enhancing the agility, autonomy, robustness, and integrated sensing and processing of unmanned aerial vehicles (UAVs) by drawing inspiration from insect flight.

The research, conducted under work unit W0PY from November 2013 to September 2016, employs a comparative methodology to understand the general principles of insect flight across various species. It focuses on several key areas: the environmental variables affecting natural flight, the mechanisms by which insects recover from flight perturbations, and the interplay between flight dynamics, sensor capabilities, neural processing, and muscular control.

The document outlines the significance of studying insect flight, which can provide insights into improving UAV performance in complex environments. The research includes high-speed recordings of insect flight, particularly of damselflies, to analyze their flight patterns and stabilization mechanisms. This analysis informs the development of UAVs that can navigate and adapt to dynamic conditions, as insects do in nature.

The report also discusses the challenges of automatically tracking insects and the importance of gaze stabilization, particularly in tethered damselflies. Additionally, it explores the electroretinography (ERG) of damselflies, which helps in understanding their visual processing capabilities, and examines the resolution of their compound eyes, which is vital for flight navigation.
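For a sense of what compound-eye resolution means quantitatively, a standard sampling-theory estimate is that a regular ommatidial array with interommatidial angle Δφ can represent spatial frequencies up to 1/(2Δφ) cycles per degree without aliasing. The overview does not say how the report measured resolution, so this sketch is only that textbook estimate; the function name and the 1.0° example angle are hypothetical, not damselfly values from the report.

```python
def sampling_limit_cpd(interommatidial_angle_deg: float) -> float:
    """Highest spatial frequency (cycles/degree) a regular ommatidial array can
    sample without aliasing: one grating period needs at least two ommatidia."""
    if interommatidial_angle_deg <= 0:
        raise ValueError("interommatidial angle must be positive")
    return 1.0 / (2.0 * interommatidial_angle_deg)


# Hypothetical interommatidial angle of 1.0 degree
print(sampling_limit_cpd(1.0))  # 0.5
```

Smaller interommatidial angles (denser ommatidia) raise this limit, although optical blur within each ommatidium can lower the resolution actually achieved.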

Overall, the document emphasizes the potential of biomimicry in advancing UAV technology, suggesting that insights gained from the study of insect flight can lead to significant improvements in the design and functionality of autonomous aerial systems. The findings aim to contribute to the development of more agile and robust UAVs capable of operating effectively in diverse and challenging environments.

This research not only enhances the understanding of insect flight but also paves the way for innovative applications in aerial robotics, ultimately benefiting military and civilian UAV operations. The report is approved for public release, ensuring that its findings are accessible for further scientific and technical information exchange.
