Universal Sparse Modeling

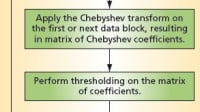

Sparse data models, where data is assumed to be well represented as a linear combination of a few elements from a dictionary, have gained considerable attention in recent years, and their use has led to state-of-the-art results in many signal and image processing tasks. Sparse modeling calls for constructing a succinct representation of some data as a combination of a few typical patterns (atoms) learned from the data itself.
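
As a minimal illustration of representing a signal with a few atoms, the Python sketch below (not taken from the brief) builds a signal from three atoms of a random, overcomplete dictionary and recovers a sparse code with orthogonal matching pursuit (OMP), a standard greedy sparse-coding method. The random dictionary, the function name `omp`, and the choice of OMP are assumptions made for illustration. In the work described here, the dictionary is learned from the data.

```python
import numpy as np

def omp(D, x, k):
    """Orthogonal matching pursuit (illustrative): greedily select up to k atoms
    (unit-norm columns of D) and refit x on them by least squares after each pick."""
    residual = x.copy()
    support = []
    coef = np.zeros(D.shape[1])
    for _ in range(k):
        # Pick the atom most correlated with the part of x not yet explained.
        j = int(np.argmax(np.abs(D.T @ residual)))
        if j not in support:
            support.append(j)
        # Least-squares coefficients on the current support.
        sol, *_ = np.linalg.lstsq(D[:, support], x, rcond=None)
        residual = x - D[:, support] @ sol
    coef[support] = sol
    return coef

rng = np.random.default_rng(0)
n, K = 64, 256                       # signal length, number of atoms (overcomplete: K > n)
D = rng.standard_normal((n, K))
D /= np.linalg.norm(D, axis=0)       # normalize atoms to unit length
alpha_true = np.zeros(K)
alpha_true[[3, 50, 200]] = [1.5, -2.0, 0.8]
x = D @ alpha_true                   # the signal is a combination of three atoms

alpha_hat = omp(D, x, k=3)
print("selected atoms:", np.flatnonzero(alpha_hat))
print("reconstruction error:", np.linalg.norm(x - D @ alpha_hat))
```

OMP is a greedy approximation to the ℓ0 (count of nonzeros) formulation. With a random dictionary of this size it usually, but not always, selects the true atoms.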

A critical component of sparse modeling is the actual sparsity of the representation, which is controlled by a regularization term (regularizer, for short) and its associated parameters. The choice of the functional form of the regularizer and its parameters is a challenging task. Several solutions to this problem have been proposed, ranging from the automatic tuning of the parameters to Bayesian models, where these parameters are themselves considered as random variables.
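
To show concretely how a regularizer's parameter controls sparsity, the sketch below assumes the widely used ℓ1 regularizer and minimizes ½‖x − Dα‖² + λ‖α‖₁ with the iterative shrinkage-thresholding algorithm (ISTA). The dictionary, the signal, the λ values, and the function names are arbitrary illustrations, not settings from the paper.

```python
import numpy as np

def soft_threshold(v, t):
    """Proximal operator of t * ||.||_1 (elementwise shrinkage toward zero)."""
    return np.sign(v) * np.maximum(np.abs(v) - t, 0.0)

def ista(D, x, lam, n_iter=500):
    """Minimize 0.5*||x - D a||^2 + lam*||a||_1 by iterative soft thresholding."""
    L = np.linalg.norm(D, 2) ** 2        # Lipschitz constant of the quadratic term's gradient
    a = np.zeros(D.shape[1])
    for _ in range(n_iter):
        grad = D.T @ (D @ a - x)         # gradient of the data-fit term
        a = soft_threshold(a - grad / L, lam / L)
    return a

rng = np.random.default_rng(1)
D = rng.standard_normal((64, 256))
D /= np.linalg.norm(D, axis=0)           # unit-norm atoms
x = rng.standard_normal(64)

for lam in [0.01, 0.1, 0.5, 1.0]:
    a = ista(D, x, lam)
    print(f"lambda={lam:<5} nonzeros={np.count_nonzero(a):3d} "
          f"residual={np.linalg.norm(x - D @ a):.3f}")
```

Larger λ generally yields fewer nonzero coefficients and a larger residual, and for λ ≥ max|Dᵀx| the solution is exactly zero. Having to choose λ by hand is the parameter-selection problem described above.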

This work addresses the challenge by proposing a family of regularizers that are robust to the choice of their parameters. The regularizers are derived using tools from information theory, specifically universal coding theory. The main idea is to treat sparse modeling as a code-length minimization problem, in which the regularizers define, through a probability assignment model, the code length needed to describe the sparse representation coefficients.
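
The brief states the idea (a regularizer is a code length obtained from a probability model) but gives no formulas. The sketch below assumes, for illustration, a Laplacian (two-sided exponential) model for each coefficient. With its rate θ fixed, the code length −log p(a | θ) is an ℓ1 penalty whose weight θ must be tuned. Integrating θ against a Gamma(κ, β) prior, a standard mixture device from universal coding, removes the dependence on a single θ and gives a closed-form logarithmic penalty. The Gamma prior, the parameter values, and the function names are assumptions here, not necessarily the exact construction in the paper.

```python
import math
import numpy as np

def laplacian_code_length(a, theta):
    """Ideal code length (nats) of coefficients a under a Laplacian with fixed rate theta:
    -log p(a | theta) = theta * |a| - log(theta / 2) per coefficient (an l1 penalty)."""
    a = np.abs(np.asarray(a, dtype=float))
    return float(np.sum(theta * a - math.log(theta / 2.0)))

def mixture_code_length(a, kappa, beta):
    """Code length (nats) when theta is integrated against a Gamma(kappa, beta) prior:
    p(a) = (kappa / 2) * beta**kappa * (|a| + beta)**(-(kappa + 1))."""
    a = np.abs(np.asarray(a, dtype=float))
    return float(np.sum((kappa + 1) * np.log(a + beta)
                        - math.log(kappa / 2.0) - kappa * math.log(beta)))

def mixture_density_numeric(v, kappa, beta, theta_max=200.0, n=400001):
    """Trapezoid-rule integral of the same mixture over theta (a check on the closed form)."""
    theta = np.linspace(1e-9, theta_max, n)
    gamma_pdf = beta**kappa * theta**(kappa - 1) * np.exp(-beta * theta) / math.gamma(kappa)
    integrand = 0.5 * theta * np.exp(-theta * abs(v)) * gamma_pdf
    h = theta[1] - theta[0]
    return float(h * (integrand.sum() - 0.5 * (integrand[0] + integrand[-1])))

a = np.array([0.0, 0.05, -1.2, 3.0])     # example coefficients
for theta in (0.5, 2.0, 8.0):
    print(f"Laplacian, theta={theta}: {laplacian_code_length(a, theta):.3f} nats")
print(f"Mixture, kappa=3, beta=1: {mixture_code_length(a, 3.0, 1.0):.3f} nats")
```

Each Laplacian code length depends strongly on θ. The mixture code length is a logarithmic penalty, (κ + 1) log(|a| + β), which replaces the choice of θ with the hyperparameters κ and β. The brief's claim is that regularizers of this universal type are robust to such parameters.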

The work also introduces tools from universal modeling into sparse modeling, bringing a fundamental and well-supported theoretical perspective to this important and popular research area. The full paper covers the standard sparse modeling framework, the derivation and implementation of the proposed universal modeling framework, and experimental results showing its practical benefits for image representation and classification.

This work was done by Ignacio Ramirez and Guillermo Sapiro of the University of Minnesota. UMINN-0001

This Brief includes a Technical Support Package (TSP).

Universal Sparse Modeling (reference UMINN-0001) is currently available for download from the TSP library.

Overview

The document "Universal Sparse Modeling" by Ignacio Ramírez and Guillermo Sapiro presents a comprehensive framework for sparse data modeling, emphasizing the importance of sparsity in representing data efficiently. Sparse modeling involves constructing a representation of data as a linear combination of a few elements, or "atoms," drawn from a learned dictionary. This approach has gained significant traction in signal and image processing, yielding state-of-the-art results in various applications.

The authors highlight that the choice of the sparsity regularization term is crucial for the success of sparse models. Traditional regularizers, such as the ℓ0 and ℓ1 norms, are discussed, but the paper proposes a novel framework that uses tools from information theory, particularly universal coding theory. This approach aims to design sparsity regularization terms that offer both theoretical and practical advantages over conventional ones, leading to improved performance in coding, reconstruction, and classification tasks.
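
The brief does not describe the optimization procedure. Regularizers that arise as code lengths of the mixture type are typically nonconvex, for example a penalty of the form λ Σ log(|a_i| + β). The sketch below assumes such a log penalty and minimizes it with iteratively reweighted ℓ1 (a local linear approximation), a standard majorization-minimization scheme that is not necessarily the authors' solver. λ, β, and all function names are illustrative.

```python
import numpy as np

def weighted_ista(D, x, weights, a0, n_iter=300):
    """Minimize 0.5*||x - D a||^2 + sum_i weights[i] * |a_i| by ISTA, warm-started at a0."""
    L = np.linalg.norm(D, 2) ** 2              # Lipschitz constant of the quadratic's gradient
    a = a0.copy()
    for _ in range(n_iter):
        v = a - D.T @ (D @ a - x) / L          # gradient step on the quadratic term
        a = np.sign(v) * np.maximum(np.abs(v) - weights / L, 0.0)  # weighted soft threshold
    return a

def log_penalty_objective(D, x, a, lam, beta):
    """0.5*||x - D a||^2 + lam * sum_i log(|a_i| + beta): a nonconvex, log-type penalty."""
    return 0.5 * np.sum((x - D @ a) ** 2) + lam * np.sum(np.log(np.abs(a) + beta))

def reweighted_l1(D, x, lam, beta, n_outer=10):
    """Majorization-minimization: linearize the concave log at the current iterate
    and solve the resulting weighted-l1 problem, recording the true objective."""
    a = np.zeros(D.shape[1])
    history = [log_penalty_objective(D, x, a, lam, beta)]
    for _ in range(n_outer):
        weights = lam / (np.abs(a) + beta)     # small coefficients get large weights
        a = weighted_ista(D, x, weights, a)
        history.append(log_penalty_objective(D, x, a, lam, beta))
    return a, history

rng = np.random.default_rng(2)
D = rng.standard_normal((64, 256))
D /= np.linalg.norm(D, axis=0)
a_true = np.zeros(256)
a_true[rng.choice(256, size=5, replace=False)] = 2.0 * rng.standard_normal(5)
x = D @ a_true + 0.01 * rng.standard_normal(64)

a_hat, history = reweighted_l1(D, x, lam=0.05, beta=0.1)
print("nonzeros:", np.count_nonzero(a_hat))
print("objective per outer step:", np.round(history, 4))
```

Because each outer step minimizes an upper bound that touches the objective at the current iterate, and each warm-started ISTA run only decreases that bound, the recorded objective values never increase.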

The paper also addresses the challenges associated with selecting the appropriate functional form of the regularizer and its parameters, which is critical for effective sparse modeling. The authors provide theoretical foundations for their proposed framework and illustrate its application through examples in image denoising and classification, demonstrating the practical benefits of their approach.

Additionally, the document discusses how low mutual coherence of the learned dictionaries (small inner products between distinct atoms), promoted through a norm of the dictionary's Gram matrix, improves those dictionaries and further contributes to the effectiveness of the sparse modeling process. The authors emphasize that their framework not only improves coding performance but also enables simple and fast parameter estimation.
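
The brief mentions mutual coherence and a Gram-matrix norm without giving formulas. The sketch below uses the standard definitions: mutual coherence as the largest |⟨d_i, d_j⟩| between distinct normalized atoms, and the squared Frobenius norm of DᵀD − I as a smooth coherence measure that a dictionary-learning cost could penalize. Whether the paper uses exactly this Gram-matrix norm is an assumption, and the function names are hypothetical.

```python
import numpy as np

def normalize_columns(D):
    """Scale each atom (column) to unit Euclidean norm."""
    return D / np.linalg.norm(D, axis=0, keepdims=True)

def mutual_coherence(D):
    """Largest |<d_i, d_j>| over pairs of distinct normalized atoms."""
    Dn = normalize_columns(D)
    G = Dn.T @ Dn                       # Gram matrix with unit diagonal
    np.fill_diagonal(G, 0.0)            # ignore each atom's product with itself
    return float(np.max(np.abs(G)))

def gram_penalty(D):
    """||Dn^T Dn - I||_F^2 for the normalized dictionary Dn: zero only when
    all atoms are mutually orthogonal, and differentiable in D."""
    Dn = normalize_columns(D)
    G = Dn.T @ Dn
    return float(np.sum((G - np.eye(D.shape[1])) ** 2))

rng = np.random.default_rng(3)
D_random = rng.standard_normal((16, 32))           # overcomplete: 32 atoms in 16 dimensions
D_dup = np.hstack([np.eye(8), np.eye(8)[:, :1]])   # a basis plus one repeated atom
for name, D in [("identity", np.eye(8)), ("random 16x32", D_random), ("duplicate", D_dup)]:
    print(f"{name:>13}: coherence={mutual_coherence(D):.3f}  "
          f"gram_penalty={gram_penalty(D):.3f}")
```

The maximum in `mutual_coherence` is not smooth, whereas `gram_penalty` can be added directly to a gradient-based dictionary-learning cost. For K unit-norm atoms in n dimensions, the Welch bound sqrt((K − n)/(n(K − 1))) sets a floor on the achievable coherence.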

Suggested future work includes designing priors that account for the nonzero probability mass at zero in overcomplete models, and learning model parameters online from noisy data. The authors also intend to apply their framework to model selection using the Minimum Description Length (MDL) principle.

Overall, the document presents a significant advancement in the field of sparse modeling, combining theoretical insights with practical applications, and sets the stage for future research directions that could further enhance the capabilities of sparse data representation in various domains.
