Developing Knowledge and Understanding for Autonomous Systems for Analysis and Assessment Events and Campaigns

An effort to overcome the challenges involved in developing complete performance ontologies and test methodologies for evaluating the performance of autonomous systems.

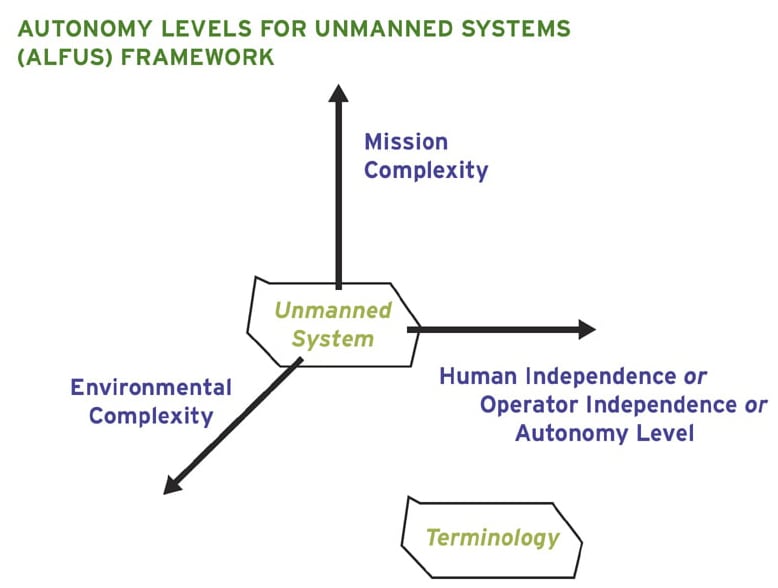

There are many challenges in developing complete performance ontologies and test methodologies to define and evaluate the performance of autonomous systems. Chief among them is the dynamic environment in which an autonomous system is expected to operate. Changes in that environment are expected to affect system performance, so test methodologies must account for all aspects of this dynamic environment.
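A simple full-factorial test matrix shows one reason full coverage is hard. This sketch is illustrative only. The factor names and levels (`terrain`, `visibility`, `comms`, `threat`) are hypothetical and do not come from the report, and the helpers `enumerate_conditions` and `matrix_size` were invented for this example. The code enumerates every combination of discrete environmental conditions and shows that the number of test conditions is the product of the level counts.

```python
from itertools import product
from math import prod

# Hypothetical environmental factors and levels; NOT taken from the report.
FACTORS = {
    "terrain": ["paved", "off-road", "urban"],
    "visibility": ["clear", "fog", "night"],
    "comms": ["full", "degraded", "denied"],
    "threat": ["none", "present"],
}


def enumerate_conditions(factors):
    """Yield every combination of factor levels as a dict (full-factorial design)."""
    names = list(factors)
    for levels in product(*(factors[name] for name in names)):
        yield dict(zip(names, levels))


def matrix_size(factors) -> int:
    """Number of test conditions: the product of each factor's level count."""
    return prod(len(levels) for levels in factors.values())


if __name__ == "__main__":
    print(matrix_size(FACTORS))
    print(next(enumerate_conditions(FACTORS)))
```

With the assumed factors above, `matrix_size(FACTORS)` is 3 × 3 × 3 × 2 = 54. Adding one more three-level factor would triple that to 162. Even this grid treats each condition as static, while the concern raised here is an environment that changes while the system is operating.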

Automation and autonomy offer significant military value in reducing danger to warfighters, in increasing the speed and accuracy of time-critical operations, and in reducing the supervisory burden of warfighters and control systems.

Autonomous systems can range from simple adaptive automatic systems designed to operate in highly structured environments to fully self-governing systems designed to perform in highly dynamic and complex environments. Most Army autonomous systems are expected to fall between the automatic and semi-autonomous regimes.

Automated systems are systems that require little or no human involvement to perform well-defined tasks with predetermined responses. Their responses are generally rule-based, and they are designed to operate in well-structured environments with few parameters. Automated systems can be adaptive through the use of environmental sensors and rule-based adaptation. An example of an automated system is a laundry machine that adapts to different laundry loads.
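Rule-based adaptation can be sketched as a fixed lookup that maps a sensor reading to a predetermined response. This is a minimal illustration that assumes a hypothetical load sensor reporting kilograms. The thresholds and settings in `RULES`, along with the names `WashSettings` and `select_settings`, were invented for the example and do not come from any real appliance.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class WashSettings:
    water_liters: int
    cycle_minutes: int


# Hypothetical rule table: (upper bound on sensed load in kg, predetermined response).
# Rules are checked in order; the first bound the load does not exceed wins.
RULES = [
    (2.0, WashSettings(water_liters=30, cycle_minutes=40)),
    (5.0, WashSettings(water_liters=50, cycle_minutes=55)),
    (float("inf"), WashSettings(water_liters=70, cycle_minutes=70)),
]


def select_settings(load_kg: float) -> WashSettings:
    """Map a load-sensor reading to a predetermined response (rule-based adaptation)."""
    if load_kg < 0:
        raise ValueError("load must be non-negative")
    for upper_bound, settings in RULES:
        if load_kg <= upper_bound:
            return settings
    # Unreachable: the last bound is infinity, so every valid load matches a rule.
    raise AssertionError("rule table must cover all loads")
```

For example, `select_settings(3.0)` returns `WashSettings(water_liters=50, cycle_minutes=55)`. The machine adapts to its load, but it can only choose among responses fixed at design time. That limitation is what separates it from the self-governing systems described next.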

Autonomous systems are characterized by self-governance in pursuit of their mission. Self-governance reduces the operator's control burden, freeing him or her to perform other tasks. Autonomous systems can be broadly classified by their level of autonomy:

Semi-Autonomous Systems perform limited control activities to react to changes in the environment. Automated and semi-autonomous systems overlap in well-structured environments. An example of a semi-autonomous system is a self-navigating vacuum cleaner that can recognize and maneuver around obstacles.

Nearly Fully Autonomous Systems can perform many automated tasks, but their automatic functions are still activated or deactivated by an operator. When activated, these systems can function without operator control, but they lack some of the adaptability and decision-making of a fully autonomous system. An example of a nearly fully autonomous system is a self-driving car that can maneuver around obstacles, sense and interpret street signs and traffic lights, and choose the best route to its destination.

Fully Autonomous Systems require no human intervention to perform tasks, even in drastically changing environments. A fully autonomous system assesses the environment and adapts to it to complete its mission. An example of a fully autonomous system is a deep-space probe expected to complete its mission without communication from Earth.
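For analysis, the four levels above can be encoded as an ordered scale. This sketch assumes a strict ordering is acceptable, which simplifies the point that automated and semi-autonomous systems overlap in well-structured environments. The names `AutonomyLevel`, `EXAMPLES`, `within_range`, and `ARMY_TYPICAL` are hypothetical. The example systems and the expected Army range (automatic to semi-autonomous) come from the text.

```python
from enum import IntEnum


class AutonomyLevel(IntEnum):
    """Levels described in the brief, ordered from least to most self-governing.

    Treating them as a strict ordinal scale is a simplifying assumption;
    automated and semi-autonomous systems can overlap in well-structured
    environments.
    """
    AUTOMATED = 0
    SEMI_AUTONOMOUS = 1
    NEARLY_FULLY_AUTONOMOUS = 2
    FULLY_AUTONOMOUS = 3


# Example systems named in the brief, tagged with their level.
EXAMPLES = {
    "laundry machine": AutonomyLevel.AUTOMATED,
    "self-navigating vacuum cleaner": AutonomyLevel.SEMI_AUTONOMOUS,
    "self-driving car": AutonomyLevel.NEARLY_FULLY_AUTONOMOUS,
    "deep-space probe": AutonomyLevel.FULLY_AUTONOMOUS,
}

# Most Army systems are expected to fall between automatic and semi-autonomous.
ARMY_TYPICAL = (AutonomyLevel.AUTOMATED, AutonomyLevel.SEMI_AUTONOMOUS)


def within_range(level: AutonomyLevel, lowest: AutonomyLevel, highest: AutonomyLevel) -> bool:
    """Return True if level lies in the inclusive band [lowest, highest]."""
    if lowest > highest:
        raise ValueError("lowest must not exceed highest")
    return lowest <= level <= highest
```

Under these assumptions, `within_range(EXAMPLES["self-navigating vacuum cleaner"], *ARMY_TYPICAL)` is `True`, and the same check for the self-driving car is `False`. The sketch uses an `IntEnum` so that levels compare with `<` and `>`. A richer performance ontology would need separate attributes for the kinds of adaptation each level supports.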

An adaptive system can change its performance according to its state, its environment, and a changing mission. Adaptive systems can range from automatic to fully autonomous. An adaptive system can also be a set of discrete, interacting, interdependent entities, real or abstract, that react together to environmental changes or changes in system status. A distributed adaptive system is one in which an understanding of the individual parts does not necessarily convey an understanding of the whole system's behavior.

The modern battlefield has automated systems and autonomous systems of various levels working together in a complex, unstructured operational environment. Future battlefields are expected to have an increasing number of autonomous systems working together or independently on shared or separate missions.

This work was done by Jayashree Harikumar and Philip Chan for the Army CCDC Data & Analysis Center. ARL-0219

This Brief includes a Technical Support Package (TSP). "Developing Knowledge and Understanding for Autonomous Systems for Analysis and Assessment Events and Campaigns" (reference ARL-0219) is currently available for download from the TSP library.


Overview

The document titled "Developing Knowledge and Understanding for Autonomous Systems for Analysis and Assessment Events and Campaigns," authored by Jayashree Harikumar and Philip Chan, is a technical report published in February 2019 by the U.S. Army Combat Capabilities Development Command Data & Analysis Center. It focuses on the integration and application of autonomous systems in military analysis and assessment contexts.

The report emphasizes the growing importance of autonomous systems in modern warfare and military operations. It outlines the need for a comprehensive understanding of these systems to effectively analyze and assess their performance in various scenarios, including events and campaigns. The authors argue that as autonomous technologies evolve, so too must the methodologies and frameworks used to evaluate their effectiveness and reliability.

Key components of the report include a discussion of the challenges associated with deploying autonomous systems, such as the need for robust data collection and analysis techniques and for metrics to assess system performance. The authors highlight the significance of interdisciplinary collaboration in advancing the knowledge base surrounding autonomous systems, suggesting that insights from fields such as artificial intelligence, robotics, and human factors engineering are crucial for successful implementation.

The report also addresses the ethical and operational implications of using autonomous systems in military contexts. It raises questions about decision-making processes, accountability, and the potential impact on human operators. The authors advocate for the establishment of guidelines and best practices to ensure that autonomous systems are used responsibly and effectively.

Furthermore, the document outlines a framework for developing knowledge and understanding of autonomous systems, which includes identifying key research areas, fostering collaboration among stakeholders, and promoting continuous learning and adaptation. The authors stress the importance of training personnel to work alongside these systems, ensuring that human operators are equipped to make informed decisions based on the data and insights provided by autonomous technologies.

In conclusion, the report serves as a foundational document for military and defense organizations seeking to harness the potential of autonomous systems. It provides a roadmap for future research and development efforts, emphasizing the need for a strategic approach to understanding and integrating these technologies into military operations. The findings and recommendations presented aim to enhance the effectiveness of autonomous systems in supporting analysis and assessment activities in defense contexts.
