Ensuring the Compliance of Avionics Software with DO-178C

Because they must meet the most stringent requirements for reliability, safety, and security, aerospace and defense projects involve lengthy software development schedules and have become among the most challenging to complete. In response to the increasing size and complexity of software used in airborne systems, the guidance document for certifying such systems has gone through several revisions, the latest being DO-178C.

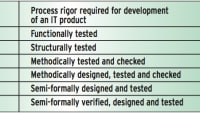

Table 2-1 of the document requires that, for a system to be compliant, it be assigned one of five failure condition categories according to the hazard associated with its failure. Severity ranges from “Catastrophic”, which could involve multiple fatalities and the loss of the aircraft, to “No Effect” on safety. Each category maps to an associated Design Assurance Level (DAL), from A (Catastrophic) to E (No Effect), so that the level of quality assurance required in the software's production is proportionate to its DAL. Objectives are detailed for each DAL throughout the development process, including requirements specification, design, coding, life cycle traceability, and verification. Thorough, robust testing is a must, and a comprehensive suite of analysis, test, and traceability tools is similarly essential.
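The category-to-level mapping amounts to a simple lookup. The minimal C++ sketch below is illustrative only: the enumerator names and the helper `dalFor` are our own, not taken from the standard. The three intermediate categories between the two extremes named above are Hazardous, Major, and Minor.

```cpp
// Failure condition categories, most to least severe.
enum class FailureCondition { Catastrophic, Hazardous, Major, Minor, NoEffect };

// Design Assurance Levels; A demands the most rigorous assurance.
enum class Dal { A, B, C, D, E };

// Hypothetical helper: map a failure condition category to its DAL.
constexpr Dal dalFor(FailureCondition fc)
{
    switch (fc) {
        case FailureCondition::Catastrophic: return Dal::A;
        case FailureCondition::Hazardous:    return Dal::B;
        case FailureCondition::Major:        return Dal::C;
        case FailureCondition::Minor:        return Dal::D;
        case FailureCondition::NoEffect:     return Dal::E;
    }
    return Dal::A;  // not reachable with a valid enumerator; default to the strictest level
}

// Checked at compile time: the most severe category gets the most demanding level.
static_assert(dalFor(FailureCondition::Catastrophic) == Dal::A, "Catastrophic maps to DAL A");
```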

DO-178C requires that source code be written in accordance with a set of rules (a “coding standard”) against which it can be analyzed, tested, and verified for compliance. It does not mandate a particular standard, but it does require a programming language with unambiguous syntax and clear control of data, together with definite naming conventions and constraints on complexity. The most popular coding standards are MISRA C and MISRA C++, which now include guidelines for software security, but there are alternatives, including the JSF++ AV standard used on the F-35 Joint Strike Fighter and beyond. Ada has its own options, such as SPARK and the Ravenscar profile, both language subsets designed for safety-critical, hard real-time computing.
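As an illustration only, the hypothetical function below follows practices that standards such as MISRA C++ and JSF++ AV encourage: fixed-width integer types, braces on every branch, a default label in every switch statement, and a single point of exit. The function name, the enumeration, and the 4000-count full-scale limit are assumptions, and no particular rule numbers are implied.

```cpp
#include <cstdint>

// Hypothetical sensor modes.
enum class SensorMode : std::uint8_t { Off, Standby, Active };

// Assumed full-scale limit for the raw sensor count.
constexpr std::uint16_t kFullScale = 4000U;

std::uint16_t scaledReading(SensorMode mode, std::uint16_t raw)
{
    std::uint16_t result = 0U;          // single variable returned at a single exit point

    switch (mode)
    {
        case SensorMode::Active:
        {
            if (raw > kFullScale)
            {
                result = kFullScale;    // clamp out-of-range counts
            }
            else
            {
                result = raw;
            }
            break;
        }
        case SensorMode::Standby:
        {
            result = 0U;
            break;
        }
        default:                        // Off, or any unexpected value
        {
            result = 0U;
            break;
        }
    }

    return result;
}
```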

The static analysis of source code may be thought of as an “automated inspection”, as opposed to the dynamic testing of an executable binary derived from that source code. A static analysis tool can verify that source code adheres to the specified coding standard and hence help meet that DO-178C objective. It also provides the means to show that other objectives have been met, for example by measuring the complexity of the code and by using data flow analysis to detect uninitialized or unused variables and constants.
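To make data flow analysis concrete, the sketch below analyzes a toy, branch-free program in which each statement writes at most one variable and reads several. It flags variables read before they are written (a possible uninitialized use) and variables written but never read. The `Stmt` representation and the `analyse` function are hypothetical simplifications; production tools work on full control-flow graphs built from real source code.

```cpp
#include <set>
#include <string>
#include <vector>

// One statement of a toy, branch-free program: it writes at most one
// variable and reads any number of them.
struct Stmt {
    std::string def;                  // variable written ("" if none)
    std::vector<std::string> uses;    // variables read
};

struct DataFlowFindings {
    std::set<std::string> usedBeforeSet;    // possible uninitialized reads
    std::set<std::string> setButNeverUsed;  // written but never read anywhere
};

// `inputs` are treated as initialized on entry.
DataFlowFindings analyse(const std::vector<Stmt>& program,
                         const std::set<std::string>& inputs)
{
    DataFlowFindings findings;
    std::set<std::string> defined = inputs;
    std::set<std::string> read;

    for (const Stmt& s : program) {
        for (const std::string& u : s.uses) {       // reads happen before the write
            if (defined.count(u) == 0U) {
                findings.usedBeforeSet.insert(u);
            }
            read.insert(u);
        }
        if (!s.def.empty()) {
            defined.insert(s.def);
        }
    }
    for (const std::string& d : defined) {
        if ((read.count(d) == 0U) && (inputs.count(d) == 0U)) {
            findings.setButNeverUsed.insert(d);
        }
    }
    return findings;
}
```

A program's result can be modeled as a final statement that only reads it, so that outputs are not reported as unused.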

Verification Beyond Coding Standards

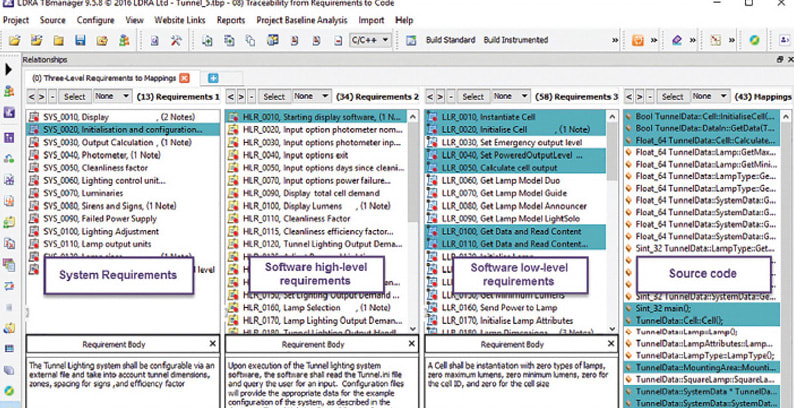

As well as providing these metrics on the nature of the source code, detailed and comprehensive static analysis helps ensure that the code base is in a state where other tools can also contribute to developing critical avionics systems that are certifiably reliable, safe, and secure. For example, DO-178C mandates a requirements-based development and verification process that incorporates bi-directional requirements traceability across the lifecycle. High-level requirements must be traceable to low-level requirements and ultimately to source code, and source code must be traceable back up to low- and high-level requirements (Figure 1). Source code written in accordance with a coding standard generally lends itself to such mapping.
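Bi-directional traceability lends itself to a mechanical check. The sketch below reports gaps in both directions: high-level requirements with no low-level requirement, low-level requirements with no parent or no implementing code, and code that traces to no requirement at all. It uses hypothetical identifiers and plain lists of parent/child links (and assumes C++17). Real projects would draw these links from a requirements management or traceability tool.

```cpp
#include <set>
#include <string>
#include <utility>
#include <vector>

using Id    = std::string;
using Links = std::vector<std::pair<Id, Id>>;   // (parent, child) trace links

struct TraceGaps {
    std::set<Id> hlrWithoutLlr;    // downward: high-level requirement not decomposed
    std::set<Id> llrWithoutCode;   // downward: low-level requirement not implemented
    std::set<Id> llrWithoutHlr;    // upward: low-level requirement with no parent
    std::set<Id> codeWithoutLlr;   // upward: code that traces to no requirement
};

TraceGaps checkTrace(const std::set<Id>& hlrs, const std::set<Id>& llrs,
                     const std::set<Id>& codeUnits,
                     const Links& hlrToLlr, const Links& llrToCode)
{
    std::set<Id> hlrTracedDown, llrTracedUp, llrTracedDown, codeTracedUp;
    for (const auto& [hlr, llr] : hlrToLlr) {
        hlrTracedDown.insert(hlr);
        llrTracedUp.insert(llr);
    }
    for (const auto& [llr, code] : llrToCode) {
        llrTracedDown.insert(llr);
        codeTracedUp.insert(code);
    }

    TraceGaps gaps;
    for (const Id& h : hlrs) {
        if (hlrTracedDown.count(h) == 0U) { gaps.hlrWithoutLlr.insert(h); }
    }
    for (const Id& l : llrs) {
        if (llrTracedDown.count(l) == 0U) { gaps.llrWithoutCode.insert(l); }
        if (llrTracedUp.count(l) == 0U)   { gaps.llrWithoutHlr.insert(l); }
    }
    for (const Id& c : codeUnits) {
        if (codeTracedUp.count(c) == 0U) { gaps.codeWithoutLlr.insert(c); }
    }
    return gaps;
}
```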

Static analysis also establishes an understanding of the structure of the code and the data, which is useful information in itself and an essential foundation for the dynamic analysis of the software system and its components. In accordance with DO-178C, the primary thrust of dynamic analysis is to prove the correctness of code relating to functional safety (“functional tests”) and therefore to show that the functional safety requirements have been met. Because compromised security can have safety implications, functional testing can also demonstrate robust security, perhaps by simulating attempts to gain control of a device or by feeding it incorrect data that would change its mission. Functional testing also provides evidence of robustness in the face of the unexpected, such as illegal inputs and anomalous conditions.
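A requirements-based test set typically combines functional cases with robustness cases of the kind described above. The sketch below assumes a hypothetical `validateAirspeed` routine with an assumed 0 to 600 knot validity envelope. The nominal and boundary cases (FT) check the functional requirement, while the negative, over-range, NaN, and infinite inputs (RB) exercise robustness. Test identifiers and limits are illustrative only.

```cpp
#include <cmath>
#include <limits>

enum class AirspeedStatus { Valid, OutOfRange, NotANumber };

struct AirspeedResult {
    AirspeedStatus status;
    double knots;                         // last good value is held when the input is rejected
};

// Assumed validity envelope (knots); real limits come from the system requirements.
constexpr double kMinKnots = 0.0;
constexpr double kMaxKnots = 600.0;

// Hypothetical routine under test.
AirspeedResult validateAirspeed(double raw, double lastGood)
{
    AirspeedResult r{AirspeedStatus::Valid, raw};
    if (std::isnan(raw)) {
        r = AirspeedResult{AirspeedStatus::NotANumber, lastGood};
    } else if ((raw < kMinKnots) || (raw > kMaxKnots)) {   // also catches +/- infinity
        r = AirspeedResult{AirspeedStatus::OutOfRange, lastGood};
    }
    return r;
}

// Requirements-based test table: functional (FT) and robustness (RB) cases.
struct TestCase { const char* id; double input; AirspeedStatus status; double knots; };

constexpr double kLastGood = 180.0;
const double kNaN = std::numeric_limits<double>::quiet_NaN();
const double kInf = std::numeric_limits<double>::infinity();

const TestCase kCases[] = {
    {"FT-1 nominal",     250.0, AirspeedStatus::Valid,      250.0},
    {"FT-2 lower bound",   0.0, AirspeedStatus::Valid,        0.0},
    {"FT-3 upper bound", 600.0, AirspeedStatus::Valid,      600.0},
    {"RB-1 negative",     -5.0, AirspeedStatus::OutOfRange, kLastGood},
    {"RB-2 over range", 9999.0, AirspeedStatus::OutOfRange, kLastGood},
    {"RB-3 NaN",          kNaN, AirspeedStatus::NotANumber, kLastGood},
    {"RB-4 infinity",     kInf, AirspeedStatus::OutOfRange, kLastGood},
};

// Returns the number of failing cases (0 means every case passed).
int failedCases()
{
    int failures = 0;
    for (const TestCase& tc : kCases) {
        const AirspeedResult r = validateAirspeed(tc.input, kLastGood);
        if ((r.status != tc.status) || (r.knots != tc.knots)) {
            ++failures;
        }
    }
    return failures;
}
```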

Structural Coverage Analysis (SCA) is another key DO-178C objective. It involves collating evidence to show which parts of the code base have been exercised during test. The verification of requirements through dynamic analysis provides a basis for the initial stages of structural coverage analysis: once functional test cases have been executed and passed, the resulting code coverage can be reviewed, revealing which code structures and interfaces have not been exercised during requirements-based testing. If coverage is incomplete, additional requirements-based tests can be added to exercise the software structure completely. Additional low-level requirements-based tests are often added to supplement functional tests, filling gaps in structural coverage whilst verifying software component behavior.
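The mechanics of structural coverage can be sketched with hand-placed probes. In the hypothetical example below, each probe marks one outcome of a decision in `rateLimit`. After a set of tests has run, `unexercisedProbes` lists the outcomes that were never reached, which is the gap an additional requirements-based test must fill. Commercial tools insert equivalent instrumentation automatically; the probe scheme here is our own simplification.

```cpp
#include <array>
#include <cstddef>
#include <vector>

// One flag per probe; each probe marks one outcome of a decision.
constexpr std::size_t kProbeCount = 4U;
std::array<bool, kProbeCount> g_hit{};    // value-initialized: no probe hit yet

void probe(std::size_t id) { g_hit.at(id) = true; }

// Hypothetical code under test, instrumented by hand: limit the change
// from `current` toward `requested` to at most `maxStep` per call.
int rateLimit(int requested, int current, int maxStep)
{
    int next = requested;
    if ((requested - current) > maxStep) {
        probe(0U);                         // decision 1 true: step up is limited
        next = current + maxStep;
    } else {
        probe(1U);                         // decision 1 false
    }
    if ((current - requested) > maxStep) {
        probe(2U);                         // decision 2 true: step down is limited
        next = current - maxStep;
    } else {
        probe(3U);                         // decision 2 false
    }
    return next;
}

// Probes never reached by the tests executed so far.
std::vector<std::size_t> unexercisedProbes()
{
    std::vector<std::size_t> missed;
    for (std::size_t i = 0U; i < kProbeCount; ++i) {
        if (!g_hit[i]) {
            missed.push_back(i);
        }
    }
    return missed;
}
```

A single test requesting a large increase reaches probes 0 and 3 only; adding a test that requests a large decrease reaches probes 1 and 2 and closes the gap.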

SCA also helps reveal “dead” code that cannot be executed regardless of the inputs provided by test cases. Such code may exist for many reasons, such as errors in the algorithm or the remnants of a change of approach by the coder, but none of these reasons justifies it from the perspective of DO-178C, and it must be addressed. Inadvertent execution of untested code poses significant risk.
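The hypothetical decoder below contains a branch guarded by a condition that can never be true, a typical remnant of an abandoned design. Because its 8-bit input has only 256 possible values, the sketch can sweep the entire input domain and confirm that the branch's counter stays at zero. Such an exhaustive sweep is only practical for tiny input spaces and is shown purely for illustration; in general, a coverage gap prompts analysis of why the code was not reached.

```cpp
#include <cstdint>

// Execution counters for the three branches of decodeMode (hypothetical instrumentation).
unsigned g_branchHits[3] = {0U, 0U, 0U};

// Hypothetical decoder with a leftover branch whose condition can never be
// true: no value is both greater than 200 and less than 50.
int decodeMode(std::uint8_t raw)
{
    int mode = 0;
    if (raw < 50U) {
        ++g_branchHits[0];
        mode = 1;
    } else if ((raw > 200U) && (raw < 50U)) {   // infeasible: dead code
        ++g_branchHits[1];
        mode = 2;
    } else {
        ++g_branchHits[2];
        mode = 3;
    }
    return mode;
}

// Sweep every possible 8-bit input, then report whether `branch` never ran.
bool branchIsDead(unsigned branch)
{
    if (branch >= 3U) {
        return false;                            // no such branch
    }
    for (unsigned v = 0U; v <= 255U; ++v) {
        (void)decodeMode(static_cast<std::uint8_t>(v));
    }
    return g_branchHits[branch] == 0U;
}
```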

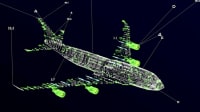

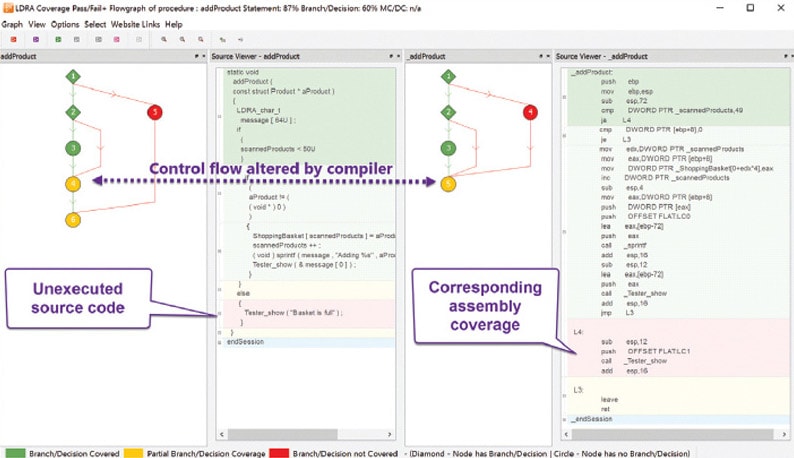

The structure and control flow of object code generated by compilers is often not directly traceable to source code statements. For the most demanding DAL A projects, DO-178C requires additional verification to ensure the correctness of the object code elements which do not directly map to the source code, and it is helpful to have tools that can highlight such elements. Because there is a direct one-to-one relationship between object code and assembly code, one way for a tool to represent this is to display a graphical representation of the source code alongside the equivalent representation of the assembly code (Figure 2).

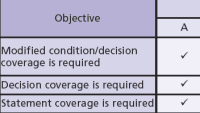

On the surface, coverage analysis might seem as simple as assessing whether all the statements have been exercised, but of course it is not quite so simple. Merely checking to see if every line in the code has been executed does not reveal the structure of execution and does not clearly relate back to the requirements. We must turn to branch/decision testing and its cousin, Modified Condition/Decision Coverage (MC/DC). Adequate MC/DC coverage requires the invocation of sufficient combinations of conditions applicable to a decision to demonstrate that each of those conditions can independently affect the outcome.
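The independence requirement of MC/DC can be checked mechanically. The sketch below uses the example decision `(a && b) || c` (our own choice) and the unique-cause form of MC/DC: a condition is shown to matter if the test set contains two vectors that differ only in that condition and yield different outcomes. Other accepted forms, such as masking MC/DC, relax the "differ only in" requirement and are not modeled here.

```cpp
#include <array>
#include <cstddef>
#include <vector>

constexpr std::size_t kConds = 3U;
using TestVector = std::array<bool, kConds>;   // values of conditions a, b, c

// Example decision (hypothetical): (a && b) || c
bool decision(const TestVector& v) { return (v[0] && v[1]) || v[2]; }

// Unique-cause MC/DC: condition i independently affects the outcome if two
// test vectors differ only in condition i and give different outcomes.
bool showsIndependence(const std::vector<TestVector>& tests, std::size_t i)
{
    for (const TestVector& x : tests) {
        for (const TestVector& y : tests) {
            bool onlyIDiffers = (x[i] != y[i]);
            for (std::size_t k = 0U; k < kConds; ++k) {
                if ((k != i) && (x[k] != y[k])) {
                    onlyIDiffers = false;
                }
            }
            if (onlyIDiffers && (decision(x) != decision(y))) {
                return true;
            }
        }
    }
    return false;
}

// MC/DC (unique-cause) is achieved when every condition shows independence.
bool achievesMcdc(const std::vector<TestVector>& tests)
{
    for (std::size_t i = 0U; i < kConds; ++i) {
        if (!showsIndependence(tests, i)) {
            return false;
        }
    }
    return true;
}
```

For this decision, the four vectors {T,T,F}, {F,T,F}, {T,F,F} and {F,T,T} achieve MC/DC, whereas {T,T,F} and {F,F,F} give full decision coverage (one true and one false outcome) without demonstrating the independence of any condition.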

Control Coupling and Data Coupling

Some parts of DO-178C are more demanding than the equivalent sections in earlier versions. For data coupling and control coupling, for example, it now requires testing to confirm that the coupling is exercised dynamically, rather than just providing static evidence of it. This calls for dynamic analysis of the code, with quantifiable measurement of the results to confirm that they meet requirements.

DO-178C defines control coupling as “the manner or degree by which one software component influences the execution of another software component.” In other words, a given routine can be called by more than one other component under different conditions, so it is not enough to know that the routine has run; we must also know where the call came from and why. Thus, not only must all potential components be called, but all possible calls to each component must be examined.
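One way to gather dynamic evidence of control coupling is to record each (caller, callee) pair as tests execute and compare the result against the calls identified by static analysis of the call graph. The sketch below does this with a hypothetical `recordCall` probe and two stand-in components that both call `computeAirspeed`. Any statically known call that was never observed is reported as unexercised.

```cpp
#include <set>
#include <string>
#include <utility>

using CallPair = std::pair<std::string, std::string>;   // (caller, callee)

std::set<CallPair> g_observed;                           // filled in while tests run

// Hypothetical probe placed at each call site.
void recordCall(const std::string& caller, const std::string& callee)
{
    g_observed.emplace(caller, callee);
}

// Stand-in components: two different callers of the same routine.
int computeAirspeed() { return 250; }

int displayTask()
{
    recordCall("displayTask", "computeAirspeed");
    return computeAirspeed();
}

int loggingTask()
{
    recordCall("loggingTask", "computeAirspeed");
    return computeAirspeed();
}

// Calls known from static call-graph analysis that no test has exercised yet.
std::set<CallPair> unexercisedCalls(const std::set<CallPair>& staticCalls)
{
    std::set<CallPair> missed;
    for (const CallPair& call : staticCalls) {
        if (g_observed.count(call) == 0U) {
            missed.insert(call);
        }
    }
    return missed;
}
```

Running only `displayTask` leaves the call from `loggingTask` unexercised even though `computeAirspeed` itself has run, which is the distinction drawn above.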

DO-178C also requires the examination of each software component that is dependent on data not exclusively under the control of that component. For example, suppose there is a routine to display airspeed, and that the data needed for that display is produced by a separate routine that calculates the airspeed. That data may be available not only to the “display” routine, but to other routines in the system as well. In order to ensure that the correct airspeed is displayed, the routine to calculate it must execute before the display routine. One way to accomplish this is for the “display” routine to invoke the “calculate” routine before executing the display function. In this situation, the “display” and “calculate” routines together represent an example of a set/use pair, the implementation of which can be verified by data coupling analysis.
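The set/use relationship in the airspeed example can be monitored during test. In the hypothetical sketch below, the shared datum carries a flag recording whether the producer has written it, and each access is logged. `displayAirspeed` invokes `calculateAirspeed` first, as in the text, while `telemetryReadsAirspeed` is an invented second consumer that does not, so a read it makes before any set is flagged. Component names and the logging scheme are assumptions.

```cpp
#include <string>
#include <vector>

// Shared datum produced by one component and consumed by others.
struct SharedAirspeed {
    int  knots = 0;
    bool isSet = false;              // becomes true once the producer has written it
};

// One logged access: who touched the datum, whether it was a set or a use,
// and (for a use) whether a set had already happened.
struct Access {
    std::string component;
    bool isSet;
    bool afterSet;
};

std::vector<Access> g_log;

void calculateAirspeed(SharedAirspeed& a, int sensorKnots)
{
    a.knots = sensorKnots;           // stand-in for the real computation
    a.isSet = true;
    g_log.push_back({"calculateAirspeed", true, true});
}

int displayAirspeed(SharedAirspeed& a, int sensorKnots)
{
    calculateAirspeed(a, sensorKnots);                  // producer runs first, as in the text
    g_log.push_back({"displayAirspeed", false, a.isSet});
    return a.knots;
}

int telemetryReadsAirspeed(const SharedAirspeed& a)    // consumer that does not call the producer
{
    g_log.push_back({"telemetryReadsAirspeed", false, a.isSet});
    return a.knots;
}

// Components that used the datum before any set: broken set/use pairs.
std::vector<std::string> usesBeforeSet()
{
    std::vector<std::string> offenders;
    for (const Access& e : g_log) {
        if (!e.isSet && !e.afterSet) {
            offenders.push_back(e.component);
        }
    }
    return offenders;
}
```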

Keeping Up with Technology

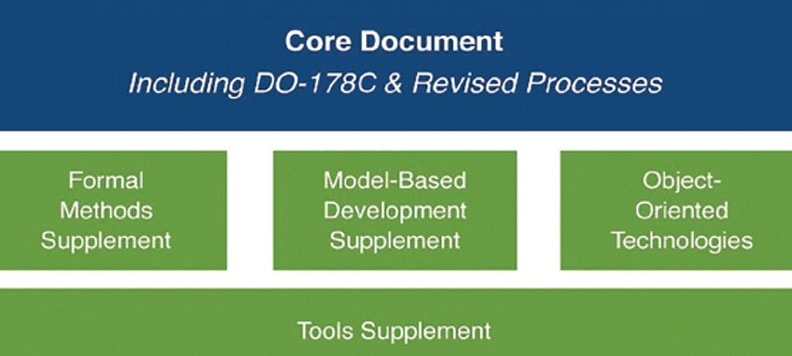

In addition to a formal methods supplement, DO-178C has added two more technology-specific “legs”: model-based development and object-oriented technologies, along with a tools supplement to support them (Figure 3).

Model-based development (MBD), as supported by environments such as Sparx, works at higher levels of abstraction than source code, providing one means of coping with the enormous growth of software in airborne systems and equipment. Research indicates that early-stage prototyping of software requirements using an executable model effectively roots out defects at the requirements and design levels, a substantial saving. With MBD, it is also possible to generate source code automatically from the executable model. The key to verification remains traceability from high-level requirements to model-based requirements, but because DO-178C simply considers the models to be the requirements, that traceability becomes self-evident.

When using MBD, however, the challenges posed by auto-generated code, and by code inserted manually alongside it, are more significant. MBD tools might also insert code that can be rationalized only in the context of the model’s implementation, not the functional requirements. Nevertheless, whether code is written from textual requirements or design models, or auto-generated by a tool, the tried and tested practice of adhering to coding standards remains applicable, and the executable object code is still verified primarily by testing.

The Object-Oriented Technology (OOT) “leg” of DO-178C focuses on languages such as C++, Java, and Ada 2005. In object orientation, for example, “subtyping” is the ability to create new types (subtypes) derived from existing ones. DO-178C addresses subtype verification for the safe use of OOT, which DO-178B did not. It requires verification of local type consistency as well as verification of the proper use of dynamic memory management.

Design Verification Testing (DVT) performed at the class level demonstrates that all the member functions conform to the class contract with respect to the preconditions, postconditions, and invariants of the class state. Consequently, a class and its methods must pass all the tests of any superclass for which it can be substituted. As an alternative to DVT, developers can use formal methods in conformance with the formal methods supplement.
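The principle that a subclass must pass its superclass's tests can be sketched as a single contract-based test suite run against every substitutable type. The hypothetical `ThrottleLaw` hierarchy below states its contract in comments (precondition, postcondition, invariant), and `passesBaseContract` checks it. `LimitedThrottleLaw` narrows its output and still conforms, while `BoostThrottleLaw` violates the postcondition and is therefore not safely substitutable. All class names and limits are our own.

```cpp
// Hypothetical base class. Contract:
//   precondition : 0 <= demand <= 100 (percent)
//   postcondition: 0 <= command(demand) <= 100
//   invariant    : lastCommand() equals the most recent command() result
class ThrottleLaw {
public:
    virtual ~ThrottleLaw() = default;
    virtual int command(int demand) { last_ = demand; return last_; }
    int lastCommand() const { return last_; }
protected:
    int last_ = 0;
};

// Limits the output to 90 percent: a stronger postcondition, so still substitutable.
class LimitedThrottleLaw : public ThrottleLaw {
public:
    int command(int demand) override { last_ = (demand > 90) ? 90 : demand; return last_; }
};

// Adds a 20 percent boost: breaks the base-class postcondition.
class BoostThrottleLaw : public ThrottleLaw {
public:
    int command(int demand) override { last_ = demand + 20; return last_; }
};

// Superclass test suite, written once against the base-class contract and
// run unchanged against every type that may be substituted for ThrottleLaw.
bool passesBaseContract(ThrottleLaw& law)
{
    for (int demand = 0; demand <= 100; ++demand) {       // inputs satisfy the precondition
        const int out = law.command(demand);
        if ((out < 0) || (out > 100)) {                    // postcondition
            return false;
        }
        if (law.lastCommand() != out) {                    // invariant
            return false;
        }
    }
    return true;
}
```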

In some sense, complexity may be viewed as the enemy of safety, but the growing complexity and software dependence of modern avionics systems cannot be denied. The best guarantee of maintaining the industry’s exemplary safety record in airborne systems and equipment must be to deploy tools that can manage complex details in ways that developers can readily understand and control. DO-178C provides developers of avionics systems with a standard that accommodates new technologies and embraces the use of appropriate tools, so that compliance can be achieved cost-effectively.

This article was written by Shan Bhattacharya, Director of Business Development, and Mark Pitchford, Technical Specialist, LDRA (Wirral, Merseyside, UK).
