Data Fusion of Geographically Dispersed Information for Test and Evaluation

A scalable data grid enables data to be available in a timely manner for test and evaluation applications.

Test and evaluation relies on computer collection and manipulation of data from a myriad of sensors and sources. More computing power allows for increases in the breadth and depth of the information collected. The same computing power must assist in identifying, ordering, storing, and providing easy access to that data. Fast networking allows large clusters of high-performance computing resources to be brought to bear on test and evaluation. This increase in fidelity has correspondingly increased the volumes of data that tests are capable of generating.

A distributed logging system was developed to capture publish-and-subscribe messages from the high-level architecture (HLA) simulation federation. In addition to the scalable data grid approach, it was found that the Hadoop distributed file system provided a scalable, but conceptually simple, distributed computation paradigm that is based on map–reduce operations implemented over a highly parallel, distributed file system. Hadoop is an open-source system that provides a reliable, fault-tolerant, distributed file system and application programming interfaces. These enable its map-reduce framework for the parallel evaluation of large volumes of test data.

The simplicity of the Hadoop programming model allows for straightforward implementations of many evaluation functions. The popular Java applications have the most direct access, but Hadoop also has streaming capabilities that allow for implementations in any preferred language.

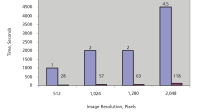

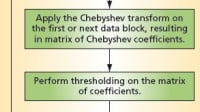

Map–reduce implementations of k-means and expectation maximization data mining algorithms were developed to take advantage of the Hadoop framework. This file system dramatically reduced the disk scan time needed by the iterative data mining algorithms. It was found that these algorithms could be effectively implemented across multiple Linux clusters connected over reserved high-speed networks. The data transmission reductions observed should be applicable in most test and evaluation situations, even those that use lower bandwidth communications.

For this analysis, Hadoop jobs were created to experiment with the data mining performance characteristics in an environment that was based on connections to sites across widely dispersed geographic regions. All of these machines had large disk storage configurations, were located on network circuits capable of 10-Gb/s transmission, and remained dedicated to this research. One machine served as a control.

The logged data collected from a test is often at too low a level to be of direct use to the test and evaluation analysts. Information needs to be abstracted from the logged data by collation, aggregation, and summarization. To perform this data transformation, an analysis data model had to be defined that is suitable for analysts. A multidimensional data model was used as a way of representing the information. Next, a logging data model representing the collected data had to be defined.

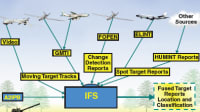

To bridge the gap and connect two data models, an abstraction relationship was defined that mapped the logging model to the analysis model. This was a part of the scalable data grid toolkit. A sensor–target scoreboard was developed that provided a visual way of quickly comparing the relative effectiveness of individual sensor platforms and sensor modes against different types of targets. The sensor–target scoreboard was a specific instance of the more general multidimensional analysis. Such a scoreboard is an example of two dimensions of a multi-dimensional cube. Its two dimensions can be the sensor dimension and target. One can imagine extending the scoreboard to take into account weather conditions and time of day. This would add two more dimensions to form a four-dimensional cube.

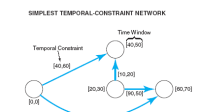

The analysis data model consists of two key concepts: dimensions of interest and measures of performance. Dimensions are used to define the coordinates of multidimensional data cubes. The cells within this data cube are the measure values. Dimensions categorize and partition the data along lines of interest to the analysts. Defining multiple crosscutting dimensions aids in breaking the data into smaller, orthogonal subsets. Dimensions have associated measurement units, or coordinates. Choosing the granularity of these units aids in determining the size of the subsets. For example, depending on the dynamic nature of the phenomenon that the analysts are trying to study, they may choose to define the time dimension units in terms of minutes, days, weeks, or years.

Hierarchical dimensions define how a coordinate relates to other coordinates as its subset. It serves to group together similar units from the analyst’s perspective. By defining hierarchical dimensions, analysts inform the system about how to aggregate and summarize information. For example, the analysts may want to subdivide the sensor platform category into the sensor modes: moving target indicators, synthetic aperture radar, images, video, and acoustic.

In addition to the data mining jobs developed, the ability of Hadoop to load and store data was tested. A simple data load of six 1.2-GB files was performed using the default settings, each block of data replicated on three nodes. The quickest data loads were with the local nodes configuration. The actual processing times were not that much different for each configuration. The major difference was in clock time, indicating that the distributed systems spent significant time in suspended wait states while the network subsystems performed their functions.

This work was done by Ke-Thia Yao, Craig E. Ward, and Dan M. Davis of the Information Sciences Institute at the University of Southern California for the Air Force Research Laboratory. AFRL-0197

This Brief includes a Technical Support Package (TSP).

Data Fusion of Geographically Dispersed Information for Test and Evaluation

(reference AFRL-0197) is currently available for download from the TSP library.

Don't have an account?

Overview

The document titled "Data Fusion of Geographically Dispersed Information: Experience With the Scalable Data Grid" discusses the challenges and solutions related to managing and analyzing large volumes of data in test and evaluation (T&E) scenarios, particularly in military contexts. The authors, Ke-Thia Yao, Craig E. Ward, and Dan M. Davis from the Information Sciences Institute at USC, highlight the increasing complexity of data collection and the necessity for efficient data processing systems.

As T&E professionals face the distribution of data over significant distances, traditional methods of data analysis become inadequate. The document emphasizes the need for a scalable and efficient system to handle the vast amounts of data generated by modern sensors and simulations. The authors describe their development of a distributed logging system that captures publish-and-subscribe messages from high-level architecture (HLA) simulation federations, which logged over a terabyte of data during a two-week exercise.

A key focus of the document is the use of Apache Hadoop, which provides a scalable and conceptually simple distributed computation paradigm based on map-reduce operations. This framework allows for the effective implementation of data mining algorithms, such as k-means and expectation-maximization, across multiple Linux clusters. The authors note that Hadoop's distributed file system (HDFS) significantly reduces disk scan times, enhancing the performance of iterative data mining processes.

The document also discusses the architecture of a scalable data grid that organizes data for rapid retrieval, ensuring that it is available in a timely manner and securely stored. This system is designed to facilitate data access and manipulation, making it suitable for various T&E situations, even those with lower bandwidth communications.

In conclusion, the authors advocate for the adoption of scalable data grid approaches in T&E environments, emphasizing their potential to improve data management and analysis. By leveraging advanced computing resources and efficient data processing frameworks like Hadoop, T&E professionals can better handle the complexities of modern data environments, ultimately leading to more informed decision-making and enhanced operational effectiveness.

Top Stories

INSIDERMaterials

![]() How Airbus is Using w-DED to 3D Print Larger Titanium Airplane Parts

How Airbus is Using w-DED to 3D Print Larger Titanium Airplane Parts

NewsSensors/Data Acquisition

![]() Microvision Aquires Luminar, Plans Relationship Restoration, Multi-industry Push

Microvision Aquires Luminar, Plans Relationship Restoration, Multi-industry Push

INSIDERDesign

![]() A Next Generation Helmet System for Navy Pilots

A Next Generation Helmet System for Navy Pilots

NewsManned Systems

![]() Accelerating Down the Road to Autonomy

Accelerating Down the Road to Autonomy

INSIDERWeapons Systems

![]() New Raytheon and Lockheed Martin Agreements Expand Missile Defense Production

New Raytheon and Lockheed Martin Agreements Expand Missile Defense Production

ArticlesDesign

![]() CES 2026: Bosch is Ready to Bring AI to Your (Likely ICE-powered) Vehicle

CES 2026: Bosch is Ready to Bring AI to Your (Likely ICE-powered) Vehicle

Webcasts

Transportation

![]() Advantages of Smart Power Distribution Unit Design for Automotive...

Advantages of Smart Power Distribution Unit Design for Automotive...

Automotive

![]() Quiet, Please: NVH Improvement Opportunities in the Early Design...

Quiet, Please: NVH Improvement Opportunities in the Early Design...

Electronics & Computers

![]() Cooling a New Generation of Aerospace and Defense Embedded...

Cooling a New Generation of Aerospace and Defense Embedded...

Power

![]() Battery Abuse Testing: Pushing to Failure

Battery Abuse Testing: Pushing to Failure

AR/AI

![]() A FREE Two-Day Event Dedicated to Connected Mobility

A FREE Two-Day Event Dedicated to Connected Mobility