Fusion of Image- and Inertial-Sensor Data for Navigation

Real-time image-aided inertial navigation is now feasible.

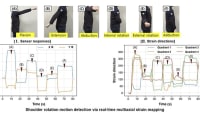

A method of real-time fusion of readout data from electronic inertial and image sensors for passive navigation has been developed. Here, "passive navigation" means navigation without the help of radar signals, lidar signals, Global Positioning System (GPS) signals, or any other signals generated by on-board or external equipment. The concept of fusing image- and inertial-sensor data for passive navigation is inspired by biological examples, including bees, migratory birds, and humans, all of which use inertial and imaging sensory modalities to pick out landmarks and navigate from landmark to landmark with relative ease. The present method is suitable for use in a variety of environments, including urban canyons and interiors of buildings, where GPS signals and other navigation signals are often unavailable or corrupted.

When used separately, imaging and inertial sensors have drawbacks that can result in poor navigation performance. A navigation system that uses inertial sensors alone relies upon dead reckoning, which is susceptible to drift over time. A navigation system that uses image sensors alone can have difficulty identifying and matching good landmarks. The two types of sensor data are fused because they complement each other: fusion makes it possible to partly overcome the drawbacks of each type of sensor and to obtain navigation results better than can be obtained from either type used alone.

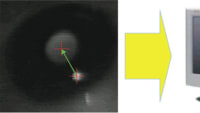

The present method is a successor to a prior method based on a rigorous theory of fusion of image- and inertial-sensor data for precise navigation. The theory involves utilization of inertial-sensor data in dead-reckoning calculations to predict locations, in subsequent images, of features identified in previous images to within a given level of statistical uncertainty. Such prediction reduces the computational burden by limiting, to a size reflecting the statistical uncertainty, the feature space that must be searched in order to match features in successive images. When this prior method was implemented in a navigation system operating in an indoor environment, the performance of the system was comparable to the performances of GPS-aided systems.
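The idea of limiting the search to a region that reflects the statistical uncertainty can be sketched as a simple gating test. The following is a minimal illustration, not the authors' implementation: it assumes the dead-reckoning prediction has already been projected into the image as a pixel location with a 2x2 pixel covariance, and it keeps only the candidate features whose squared Mahalanobis distance from the prediction falls within a chi-square gate. The function names, the 99-percent gate value, and the sample numbers are all assumptions made for illustration.

```python
# Hypothetical sketch: gate candidate features around a predicted pixel location.

# 99% gate for a chi-square variable with 2 degrees of freedom (table value).
CHI2_99_2DOF = 9.21


def mahalanobis_sq(residual, cov):
    """Squared Mahalanobis distance of a 2-D residual under a 2x2 covariance."""
    (a, b), (c, d) = cov
    det = a * d - b * c
    if det <= 0.0:
        raise ValueError("covariance must be positive definite")
    dx, dy = residual
    # Closed-form inverse of the 2x2 covariance applied to the residual.
    return (d * dx * dx - (b + c) * dx * dy + a * dy * dy) / det


def gate_candidates(predicted_px, pixel_cov, candidates, gate=CHI2_99_2DOF):
    """Keep only the candidates that lie inside the uncertainty ellipse."""
    kept = []
    for u, v in candidates:
        residual = (u - predicted_px[0], v - predicted_px[1])
        if mahalanobis_sq(residual, pixel_cov) <= gate:
            kept.append((u, v))
    return kept


# Assumed example: 5-pixel horizontal and 10-pixel vertical standard deviation.
predicted = (320.0, 240.0)
covariance = ((25.0, 0.0), (0.0, 100.0))
detections = [(322, 250), (340, 240), (320, 275), (310, 262)]
print(gate_candidates(predicted, covariance, detections))
```

Only the candidates that survive the gate need to be compared by descriptor, which is where the reduction in computational burden comes from.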

In the present method, the fusion of data is effected by an extended Kalman filter. To improve feature-tracking performance, a previously developed robust feature-transformation algorithm denoted the scale-invariant feature transform (SIFT) is used. The SIFT features are well suited to navigation applications because they are invariant to scale, rotation, and illumination. Unfortunately, the more complex the features, the more computer processing time is needed to extract them. Heretofore, this cost has limited the effectiveness of SIFT-based and other robust feature-extraction algorithms for real-time applications using traditional microprocessor architectures. Despite recent advances in computer technology, the amount of information that a computer can process is still constrained by the power and speed of its central processing unit (CPU).
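The predict-and-correct cycle of an extended Kalman filter can be shown with a deliberately small example. This is a toy sketch under stated assumptions, not the filter used in the work described here: the state is a single position along a corridor, the prediction step is dead reckoning from an assumed velocity, and the measurement is the bearing to one landmark at a known location. All names, noise values, and the landmark geometry are hypothetical.

```python
import math

# Hypothetical one-state extended Kalman filter (position along a corridor).


def predict(x, p, velocity, dt, q):
    """Dead-reckoning step: advance the position and grow its variance by q."""
    return x + velocity * dt, p + q


def bearing(x, landmark_along, landmark_offset):
    """Nonlinear measurement model: bearing from position x to the landmark."""
    return math.atan2(landmark_offset, landmark_along - x)


def update(x, p, z, landmark_along, landmark_offset, r):
    """Correct the predicted position with one bearing measurement z."""
    d = landmark_along - x
    # Jacobian of the bearing with respect to x, evaluated at the prediction.
    h_jac = landmark_offset / (d * d + landmark_offset ** 2)
    # No angle wrapping is applied; bearings stay small in this example.
    innovation = z - bearing(x, landmark_along, landmark_offset)
    s = h_jac * p * h_jac + r          # innovation variance
    k = p * h_jac / s                  # Kalman gain
    return x + k * innovation, (1.0 - k * h_jac) * p


# Assumed scenario: the true position is 2.0 m, but dead reckoning predicts 1.5 m.
x_pred, p_pred = predict(1.0, 0.2, velocity=1.0, dt=0.5, q=0.05)
z = bearing(2.0, landmark_along=5.0, landmark_offset=1.0)  # noise-free bearing
x_new, p_new = update(x_pred, p_pred, z, 5.0, 1.0, r=1e-4)
print(x_pred, p_pred, x_new, p_new)
```

In this scenario the image-derived measurement pulls the drifting dead-reckoning estimate back toward the true position and shrinks its variance, which is the complementary behavior that motivates the fusion.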

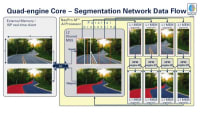

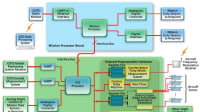

The present method is based partly on a theory that exploits the highly parallel nature of general programmable graphics processing units (GPGPUs) in such a manner as to support deep integration of optical and inertial sensors for real-time navigation. The method leverages the existing OpenVIDIA core GPGPU software library and commercially available computer hardware to effect fusion of image- and inertial-sensor data. [OpenVIDIA is a programming framework, originally for computer vision applications, embodied in a software library and an application programming interface, that utilizes multiple graphics cards (GPUs) present in many modern computers to implement parallel computing and thereby obtain computational speed much greater than that of a CPU alone.] In this method, the OpenVIDIA library is extended to include the statistical feature-projection and feature-matching techniques of the predecessor data-fusion method.
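Feature matching suits parallel hardware because each feature can be matched independently of every other feature. The sketch below illustrates only that structure; it is neither OpenVIDIA code nor GPU code. It uses a Python thread pool as a stand-in for parallel execution units, and because of the interpreter's global lock it should not be expected to run faster than a plain loop. The nearest-neighbor ratio test used to reject ambiguous matches is a common companion to SIFT descriptors and is an assumption here, as are all function names and sample descriptors.

```python
from concurrent.futures import ThreadPoolExecutor

# Hypothetical sketch of per-feature independent descriptor matching.


def squared_distance(a, b):
    """Squared Euclidean distance between two descriptors."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def best_match(query, references, ratio=0.8):
    """Index of the nearest reference descriptor, or None if ambiguous."""
    if not references:
        return None
    ranked = sorted(range(len(references)),
                    key=lambda i: squared_distance(query, references[i]))
    best = ranked[0]
    if len(ranked) > 1:
        d1 = squared_distance(query, references[best])
        d2 = squared_distance(query, references[ranked[1]])
        # Ratio test on squared distances: reject if the runner-up is too close.
        if d1 > (ratio ** 2) * d2:
            return None
    return best


def match_all(queries, references, workers=4):
    """Match every query independently; each task shares no mutable state."""
    with ThreadPoolExecutor(max_workers=workers) as pool:
        return list(pool.map(lambda q: best_match(q, references), queries))


# Assumed toy descriptors (real SIFT descriptors have 128 elements).
reference_set = [(0.0, 0.0, 1.0), (1.0, 0.0, 0.0), (0.0, 1.0, 0.0)]
query_set = [(0.9, 0.1, 0.0), (0.0, 0.1, 0.95), (0.5, 0.5, 0.0)]
print(match_all(query_set, reference_set))
```

Because no task depends on the result of another, the same mapping can in principle be distributed across the many execution units of a GPU.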

In an experimental system based on this method, data from inertial and image sensors were integrated on a commercially available laptop computer containing a programmable GPU. When the system was applied to experimental data sets, feature-processing speeds were found to be as much as 30 times those attainable by use of an equivalent CPU-based algorithm (see figure). Frame rates greater than 10 Hz, suitable for navigation, were demonstrated. The navigation performance of this system was shown to be identical to that of an otherwise equivalent system, based on the predecessor method, that required lengthy postprocessing.
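The relationship between the reported figures can be made concrete with a short calculation. A frame rate of 10 Hz leaves a budget of 100 ms per frame, and a speed-up factor of 30 means the equivalent CPU-based processing would take 30 times as long. The 80-ms GPU processing time used below is purely a hypothetical value chosen for illustration; it is not a measurement from the experiments.

```python
# Hypothetical arithmetic sketch relating frame rate, time budget, and speed-up.


def frame_budget_ms(rate_hz):
    """Time available per frame, in milliseconds, at a given frame rate."""
    return 1000.0 / rate_hz


def cpu_time_ms(gpu_time_ms, speedup):
    """Time the slower CPU path would need, given the GPU time and speed-up."""
    return gpu_time_ms * speedup


budget = frame_budget_ms(10.0)           # 100 ms per frame at 10 Hz
assumed_gpu_ms = 80.0                    # assumed value, not a measured result
cpu_ms = cpu_time_ms(assumed_gpu_ms, 30.0)
print(budget, cpu_ms, 1000.0 / cpu_ms)   # budget, CPU time, implied CPU frame rate
```

Under that assumption the CPU path would need 2.4 s per frame, far outside the 100-ms budget, which illustrates why the speed-up matters for real-time operation.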

This work was done by J. Fletcher, M. Veth, and J. Raquet of the Air Force Institute of Technology for the Air Force Research Laboratory.

This Brief includes a Technical Support Package (TSP).

Fusion of Image- and Inertial-Sensor Data for Navigation (reference AFRL-0084) is currently available for download from the TSP library.


Overview

The document presents research on the real-time fusion of image and inertial sensors for navigation, conducted by the Air Force Institute of Technology. The study addresses the limitations of traditional navigation systems, particularly in indoor environments where GPS signals may be weak or unavailable. By integrating optical and inertial sensors, the research aims to enhance navigation accuracy and reliability.

The authors developed a statistical transformation theory for feature space based on inertial sensor measurements, which constrains the feature correspondence search to a defined level of statistical uncertainty. This method improves feature tracking performance, making it suitable for precision navigation applications. The study employs the Scale-Invariant Feature Transform (SIFT) algorithm, known for its robustness against variations in scale, rotation, and illumination, which is critical for effective navigation.

A significant challenge in real-time applications is the computational demand of robust feature extraction algorithms. The document highlights the limitations of traditional microprocessor architectures in processing large amounts of data quickly. To overcome this, the research leverages the capabilities of General Programmable Graphics Processing Units (GPGPU), which allow for highly parallel processing. This approach enables real-time image-aided navigation, significantly improving processing speeds.

Experimental results demonstrate that the new processing method can increase feature-processing speed by a factor of as much as 30 compared with CPU-based algorithms. The system is capable of maintaining frame rates greater than 10 Hz, which is adequate for real-time navigation tasks. The performance of the new system is shown to be comparable to that of previous methods that relied on lengthy post-processing, thus enhancing the overall efficiency of navigation systems.

The document also discusses the categorization of real-time systems into hard and soft real-time systems, emphasizing that the proposed navigation system is best suited for soft real-time applications due to its reliance on concurrent data access from multiple sensors. The research concludes that the integration of optical and inertial sensors, supported by advanced processing techniques, represents a promising direction for improving navigation systems in various environments.

Overall, this work contributes to the field of navigation technology by providing a framework for real-time sensor fusion, demonstrating the potential of GPGPU technology in enhancing the performance of navigation systems.
