360-Degree Visual Detection and Target Tracking on an Autonomous Surface Vehicle

Multiple architectures provide situational awareness for safe operation of ASVs.

Operating autonomous surface vehicles (ASVs) poses a number of challenges: ensuring vehicle survivability during long-duration missions in hazardous and possibly hostile environments; coping with loss of communication or localization caused by environmental or tactical conditions; reacting quickly and intelligently to highly dynamic situations; re-planning to recover from faults while continuing operations; and extracting the maximum amount of information from onboard and offboard sensors for situational awareness. In addition, missions must often be conducted in areas where other, possibly adversarial, vessels operate, including missions to protect high-value fixed assets such as oil platforms, anchored ships, and port facilities.

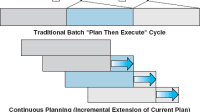

CARACaS is composed of a dynamic planning engine, a behavior engine, and a perception engine. The SAVAnT system is part of the perception engine, which also includes a stereo-vision system for navigation. The dynamic planning engine is built on the CASPER (Continuous Activity Scheduling Planning Execution and Replanning) continuous planner. Given a set of mission goals and the vehicle’s current state, CASPER generates a plan of activities that satisfies as many goals as possible while obeying the relevant resource constraints and operation rules. CARACaS uses finite state machines to compose the behavior network for any given mission scenario. These finite state machines allow CARACaS to produce formally correct behavior kernels that guarantee predictable performance.
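The brief does not describe how CARACaS encodes its behavior networks. As a minimal sketch, assuming a simple table-driven design, the Python below shows why a finite state machine makes behavior predictable: every (state, event) pair has exactly one outcome, and properties such as reachability can be checked before a mission starts. The states PATROL, INSPECT, and RETURN, the events, and the names BehaviorFSM and reachable_states are hypothetical illustrations, not CARACaS identifiers.

```python
from enum import Enum, auto
from typing import Set


class State(Enum):  # hypothetical behavior states
    PATROL = auto()
    INSPECT = auto()
    RETURN = auto()


class Event(Enum):  # hypothetical triggering events
    CONTACT_DETECTED = auto()
    CONTACT_CLEARED = auto()
    LOW_FUEL = auto()


# Explicit transition table: (current state, event) -> next state.
# Any pair missing from the table leaves the state unchanged, so every
# input has exactly one defined outcome.
TRANSITIONS = {
    (State.PATROL, Event.CONTACT_DETECTED): State.INSPECT,
    (State.PATROL, Event.LOW_FUEL): State.RETURN,
    (State.INSPECT, Event.CONTACT_CLEARED): State.PATROL,
    (State.INSPECT, Event.LOW_FUEL): State.RETURN,
}


class BehaviorFSM:
    def __init__(self, initial: State = State.PATROL) -> None:
        self.state = initial

    def step(self, event: Event) -> State:
        """Apply one event and return the new state."""
        self.state = TRANSITIONS.get((self.state, event), self.state)
        return self.state


def reachable_states(start: State) -> Set[State]:
    """Enumerate every state reachable from start (a simple static check)."""
    seen, frontier = {start}, [start]
    while frontier:
        current = frontier.pop()
        for (src, _event), dst in TRANSITIONS.items():
            if src == current and dst not in seen:
                seen.add(dst)
                frontier.append(dst)
    return seen
```

Because the transition table is plain data, a verifier can enumerate it exhaustively. Here, for instance, RETURN has no outgoing transitions, so once the vehicle heads home no later event can divert it.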

For behavior coordination, CARACaS uses a method based on multiobjective decision theory (MODT) that combines the recommendations of multiple behaviors into a set of control actions representing their consensus. Because the size of the solution space grows exponentially with the number of actions, CARACaS couples the MODT framework with the interval criterion weights method to systematically narrow the set of possible solutions, producing an output orders of magnitude faster than a brute-force search of the action space.
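The brief names the interval criterion weights method but does not give CARACaS's formulation. The Python sketch below illustrates the general idea under stated assumptions: each behavior scores every candidate action, each behavior's weight is known only to lie within an interval, and an action is discarded when some other action scores at least as well for every admissible weight vector and strictly better for some. Only the survivors are then ranked, here with hypothetical midpoint weights. The behaviors, candidate headings, utilities, and weight intervals are invented for illustration; the sketch enumerates an explicit candidate list, so it shows the pruning rule rather than the search-space reduction reported for CARACaS.

```python
from typing import Dict, List, Sequence, Tuple

Bounds = Sequence[Tuple[float, float]]  # (lower, upper) weight for each behavior


def diff_range(a: Sequence[float], b: Sequence[float], bounds: Bounds) -> Tuple[float, float]:
    """Min and max of sum_i w_i * (a_i - b_i) over the box lower_i <= w_i <= upper_i."""
    worst = best = 0.0
    for ai, bi, (lo, hi) in zip(a, b, bounds):
        d = ai - bi
        # A linear function over a box attains its extremes at interval endpoints.
        worst += (lo if d >= 0 else hi) * d
        best += (hi if d >= 0 else lo) * d
    return worst, best


def dominates(a: Sequence[float], b: Sequence[float], bounds: Bounds) -> bool:
    """True if action a is never worse than b for any admissible weights, and sometimes better."""
    worst, best = diff_range(a, b, bounds)
    return worst >= 0.0 and best > 0.0


def nondominated(utilities: Dict[str, Sequence[float]], bounds: Bounds) -> List[str]:
    """Discard every action that some other action dominates."""
    return [
        name
        for name, u in utilities.items()
        if not any(dominates(v, u, bounds) for other, v in utilities.items() if other != name)
    ]


def choose_action(utilities: Dict[str, Sequence[float]], bounds: Bounds) -> str:
    """Rank the surviving actions with midpoint weights (a hypothetical final rule)."""
    survivors = nondominated(utilities, bounds)
    mid = [(lo + hi) / 2.0 for lo, hi in bounds]
    return max(survivors, key=lambda n: sum(w * x for w, x in zip(mid, utilities[n])))


# Hypothetical example: utilities per action for (avoid_hazard, reach_waypoint).
UTILITIES = {
    "port": (0.9, 0.3),
    "straight": (0.2, 1.0),
    "starboard": (0.7, 0.3),
}
WEIGHT_BOUNDS = [(0.4, 0.7), (0.3, 0.6)]

if __name__ == "__main__":
    print(nondominated(UTILITIES, WEIGHT_BOUNDS))   # ['port', 'straight']
    print(choose_action(UTILITIES, WEIGHT_BOUNDS))  # port
```

Because a weighted sum is linear in the weights, its minimum and maximum over a box of intervals occur at the interval endpoints, which is why diff_range needs no optimizer. With the example data, the starboard turn is pruned because the port turn beats it for every admissible weighting, and the port turn wins the final ranking.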

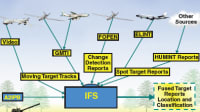

SAVAnT receives sensory input from an inertial navigation system (INS) and six cameras mounted in weather-resistant housings. The cameras are pointed 60 degrees apart to provide 360-degree coverage, with a 5-degree overlap between each adjacent pair. The core components of the system software are the image server, the contact server, and the OTCD server. The image server captures raw camera images and INS pose data and “stabilizes” the images so that the horizon is horizontal and centered in each image. The contact server detects objects of interest (contacts) in the stabilized images and calculates the absolute bearing of each contact. The OTCD (object-level tracking and change detection) server interprets a series of contact bearings as originating from either true targets or false positives, localizes target positions (latitude/longitude) by implicit triangulation, maintains a database of hypothesized true targets, and sends downstream alerts when a new target appears or a known target disappears.
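The bearing and localization steps can be sketched in Python under simplifying assumptions that the brief does not state: each stabilized image follows an ideal pinhole model, camera 0 looks straight over the bow and camera k is rotated 60k degrees clockwise from it, each camera's horizontal field of view is 65 degrees (the 60-degree spacing plus the 5-degree overlap), and the 1280-pixel image width is hypothetical. The triangulate function is a plain least-squares intersection of bearing lines in a local flat-earth frame, standing in for the OTCD server's implicit triangulation, whose details are not given; conversion between latitude/longitude and the local frame is omitted.

```python
import math
from typing import Sequence, Tuple

NUM_CAMERAS = 6
CAMERA_SPACING_DEG = 360.0 / NUM_CAMERAS  # 60 degrees between optical axes
HFOV_DEG = 65.0                           # assumed: 60-degree spacing plus 5-degree overlap
IMAGE_WIDTH_PX = 1280                     # hypothetical sensor width


def contact_bearing(u: float, camera: int, heading_deg: float) -> float:
    """Absolute bearing (degrees clockwise from north) of a contact at pixel column u.

    Assumes a stabilized image, an ideal pinhole camera, and camera 0 looking over the bow.
    """
    cx = (IMAGE_WIDTH_PX - 1) / 2.0
    focal_px = (IMAGE_WIDTH_PX / 2.0) / math.tan(math.radians(HFOV_DEG / 2.0))
    offset_deg = math.degrees(math.atan2(u - cx, focal_px))  # right of center = clockwise
    return (heading_deg + camera * CAMERA_SPACING_DEG + offset_deg) % 360.0


def triangulate(observations: Sequence[Tuple[float, float, float]]) -> Tuple[float, float]:
    """Least-squares intersection of bearing lines.

    Each observation is (east_m, north_m, bearing_deg) in a local flat-earth frame.
    Minimizes the sum of squared perpendicular distances from the estimate to every line.
    """
    a11 = a12 = a22 = b1 = b2 = 0.0
    for east, north, bearing_deg in observations:
        t = math.radians(bearing_deg)
        dx, dy = math.sin(t), math.cos(t)  # unit direction, clockwise from north
        # Projector onto the line's normal: I - d d^T
        p11, p12, p22 = 1.0 - dx * dx, -dx * dy, 1.0 - dy * dy
        a11 += p11
        a12 += p12
        a22 += p22
        b1 += p11 * east + p12 * north
        b2 += p12 * east + p22 * north
    det = a11 * a22 - a12 * a12
    if abs(det) < 1e-9:
        raise ValueError("bearings are (nearly) parallel; position is unobservable")
    # Solve the 2x2 normal equations by explicit inversion.
    return ((a22 * b1 - a12 * b2) / det, (a11 * b2 - a12 * b1) / det)
```

With two or more non-parallel bearings taken from different vehicle positions, the estimate settles on the target; for example, bearings of 45 and 315 degrees from observers at (0, 0) and (200, 0) meters intersect at (100, 100). Nearly parallel bearings give an ill-conditioned system, which is why the function raises an error rather than return a wildly wrong position.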

This work was done by Michael T. Wolf, Christopher Assad, Yoshiaki Kuwata, Andrew Howard, Hrand Aghazarian, David Zhu, Thomas Lu, Ashitey Trebi-Ollennu, and Terry Huntsberger of NASA’s Jet Propulsion Laboratory, California Institute of Technology, for the Office of Naval Research. ONR-0024

This Brief includes a Technical Support Package (TSP). “360-Degree Visual Detection and Target Tracking on an Autonomous Surface Vehicle” (reference ONR-0024) is currently available for download from the TSP library.

Overview

The document discusses advances in autonomous surface vehicles (ASVs), focusing on a system called CARACaS (Control Architecture for Robotic Agent Command and Sensing). Developed by NASA’s Jet Propulsion Laboratory, CARACaS integrates planning, behavior, and perception components to enhance the autonomy and operational capabilities of ASVs, particularly in maritime environments.

The paper outlines the architecture of the CARACaS system, which includes a dynamic planning engine, a behavior engine, and a perception engine. This architecture is designed to handle the uncertainties of dynamic sea operations, ensuring effective hazard detection, situational awareness, and compliance with maritime navigation rules. The system also facilitates cooperation among different vehicles, whether they are on the surface, underwater, or in the air.

A significant focus of the research is the SAVAnT (Surface Autonomous Visual Analysis and Tracking) system, which is responsible for contact detection, target tracking, and change detection. The document details on-water experiments conducted in Virginia, in which ASVs equipped with SAVAnT were tested in various scenarios. These tests evaluated the system's ability to detect and track targets, such as a white boat used as a reference target, under different conditions.

The results from these experiments demonstrated the effectiveness of the SAVAnT system in identifying and tracking targets over considerable distances, contributing to the development of an omnidirectional maritime perception system. This system is expected to improve the reliability and efficiency of ASV patrol operations, enabling them to operate autonomously for extended periods while ensuring safety and operational effectiveness.

The paper also acknowledges the support and funding received from the Office of Naval Research and Spatial Integrated Systems, Inc., highlighting the collaborative nature of the research. Overall, the document presents a comprehensive overview of the technological advancements in ASV autonomy, emphasizing the potential for enhanced maritime surveillance and operational capabilities through innovative detection and tracking systems. The findings contribute to the broader field of robotics and autonomous systems, showcasing the importance of integrating advanced technologies for real-world applications in maritime security and asset protection.
