Rewriting the Engineer’s Playbook: What OEMs Must Do to Spin the AI Flywheel

The automotive industry’s future hinges on a new AI-native engineering workflow that accelerates iteration, strengthens system thinking, and preserves human judgment.

Automotive development cycles are compressing at a pace the industry has never seen. The shift to all-electric fleets of software-defined vehicles is moving faster than traditional processes can absorb. In parallel, regulatory pressure and customer expectations keep rising, demanding greater performance, higher safety, better energy efficiency, and sharper competitiveness.

In this environment, an OEM’s R&D competitiveness depends on three factors:

- How quickly teams can explore and iterate on design choices while delivering differentiated value, product performance, and cost efficiency.

- How early system-level interactions can be detected, before they turn into delivery friction or costly late-stage failures.

- How effectively a company can encode and scale its internal engineering know-how into lean development processes.

On top of these pressures, AI is on every engineer’s mind. Over the past two years, automotive OEMs and Tier 1 suppliers have significantly increased their adoption of AI tools, driven by the promise of navigating unprecedented complexity at unprecedented speed. Yet the industry faces a paradox: despite heavy investment, measurable impact is lagging. Many initiatives stall at the pilot stage, unable to scale beyond isolated use cases or constrained demonstrations.

Why do most initiatives stall at the pilot stage?

Most organizations look at AI technologies as drop-in accelerators to replace existing steps in the complex vehicle engineering process. They do this without taking a step back to understand the nature of AI, which demands a different way of thinking and the courage to question processes that have grown organically into the “monster” workflows we know today.

This leads to a misalignment between AI capabilities and engineering goals, and creates the risk of a rapidly widening skill gap between engineering teams and the lightning-fast progress of available technologies.

To close this gap, automotive companies must rethink not only the tools they deploy but also the fundamentals of how engineering processes work from concept to validation. As this happens, we are witnessing the formation of a new engineering playbook: one that will determine which OEMs gain the agility, system-level insight, and speed required to win in the next decade.

From digital-first to quantitative design

Since the 1990s, CAD and CAE have transformed automotive design from paper drawings and physical tests into a largely digital development process. This trend, coupled with the complexity of these tools, resulted in businesses optimizing for scale and efficiency - layering tools, teams and processes in ways that solved immediate challenges but gradually fragmented the end-to-end engineering workflow. Today, AI represents the next inflection point, with an opportunity to bring agility and ownership back to the product development processes.

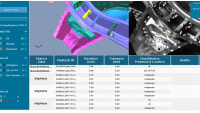

Real-time physics prediction is only a small part of the story. The fundamental shift lies in the simultaneous exploration of design alternatives and in bringing multidisciplinary, system-level insights into the development journey at earlier stages. AI is an opportunity to reshape how engineers think, reason, and collaborate on the path to a final product. The most frequent mistake is to expect too much from AI agents and too little from human operators. Embedding AI copilots inside an integrated environment that blends design modifications, physics predictions, and process or manufacturing insights is one way to augment engineers’ judgment in real time. Such copilots can:

- Highlight trade-offs between disciplines,

- Surface alternative concepts to choose from,

- Suggest promising design modifications, and

- Provide answers to “what if?” scenarios on demand.

This shift is driving the rise of quantitative design: engineering workflows in which teams routinely generate, evaluate, and compare thousands (if not millions) of design variants per day, guided by AI feedback loops powered by automated design generation algorithms. The results can then be evaluated across multiple disciplines at once.

At Mahle, for example, instead of iterating manually across CAD, simulations, and manufacturing constraints, engineers could navigate a rich design space of blower architectures that encoded both past experience and innovative concepts. Being able to validate performance and manufacturing compatibility in real time for thousands of designs gave them the freedom to generate a radically better product: a fundamental step change from previous baselines.

AI can fix digital engineering’s UX problem

To understand what AI changes, we must first acknowledge how complex engineering actually is. Highly specialized skills are required to design even the simplest part of a vehicle. An engineer must:

- Know how to design in 3D,

- Understand how parts can be manufactured with different techniques on different production lines, with associated cost and performance trade-offs,

- Make material choices that carry strategic supply-chain implications, and

- Anticipate how the part will behave in the real world, ensuring it performs as expected in use.

Today, this means being able to interpret complex requirements, translate them into highly specific instructions in CAD and CAE software, and build in-depth post-processing analyses before making a decision and moving on to the next concept.

The depth of specialization required is precisely why these activities and decisions are so fragmented across the organization. Teams progress at different paces, discover new issues independently, and must constantly re-adapt and share new insights through suboptimal communication channels. Lengthy, meeting-intensive iteration loops can drag the product development process over multiple years.

The radical efficiency improvement demanded by the market can only come from redefining development dynamics and massively de-fragmenting disciplines and decisions. Process Integration and Design Optimization (PIDO) software has long attempted to do this by reconciling disciplines in a data-driven manner. Sadly, no industrial company in the world relies on PIDO in the critical parts of its main product development process today.

The fundamental reason is simple. PIDO requires a superset of CAD, CAE, and pre/post-processing skills that are usually distributed across an entire team. Only a few people in the company can truly harness PIDO, so their impact remains limited at the scale of the organization.

Counter-intuitively, some of the most advanced engineering teams in the world today are also those relying the least on automation, because they can afford the very best engineers and fully trust their intuition. But what is ten times more powerful than the best engineer in the world? The best engineer in the world augmented with AI and real-time data-driven insights. So far, in fields like software development and computational biology, AI has benefited top performers the most: rather than lowering the barrier to entry for complex tasks, it tends to multiply the productivity of those who already excel.

The reason is that what is complex for AIs is not complex for humans, and vice versa. Humans are outstanding at abstract reasoning, spatial reasoning, intuition, and creativity. Today’s AI shines at high-throughput information retrieval, structured content generation, and automation within a well-established environment. The new wave of AI-first interface design blends the best of both, enabling organizations to harness superhuman powers and reduce friction across processes.

(Super-)human AI-powered processes

In manufacturing, the most efficient processes at scale generally require upfront investment. Similar dynamics apply to AI-powered processes. AI offers the opportunity to frontload work, leverage the best technical skills and know-how in the organization, and package them for scale in the form of AI copilots serving the whole company.

This unlocks a new R&D model in which the marginal cost of exploring additional concepts shrinks to zero. The process of making a design becomes the process of making a design space: engineers shift from focusing on a single 3D shape to defining rules and constraints for a concept, enabling the generation of thousands of variants that can be explored in record time.

They no longer iterate primarily on shapes. Instead, they invest their effort in defining requirements precisely, then instruct AI to iteratively explore evolving design spaces. As a result, a new role is emerging inside advanced OEMs and Tier 1 suppliers: the quantitative designer, who creates value not in making one design, but in shaping the landscape of thousands of possible ones.
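The idea of "making a design space" rather than a single design can be illustrated with a minimal Python sketch. Everything in it is a hypothetical assumption made for illustration: the blower-like parameters, their ranges, and the two rules (a packaging limit on diameter and a minimum blade spacing for manufacturability) do not come from any real program or tool. The point is only that the engineer's effort goes into the parameter ranges and the rules, while variants are enumerated automatically.

```python
import itertools
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class Variant:
    """One candidate design; all fields are illustrative assumptions."""
    blade_count: int
    diameter_mm: float
    blade_angle_deg: float


# Hypothetical design space: the engineer defines ranges, not a single shape.
BLADE_COUNTS = range(5, 12)                    # 5 to 11 blades
DIAMETERS_MM = [100.0, 120.0, 140.0, 160.0]
BLADE_ANGLES_DEG = [20.0, 30.0, 40.0]

# Hypothetical rules standing in for packaging and manufacturing know-how.
MAX_DIAMETER_MM = 150.0       # packaging envelope
MIN_BLADE_SPACING_MM = 40.0   # minimum arc length between blades at the rim


def satisfies_rules(v: Variant) -> bool:
    """Return True if the variant respects every rule of the design space."""
    fits_package = v.diameter_mm <= MAX_DIAMETER_MM
    spacing_mm = math.pi * v.diameter_mm / v.blade_count
    manufacturable = spacing_mm >= MIN_BLADE_SPACING_MM
    return fits_package and manufacturable


def generate_variants() -> list[Variant]:
    """Enumerate the full parameter grid and keep only rule-compliant variants."""
    grid = itertools.product(BLADE_COUNTS, DIAMETERS_MM, BLADE_ANGLES_DEG)
    candidates = (Variant(n, d, a) for n, d, a in grid)
    return [v for v in candidates if satisfies_rules(v)]


if __name__ == "__main__":
    variants = generate_variants()
    print(f"{len(variants)} feasible variants in the design space")
```

In this toy setup, changing a rule (for example, relaxing `MAX_DIAMETER_MM`) reshapes the whole set of candidates at once, which is the kind of leverage the quantitative designer role relies on. A production workflow would replace the exhaustive grid with parametric CAD generation and smarter sampling.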

Where an individual design corresponds to fixed KPI values that reflect physical performance or manufacturing constraints, the quality of a design space translates into a full multi-dimensional Pareto front. From this front, countless design variants can be selected by exploring “what if?” scenarios iteratively: relaxing certain constraints in favor of specific performance objectives, and tightening others based on feedback from different teams.
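A Pareto front can be made concrete with a small Python sketch. It assumes two hypothetical KPIs, both to be minimized (pressure drop and mass), and five made-up candidate designs; none of these names or numbers come from a real project. A design is on the front if no other design is at least as good on every KPI and strictly better on at least one. The optional `max_mass_kg` argument mimics a "what if?" query in which one team tightens a constraint.

```python
from typing import NamedTuple, Optional


class Design(NamedTuple):
    """A design variant with two illustrative KPIs (lower is better for both)."""
    name: str
    pressure_drop_pa: float
    mass_kg: float


def dominates(a: Design, b: Design) -> bool:
    """True if `a` is no worse than `b` on all KPIs and strictly better on one."""
    no_worse = a.pressure_drop_pa <= b.pressure_drop_pa and a.mass_kg <= b.mass_kg
    strictly_better = a.pressure_drop_pa < b.pressure_drop_pa or a.mass_kg < b.mass_kg
    return no_worse and strictly_better


def pareto_front(designs: list[Design],
                 max_mass_kg: Optional[float] = None) -> list[Design]:
    """Return the non-dominated designs, optionally under a mass constraint."""
    if max_mass_kg is not None:
        designs = [d for d in designs if d.mass_kg <= max_mass_kg]
    # Brute-force O(n^2) comparison: fine for a sketch, not for millions of variants.
    return [d for d in designs if not any(dominates(o, d) for o in designs)]


# Made-up candidates, for illustration only.
CANDIDATES = [
    Design("A", pressure_drop_pa=100.0, mass_kg=2.0),
    Design("B", pressure_drop_pa=80.0, mass_kg=2.5),
    Design("C", pressure_drop_pa=120.0, mass_kg=1.8),
    Design("D", pressure_drop_pa=110.0, mass_kg=2.6),  # dominated by A
    Design("E", pressure_drop_pa=80.0, mass_kg=3.0),   # dominated by B
]

if __name__ == "__main__":
    print([d.name for d in pareto_front(CANDIDATES)])                   # ['A', 'B', 'C']
    print([d.name for d in pareto_front(CANDIDATES, max_mass_kg=2.2)])  # ['A', 'C']
```

Tightening the mass limit removes design B from the front, which shows how a single constraint change from one discipline shifts the set of options available to everyone else.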

Collapsing the V-cycle with a modern IT stack

This new engineering playbook is enabled by a new intelligence layer in the engineering software stack. It sits on top of the digital layer (CAD, CAE, and PLM software) to which it connects through bi-directional integrations. The intelligence layer is built as a flexible additional layer, unlocking rapid impact while the transformation of legacy systems moves at a slower pace, with the only near-term requirement being to expose minimal data exchange APIs. At this level, modern, modular application architectures can be easily adapted, allowing them to manage both environments with decoupled roadmaps.

By design, engineering work in the intelligence layer is fast, interconnected, and data-driven. As the system scales, these properties should drive IT decisions to enable workloads that span different environments: interconnected high-performance computing clusters, central serving infrastructure, user workstations, and elastic cloud infrastructure wherever the application allows it. Fully unlocking the impact of AI across organizations and disciplines requires intentional IT design where:

- Compute is not a constraint, as human time is always more valuable.

- Data is traceable and actionable at scale, by humans and AI agents alike.

- Workloads and data follow transparent standards and remain modular, rather than being forced into large, constraining systems.

As these ingredients come together, the intelligence layer architecture spins an AI flywheel, where efficiency gains unlock new orders of magnitude in engineering data generation and increasingly intelligent systems.

The future of engineering will be defined by organizations that scale processes at the pace of AI

This accelerating synergy between engineers and AI marks a generational opportunity to redefine how innovation happens. In the near term, the priority for OEMs and their technical leadership is to demonstrate early wins and establish a clear plan to scale them. This means:

- Selecting 1–3 strategic processes or toolchains where AI-powered quantitative design can deliver immediate impact.

- Auditing development workflows more broadly to quantify the cost of fragmentation across R&D activities and legacy toolchains.

- Combining domain expertise with AI know-how to weigh opportunities against the effort required to migrate from manual CAD iteration to quantitative design.

- Updating IT and PLM strategies with an AI-first perspective, ensuring architectures, data flows, and compute resources can support an intelligence layer that scales.

- Investing in workforce empowerment, training a new generation of AI builders who can create powerful workflows in the intelligence layer, and equipping quantitative designers with the ownership needed to drive system-level decisions across disciplines.

Théophile Allard is chief technology officer for Neural Concept and wrote this article for SAE Media.
