A Supercomputer for Aerospace and Defense Systems Modeling and Simulation

In February 2024, Cadence launched what it calls a new generation of computational fluid dynamics (CFD) with the introduction of the Millennium M1 CFD Supercomputer.

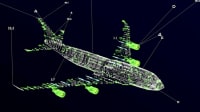

Millennium M1 is a graphics processing unit (GPU)-based hardware system that is also available entirely in the cloud, with no on-premises hardware required. Cadence describes it as the industry’s first hardware/software (HW/SW) accelerated digital twin solution for multi-physics system design and analysis. Millennium M1 was developed using some of the latest accelerated computing technology from NVIDIA, including its GPUs. It offers near-linear scaling and throughput equivalent to up to 32,000 CPU cores, which allows predictive CFD simulations to run ahead of production testing.

The system’s architecture pairs GPU hardware with solvers designed to run on GPUs, giving researchers and engineers a powerful tool for tackling complex simulations and analyses.

What is a CFD supercomputer, how does it work, and what could it provide for engineers and designers of complex aerospace and defense electronic and mechanical systems? Below are excerpts from our Q&A session with Frank Ham, Vice President of Research and Development at Cadence, who answered these questions and more during his appearance on the Aerospace & Defense Technology podcast.

Aerospace & Defense Technology (A&DT): What is multi-physics simulation and how is it being used today in the aerospace and defense industry?

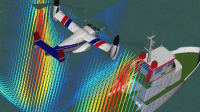

Frank Ham: Multi-physics simulation is essentially simulation that involves multiple, meaning two or more, different physics-based modeling approaches interacting together. So if we restrict the discussion to just CFD, an example of multi-physics simulation would be fluid flow plus heat transfer.

That combination would be used to predict the heating experienced by a high-speed vehicle, for example. Fluid flow plus combustion can be used to predict the exit temperature profile of a gas turbine engine. Another example would be fluid-structure interaction; in aerospace, this would be used to predict the buffeting boundary at the edge of the flight envelope.
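To make the fluid flow plus heat transfer example concrete, here is a deliberately minimal Python sketch. It does not represent Cadence’s method. A prescribed, uniform flow velocity `u` carries (advects) a temperature field along a periodic 1D grid while heat also diffuses with diffusivity `alpha`, so the sketch solves ∂T/∂t + u ∂T/∂x = α ∂²T/∂x². The coupling here is one-way: the flow moves the heat, but temperature does not feed back into the flow. Production multi-physics CFD typically solves the flow and energy equations together in 3D. The function names, grid, and parameter values are hypothetical.

```python
# Toy "fluid flow + heat transfer" sketch (hypothetical; not Cadence's solver).
# A prescribed uniform velocity u advects temperature T on a periodic 1D grid
# while heat diffuses with diffusivity alpha:  dT/dt + u dT/dx = alpha d2T/dx2


def step(T, u, alpha, dx, dt):
    """Advance T by one explicit time step (first-order upwind + central diffusion)."""
    if u < 0:
        raise ValueError("this sketch assumes u >= 0 (flow from left to right)")
    c = u * dt / dx           # advective Courant number
    d = alpha * dt / dx**2    # diffusion number
    if c + 2 * d > 1:
        raise ValueError("time step too large: need u*dt/dx + 2*alpha*dt/dx**2 <= 1")
    n = len(T)
    new_T = []
    for i in range(n):
        left = T[i - 1]           # i - 1 == -1 wraps to the last cell (periodic)
        right = T[(i + 1) % n]    # wrap the right neighbour as well
        advection = -c * (T[i] - left)             # upwind: flow brings heat from the left cell
        diffusion = d * (right - 2 * T[i] + left)  # heat conducted between neighbouring cells
        new_T.append(T[i] + advection + diffusion)
    return new_T


def simulate(T0, u, alpha, dx, dt, steps):
    """Run `steps` explicit time steps starting from the initial field T0."""
    T = list(T0)
    for _ in range(steps):
        T = step(T, u, alpha, dx, dt)
    return T


if __name__ == "__main__":
    n = 50
    T0 = [300.0] * n    # background temperature in kelvin
    T0[n // 2] = 400.0  # a single hot cell in the middle
    T = simulate(T0, u=1.0, alpha=0.01, dx=0.02, dt=0.005, steps=40)
    print(f"peak temperature: {max(T):.1f} K at cell {T.index(max(T))}")
```

The explicit scheme stays stable and free of spurious oscillations only while `u*dt/dx + 2*alpha*dt/dx**2 <= 1`, which is why the sketch checks that condition before stepping. Under it, each new cell value is a weighted average of its neighbours, so the hot spot drifts downstream and spreads out without creating new extremes.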

One last example that’s kind of fun is actually computing sound. Sound is part of the Navier-Stokes equations, the equations that are solved with CFD.

Nowadays, you can run a simulation of a plane or a drone, actually listen to it, and get its noise signature. This early-stage virtual prototyping is very valuable when you’re trying to make vehicles safer, more efficient, and quieter.
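The standard textbook argument shows why sound "is part of" the flow equations. It applies to the simplest case, assumed here for illustration: small perturbations (ρ′, p′, **u**′) about a fluid at rest with density ρ₀ and speed of sound c₀. Viscous terms are neglected, which is the inviscid (Euler) limit of the compressible Navier-Stokes equations, and the perturbations are related by p′ = c₀²ρ′. Linearizing mass and momentum conservation and then eliminating the velocity gives the acoustic wave equation:

$$
\begin{aligned}
\frac{\partial \rho'}{\partial t} + \rho_0 \nabla\cdot\mathbf{u}' &= 0, \qquad
\rho_0 \frac{\partial \mathbf{u}'}{\partial t} = -\nabla p', \\
\frac{\partial^2 \rho'}{\partial t^2} - \nabla^2 p' &= 0
\quad\Longrightarrow\quad
\frac{1}{c_0^2}\frac{\partial^2 p'}{\partial t^2} - \nabla^2 p' = 0 .
\end{aligned}
$$

The second line follows from taking ∂/∂t of the mass equation and the divergence of the momentum equation, then substituting one into the other. Because these pressure waves travel at the finite speed c₀, a solver has to retain compressibility to capture them directly. An incompressible formulation, which effectively treats the sound speed as infinite, does not represent acoustic waves.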

A&DT: What are some of the main software and hardware components that make Millennium M1 a CFD “supercomputer,” as Cadence describes it?

Ham: One of the longstanding challenges with multi-physics simulation, and particularly multi-physics CFD, is that it’s computationally costly. It has traditionally required large supercomputers with many thousands of CPU cores and, in some cases, many days to complete even a single simulation.

But modern graphics processing units (GPUs) and the accelerated computing paradigm are changing all of this. Using GPUs and software that is specially designed to run on them, multi-physics CFD can be sped up by an order of magnitude or more, with much lower power consumption as well.

This can turn a week of simulation into a day, or a day into a couple of hours. This is what’s transforming the impact of multi-physics CFD on design in many industries. For a company that’s currently using more traditional approaches to simulation, I would say there are two big challenges in realizing the benefits of accelerated computing.

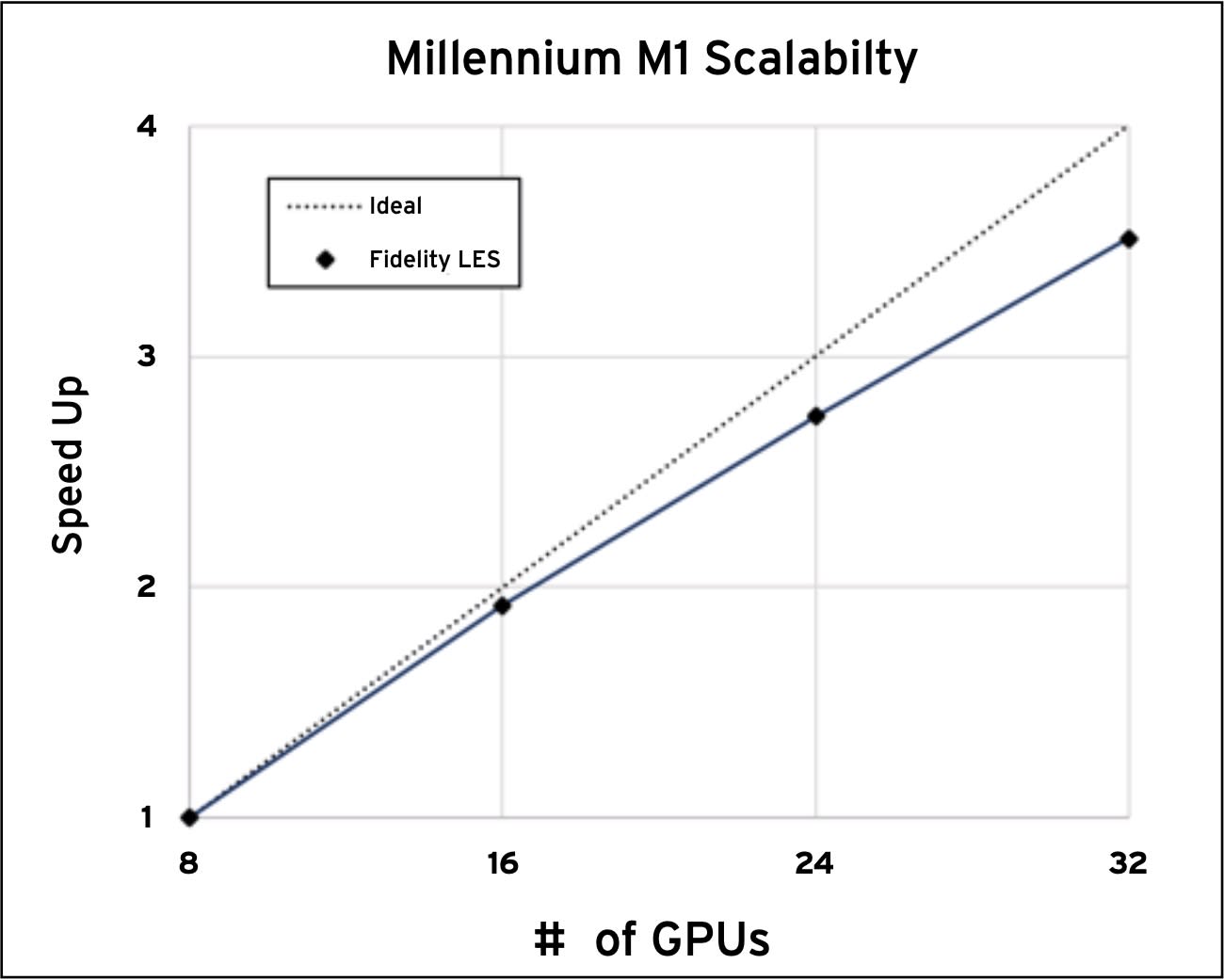

The first, of course, is that the software architecture has to change. The software must be specially designed to be resident on the GPU and to run there. The Fidelity Large Eddy Simulation (LES) Solver was one of the first CFD solvers to actually take this leap, many years ago.

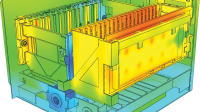

The second challenge for a company is getting consistent access to cost-effective GPU hardware that is specifically optimized for simulation. To address that second challenge, Cadence created Millennium. Millennium is a hardware/software solution that combines GPU-resident solvers (currently CFD solvers) with hardware co-optimized with the major hardware OEMs to deliver a turnkey, scalable, and secure solution for CFD.

For certain types of high-fidelity CFD, a single Millennium box that you can bring into your data center and plug in has throughput equivalent to something like 32,000 CPU cores. That’s why we call it a supercomputer: even though its footprint is relatively small, it has the throughput of an enormous compute infrastructure.

Beyond that, multiple boxes can easily be networked together. As for the impact of Millennium and accelerated computing on CFD, there are really two directions that the companies we work with are exploring. The first is simply to run more simulations, faster, than they currently do.

This produces more data that can feed optimization and even train AI models, which rely on large amounts of training data. The other direction, when you get an order-of-magnitude increase in compute, is to go after simulations, typically high-fidelity simulations, that were previously out of reach because they were cost-prohibitive. A really good example in aerospace is predicting aircraft stall at a high angle of attack.

It’s a really difficult problem. When the plane pitches up, predicting when the flow starts to separate from the wings and lift falls off has been challenging for traditional CFD approaches at any scale. High-fidelity approaches enabled by hardware like Millennium are allowing companies to predict this accurately and robustly, and to complement their flight testing and wind tunnel testing.
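The speedup figures earlier in this answer are simple ratios. The back-of-the-envelope sketch below assumes that a single uniform speedup factor applies to an entire run; in practice, the gain depends on the solver, the model, and the hardware. Under that assumption, an order-of-magnitude (10x) speedup turns a one-week (168-hour) run into 16.8 hours and a 24-hour run into 2.4 hours. It also multiplies the number of back-to-back runs that fit in a week by the same factor. The helper names `accelerated_hours` and `runs_per_week` are hypothetical.

```python
HOURS_PER_WEEK = 7 * 24


def accelerated_hours(baseline_hours: float, speedup: float) -> float:
    """Wall-clock hours for a run after a uniform speedup (hypothetical helper)."""
    if baseline_hours < 0 or speedup <= 0:
        raise ValueError("need baseline_hours >= 0 and speedup > 0")
    return baseline_hours / speedup


def runs_per_week(baseline_hours: float, speedup: float) -> int:
    """Number of complete back-to-back runs that fit in one week of wall-clock time."""
    return int(HOURS_PER_WEEK // accelerated_hours(baseline_hours, speedup))


if __name__ == "__main__":
    for baseline in (float(HOURS_PER_WEEK), 24.0):
        print(f"{baseline:>6.1f} h baseline -> {accelerated_hours(baseline, 10):.1f} h at 10x")
    # More throughput means more design points explored in the same calendar time.
    print("runs/week at 1x: ", runs_per_week(24.0, 1))
    print("runs/week at 10x:", runs_per_week(24.0, 10))
```

The second helper ties the arithmetic to the first direction Ham describes. At 10x, a day-long job goes from 7 runs per week to 70, which is the kind of additional data that can feed optimization or AI model training.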

A&DT: In the U.S., the Department of Defense has embraced a shift to the concept of “digital engineering” within its acquisition process for new technologies. As an example, in May 2024, the U.S. Army published a memorandum outlining its focus on shifting from the traditional “design, build, test” method toward a “model, analyze, build” approach. How could a CFD supercomputer help enable this shift?

Ham: The word that really stands out to me there is shift. Inside Cadence, we like to use the term “shift left.” It refers to the early integration of more model-based engineering into the design process. We, of course, believe that shift left can offer incredible value in design as well as in product sustainment and maturation, as more simulation is integrated into the decision-making process earlier and all the way along.

Now it’s easy for me to say that. But if this shift is so valuable, why hasn’t everybody already jumped in?

I think the reasons are fairly straightforward, and Millennium certainly helps to address some of them. First of all, there is the issue of simulation accuracy.

Simulations are not mother nature. They’re just virtual versions of mother nature, and they’re imperfect. But they need to be sufficiently accurate and trustworthy to be actionable.

And in many cases, traditional CFD has not been. But high fidelity methods enabled by accelerated computing platforms like Millennium have the potential to change this. And that’s in fact being demonstrated by some of the customers using this approach.

For other simulations, the issue has been not just accuracy but also throughput: there simply wasn’t enough throughput to properly explore the design space. In this case, Millennium, with its dramatic increase in throughput even for traditional methods, helps to address this bottleneck.

And as I mentioned previously, this more rapid production of data can support the training needs of data-driven models, or AI models. Those models can further improve the design and optimization process all through the life cycle, for example by building real-time digital twins that can assimilate data from many sources and follow products over time. So I think it’s not a panacea, but Millennium addresses these two really important issues with the shift that has to occur.

