Driver-Monitoring Systems to Be as Common as Seat Belts

Smart Eye, a leader in driver-monitoring safety systems, explains how driver alertness is calculated, and it involves your eyelids.

Regulators across the globe, most notably in Europe, are calling for driver-monitoring systems to become standard vehicle-safety equipment. A vehicle’s ability to combine cabin-facing cameras with software to determine whether a driver is alert, drowsy or distracted could soon become either mandatory or necessary to achieve high safety rankings. That’s good news for Smart Eye, the 20-year-old Swedish eye-tracking and artificial intelligence (AI) company.

The Gothenburg-based outfit has been working on automotive applications since about 2015. With Smart Eye systems deployed in about a half-dozen BMW models, and contracts secured with 10 other major global automakers, the company is well positioned in the driver-detection space. Australia’s Seeing Machines is also a leading provider. “This is the next big thing when it comes to safety,” said Peter Rundberg, a technical expert at Smart Eye. “It’s going to be as big as airbags and safety belts.”

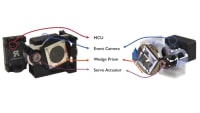

The required hardware is relatively simple. Current Smart Eye systems feature a 1-megapixel camera with 1,280-by-800-pixel resolution. It has both RGB and infrared (IR) capabilities: color images can be captured in daylight, while active IR illumination can render detailed images even in pitch darkness without disturbing the driver.

According to Rundberg, next-generation systems will upgrade to 2-megapixel cameras yielding about 1,600-by-1,300 pixels, thus allowing for a wider view while still preserving the necessary detail. Each Smart Eye implementation is customized for the automaker, to allow for either single or dual cameras that can be mounted in the center stack, roof liner, steering column or other locations.

Measuring blink velocity

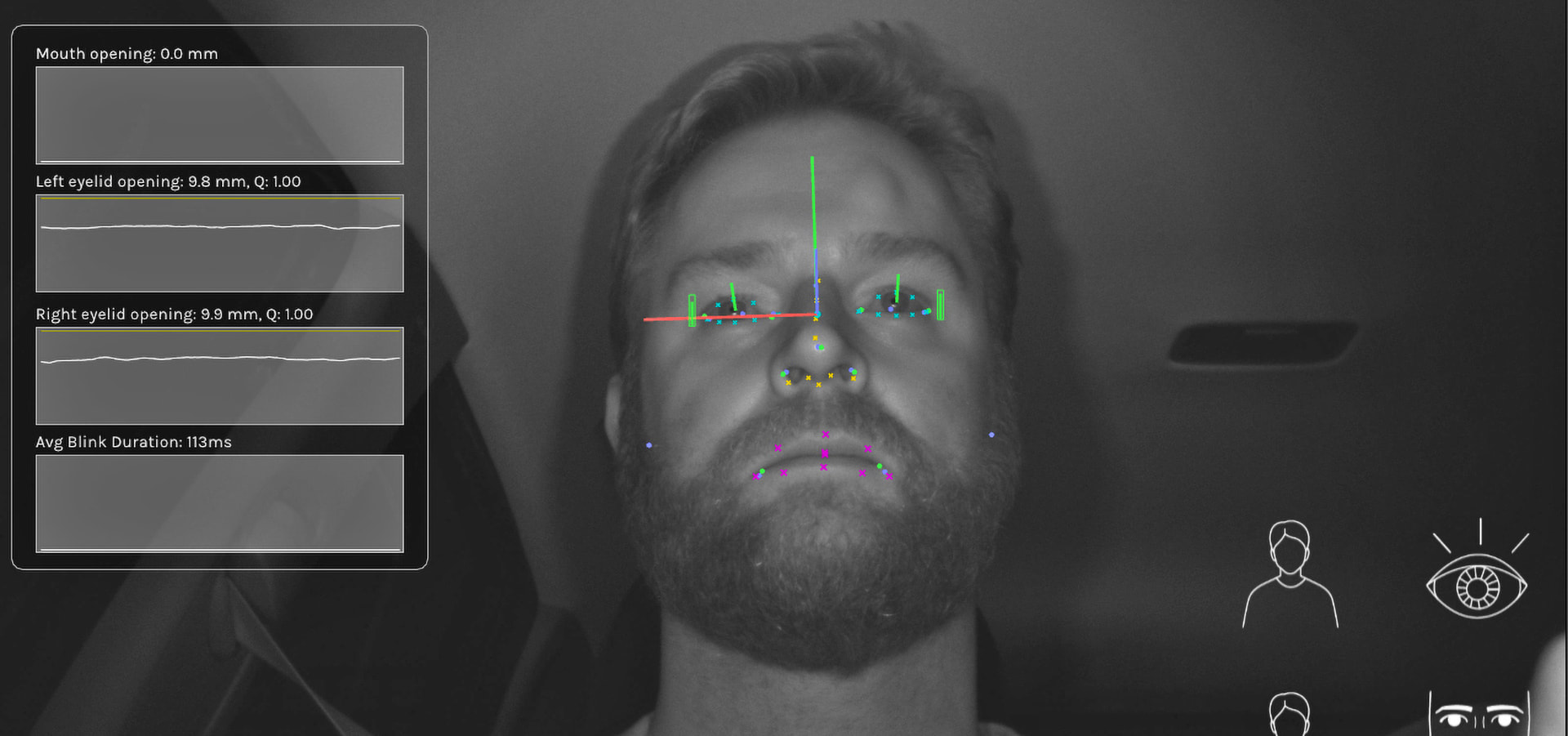

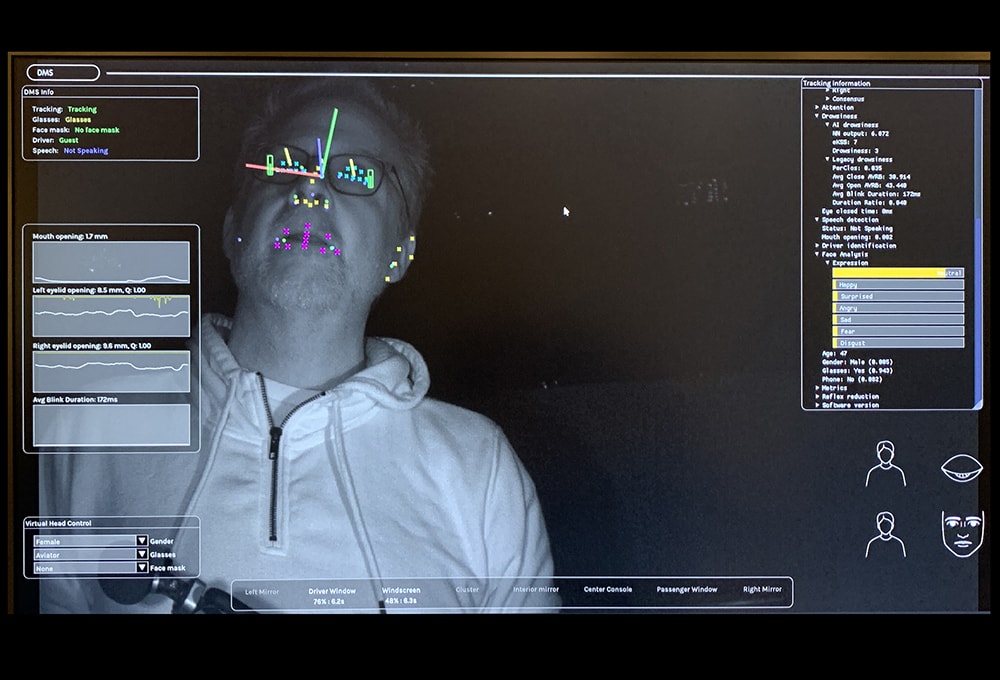

The secret sauce is the software. The system is calibrated based on the camera’s precise position to identify where the driver’s head and face are located. “The system can compute where you are in relation to the car,” said Rundberg. “It knows the lens distortion parameters.” Then a combination of classic computer vision and machine learning works its magic. The computer vision finds key location points to map your eyes, nostrils, mouth and ears. Then the location and rotation of your head are computed over successive frames.
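As a rough illustration of how landmark points can turn into a head angle, the sketch below estimates only the in-plane roll of the head from two eye landmarks in image coordinates. It is a simplification under stated assumptions, not Smart Eye’s method: the function name and the landmark inputs are hypothetical, and a production system would estimate full 3D position and rotation using the camera calibration Rundberg describes.

```python
import math
from typing import Tuple

Point = Tuple[float, float]  # (x, y) in pixels; image y grows downward


def head_roll_degrees(eye_a: Point, eye_b: Point) -> float:
    """Estimate in-plane head roll from the line joining the two eyes.

    eye_a is the eye that appears on the left side of the image and eye_b
    the one on the right (hypothetical landmark inputs). A result of 0
    means the eyes are level; a positive value means the right-hand eye
    sits lower in the image.
    """
    dx = eye_b[0] - eye_a[0]
    dy = eye_b[1] - eye_a[1]
    return math.degrees(math.atan2(dy, dx))
```

Running this on each frame and comparing successive results gives a crude picture of how the head is tilting over time.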

“We can run this on a mid-range ARM core, like from a cell phone from five years ago,” said Rundberg. “The processors cost less than $10 a pop.” Processing and data can stay local unless an automaker wants to use the cloud to track a specific passenger from one vehicle to another. The system becomes powerful when machine learning is applied. Patterns in factors such as head position, blink duration and how wide the mouth opens are used to infer awareness.

“The major components that go into the drowsiness algorithm are related to blinks, eyelid movements and mouth movements – more than head movement,” Rundberg said. “The blink duration gets longer, but also the slope of the velocity of the eyelid gets slower, as you get more tired.” The AI gets better at measuring alertness with more data. As Smart Eye’s algorithms improve, based on input across its growing customer base, the new code can be deployed to cars via over-the-air updates.
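To make Rundberg’s two blink signals concrete, here is a minimal sketch that pulls blink duration and peak eyelid-closing velocity out of a per-frame eyelid-opening trace. Everything in it is an assumption made for illustration: the function name, the 2 mm "closed" threshold, the three-frame lead-in window and the sample traces are hypothetical, and Smart Eye’s actual algorithm is not public in this article.

```python
from typing import List, Tuple

# Hypothetical eyelid-opening traces in millimeters, one value per frame.
ALERT_TRACE = [10, 10, 5, 1, 1, 5, 10, 10]
DROWSY_TRACE = [10, 10, 8, 6, 4, 1, 1, 1, 1, 1, 4, 6, 8, 10]


def blink_metrics(opening_mm: List[float], fps: float = 60.0,
                  closed_below_mm: float = 2.0,
                  lead_in_frames: int = 3) -> List[Tuple[float, float]]:
    """Return (duration_ms, peak_closing_velocity_mm_per_s) per blink.

    A blink is a run of consecutive frames whose opening is below
    closed_below_mm. A blink still in progress at the end of the trace
    is ignored.
    """
    dt = 1.0 / fps  # seconds between frames
    blinks: List[Tuple[float, float]] = []
    start = None
    for i, value in enumerate(opening_mm):
        closed = value < closed_below_mm
        if closed and start is None:
            start = i  # first closed frame of this blink
        elif not closed and start is not None:
            duration_ms = (i - start) * dt * 1000.0
            # Look at a few frames before the blink plus the closed frames
            # and take the steepest frame-to-frame drop in opening.
            lo = max(start - lead_in_frames, 0)
            window = opening_mm[lo:i]
            drops = [(window[k] - window[k + 1]) / dt
                     for k in range(len(window) - 1)]
            peak_closing = max(max(drops, default=0.0), 0.0)
            blinks.append((duration_ms, peak_closing))
            start = None
    return blinks
```

With the sample traces, the drowsy blink comes out both longer and slower to close than the alert one, which is the pattern described in the quote.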

We took the system for a test drive at CES 2020 in Las Vegas. Measurements for eye-blink duration were captured at around 180 milliseconds with no discernible latency. As we spoke, mouth-movement data, from 1 to 30 millimeters, were captured in real time. The camera’s frame rate of 60 frames per second meant a new image-data snapshot was output roughly every 16.7 milliseconds.
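The timing figures are easy to check. At 60 frames per second the interval between frames is 1000/60, or about 16.7 milliseconds, so a 180-millisecond blink spans roughly ten frame intervals. The helper names below are hypothetical and exist only to show the arithmetic.

```python
def frame_interval_ms(fps: float) -> float:
    """Time between consecutive frames, in milliseconds."""
    return 1000.0 / fps


def frames_spanned(duration_ms: float, fps: float) -> int:
    """Number of whole frame intervals that fit inside an event."""
    return int(duration_ms // frame_interval_ms(fps))
```

That leaves around ten samples per blink, enough to estimate how fast the eyelid is moving rather than just whether the eye is open or shut.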

The drowsiness score, which draws on factors such as the amplitude-to-velocity ratio of blinks, uses the Karolinska Sleepiness Scale (KSS) developed by Swedish researchers. Smart Eye benchmarks those numbers against legacy research for measuring drowsiness. Wider-angle images can be used to visually determine whether a driver’s hands are on the wheel, whether a phone is being held and whether the seat belt is fastened.
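For readers unfamiliar with the amplitude-to-velocity ratio, the sketch below shows one plausible way to compute it: blink amplitude divided by peak eyelid velocity, which yields a value in seconds. The function name, units and example numbers are assumptions for illustration; the article does not say how Smart Eye defines the ratio or how it maps onto the KSS, so no such mapping is attempted here.

```python
def amplitude_velocity_ratio(amplitude_mm: float,
                             peak_velocity_mm_per_s: float) -> float:
    """Blink amplitude divided by peak eyelid velocity, in seconds.

    For the same amplitude, a slower eyelid gives a larger ratio.
    """
    if peak_velocity_mm_per_s <= 0:
        raise ValueError("peak velocity must be positive")
    return amplitude_mm / peak_velocity_mm_per_s


# Hypothetical blinks with the same 9 mm amplitude but different speeds.
alert_ratio = amplitude_velocity_ratio(9.0, 300.0)
drowsy_ratio = amplitude_velocity_ratio(9.0, 180.0)
```

Because the ratio normalizes velocity by amplitude, it lets blinks of different sizes be compared on the same footing.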

The system has the uncanny ability to detect where a driver is looking. The primary use case is to ensure safety. But the data supplied by Smart Eye can be used by automakers for many value-add services. For example, if rain sensors detect moisture and the driver looks into the rear-view mirror, the rear wipers can automatically turn on. Or dashboard lighting can be dim when the driver looks ahead at the road, but then brighten for the split second when the driver glances at gauges.
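The two convenience examples amount to simple rules over gaze and sensor inputs. Here is a minimal sketch of how an automaker might express them; the class, the gaze-target labels and the brightness levels are all hypothetical and do not reflect any real Smart Eye or vehicle API.

```python
from dataclasses import dataclass


@dataclass
class CabinState:
    rain_detected: bool
    gaze_target: str  # hypothetical labels: "road", "rear_mirror", "gauges"


def rear_wiper_on(state: CabinState) -> bool:
    """Run the rear wiper only when it is wet and the driver checks the mirror."""
    return state.rain_detected and state.gaze_target == "rear_mirror"


def dashboard_brightness(state: CabinState,
                         dim: float = 0.2, bright: float = 1.0) -> float:
    """Brighten the dashboard only while the driver is looking at the gauges."""
    return bright if state.gaze_target == "gauges" else dim
```

Keeping each rule as a small pure function of the current state makes it straightforward to add further gaze-driven features without touching the safety logic.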
