Architectures for Cognitive Systems

Cognitive systems can perform intelligent operations such as learning and making autonomous decisions.

A need exists for small, autonomic systems in the battlefield. Autonomy allows the creation of unmanned systems to perform complex, high-risk, and/or covert operations in the battlefield without the need for constant human operation. Current computing systems are not optimized to perform intelligent operations such as environmental awareness, learning, and autonomic decisions in a size, weight, and power form factor that matches platforms envisioned for future use.

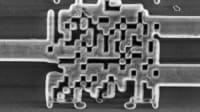

The term “cognitive operations” emphasizes lower-level operations that require massively parallel computing to perform in real time at a resolution rivaling human operations. An example is processing of visual images for object recognition. The project focused on architecture development to enable massively parallel processing, and the optimization of algorithms to utilize the new hardware architecture. The approach was to develop an architecture for processing the cognitive primitives that was not subject to limitations to parallelism that restricts Von Neumann type systems.

Efforts are underway to emulate human-brain-scale processing. There are multiple approaches that can be differentiated by the resolution level in the emulation used and the use for the output of the emulation. Of contention is whether or not emulation down to the molecular level is required for the computing system to perform and not just simulate or emulate various levels of cognitive functions. What is not in contention is the issue of the energy needed to achieve human-scale operations. Given the current rate of progress in energy efficiency, it is estimated that a human-scale system using the current processor and large supercomputer architectures will require megawatts to operate.

Human-scale systems, or brains, work around the limits of Von Neumann and Amdahl by using a concurrent, dynamic, massively parallel processing network. In this project, the processor was significantly reduced in size versus commercial processors. The cognitive operation primitive was set at the functional level. As the scale of the total system is increased by clustering nodes, responsibility for cognitive primitives will move up from the traditional “each processor is performing many serial cognitive primitive operations” to a network of nodes level. Each node will be responsible for a single cognitive primitive and be capable of performing the operation very quickly.

This network workload architecture will require a very large number of nodes to accommodate a large range of knowledge for cognitive operations. In this manner, the system parallelism is pushed closer to the level at which the cognitive primitive is performed. The network node becomes the functional primitive hardware unit for semantic operations. A semantic network node architecture was impractical in the past because there was more commercial benefit in building one very large, fast processor in a fixed area than to divide the same chip area into many smaller processors.

The features that make the architecture developed in this project useful for cognitive operations also make it useful for many other military applications. The architecture makes major progress in the trade space for size, weight, energy demand, cyber security, system reliability, processing speed, modularity, bandwidth internal to a cluster, and flexibility of operation and resource control.

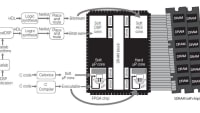

The Floating Point Unit (FPU) in the ASP was optimized for extremely energy-efficient processing of Fast Fourier Transform algorithms. This makes the architecture a very powerful system for Parallel Discrete Event Simulation used in planning tools. The modularity and ability to dynamically reallocate resources provides opportunities for several cyber-security hardware features including node-level Advanced Encryption Standard (AES) encryption.

This work was done by Thomas E. Renz of the Air Force Research Laboratory. AFRL-0191

This Brief includes a Technical Support Package (TSP).

Architectures for Cognitive Systems

(reference AFRL-0191) is currently available for download from the TSP library.

Don't have an account?

Overview

The document titled "Architectures for Cognitive Systems" is a Final Technical Report published by the Air Force Research Laboratory (AFRL) in February 2010. It details the progress and findings of a project focused on the design and development of cognitive systems, particularly through the lens of hardware and software architectures.

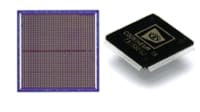

The report outlines the project's timeline, which spanned from October 2007 to September 2009, and highlights the collaborative efforts involved in the research. The primary focus of the project was to create a chip design under the Cognitive Cluster on a Chip initiative, with a scheduled tape-out for fabrication in February 2010.

A significant portion of the report is dedicated to the hardware design, emphasizing the goals of maximizing power efficiency, enhancing core-to-core connectivity, and ensuring system modularity while minimizing communication latency. The design process involved careful consideration of new features against their costs in terms of core area, instruction requirements, and security implications. The architecture chosen includes a small Application Specific Processor (ASP), associated Random Access Memory (RAM), and an Asynchronous Field Programmable Gate Array (AFPGA). The chip design features four cores tiled onto a compact 7mm by 7mm chip, utilizing IBM's 65 nm Trusted Foundry process for fabrication.

The report also discusses the memory architecture, which includes a Static Random Access Memory (SRAM) designed using the Virage Logic cell library, accommodating a ½ MB block within a specified memory area. Each core is equipped with a 32KB bank of non-cacheable memory for control and status information, crucial for managing the core's configuration and data flow.

In addition to hardware design, the report touches on software design and cognitive models, although these sections are less detailed. The results section indicates that the chip design was nearing completion by the project's end, suggesting a successful progression towards the project's objectives.

Overall, the report serves as a comprehensive overview of the methodologies, results, and future work related to cognitive system architectures, providing insights into the technical challenges and innovations in the field. It is a valuable resource for researchers and practitioners interested in cognitive computing and advanced hardware design.

Top Stories

INSIDERManufacturing & Prototyping

![]() How Airbus is Using w-DED to 3D Print Larger Titanium Airplane Parts

How Airbus is Using w-DED to 3D Print Larger Titanium Airplane Parts

INSIDERManned Systems

![]() FAA to Replace Aging Network of Ground-Based Radars

FAA to Replace Aging Network of Ground-Based Radars

NewsTransportation

![]() CES 2026: Bosch is Ready to Bring AI to Your (Likely ICE-powered) Vehicle

CES 2026: Bosch is Ready to Bring AI to Your (Likely ICE-powered) Vehicle

NewsSoftware

![]() Accelerating Down the Road to Autonomy

Accelerating Down the Road to Autonomy

EditorialDesign

![]() DarkSky One Wants to Make the World a Darker Place

DarkSky One Wants to Make the World a Darker Place

INSIDERMaterials

![]() Can This Self-Healing Composite Make Airplane and Spacecraft Components Last...

Can This Self-Healing Composite Make Airplane and Spacecraft Components Last...

Webcasts

Defense

![]() How Sift's Unified Observability Platform Accelerates Drone Innovation

How Sift's Unified Observability Platform Accelerates Drone Innovation

Automotive

![]() E/E Architecture Redefined: Building Smarter, Safer, and Scalable...

E/E Architecture Redefined: Building Smarter, Safer, and Scalable...

Power

![]() Hydrogen Engines Are Heating Up for Heavy Duty

Hydrogen Engines Are Heating Up for Heavy Duty

Electronics & Computers

![]() Advantages of Smart Power Distribution Unit Design for Automotive...

Advantages of Smart Power Distribution Unit Design for Automotive...

Unmanned Systems

![]() Quiet, Please: NVH Improvement Opportunities in the Early Design...

Quiet, Please: NVH Improvement Opportunities in the Early Design...