NRL Research Physicists Explore Fiber Optic Computing Using Distributed Feedback

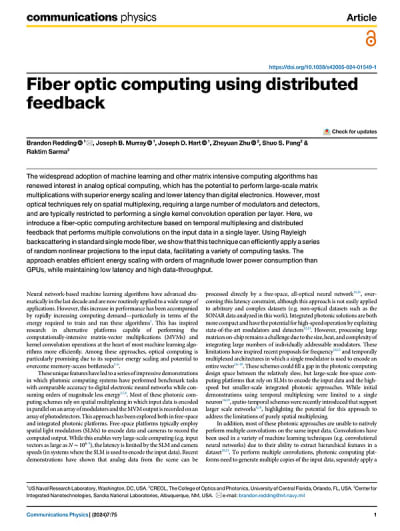

Researchers introduce a fiber-optic computing architecture based on temporal multiplexing and distributed feedback that performs multiple convolutions on the input data in a single layer.

U.S. Naval Research Laboratory (NRL) researchers have outlined a novel contribution to fiber-optic computing in a paper recently published in the journal Communications Physics, bringing the Navy one step closer to faster, more efficient computing technologies.

Optical computing uses the properties of light, such as its speed and ability to carry large amounts of data, to process information more efficiently than traditional electronic computers.

In collaboration with Sandia National Laboratories and the University of Central Florida, NRL is aiming to increase processing speeds, reduce energy consumption, and enable new applications in fields such as data processing, telecommunications, and artificial intelligence.

“This paper marks a significant advancement in optical computing,” said Brandon Redding, Ph.D., a Research Physicist from the NRL Optical Sciences Division. “It is the first to employ distributed feedback in optical fiber, combining temporal encoding with low-loss, partially reflective fiber. Our approach offers scalability to process multiple neurons simultaneously, along with high-speed performance and a compact, lightweight, and power-efficient design, as the entire system is fiber-coupled and does not require free-space optics.”

The Navy is rapidly adopting machine learning algorithms for a wide range of applications. Many of these applications are time- and energy-sensitive, for instance image or target recognition tasks in which objects must be identified in real time.

“Many of these applications involve forward-deployed, often autonomous platforms with limited power availability,” Redding said. “We intend to use analog photonics, which has fundamentally different energy scaling than von Neumann-based digital electronics, to perform these machine learning tasks with lower power consumption and with lower latency. In the current paper, we performed an energy consumption analysis showing the potential for 100-1,000x lower power consumption than a GPU, depending on the problem size.”

This research shows how optics can perform valuable computing tasks using passive random projections, in this case non-linear random convolutions. This runs counter to how most machine learning works, which typically requires extensive training to set the weights of a neural network.

“Instead, we show that random weights can still perform useful computing tasks,” Redding said. “This is significant because we can apply random weights very efficiently in the optical domain simply by scattering light off of a rough surface, or, as we show in this paper, scattering light off non-uniformities in an optical fiber.”

In traditional digital electronic computers, there would be little advantage to doing this, because every multiplication costs the same time and energy whether it multiplies by a random number or by a value carefully selected through training.

“This implies that in the optical domain, we may want to design our neural network architectures differently to take advantage of the unique features of optics — some things are easier to do in optics and some things are harder, therefore simply porting the same neural network architecture that was optimized for digital electronics implementations may not be the ideal solution in the optical domain,” Redding said.
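The claim that fixed random weights can still do useful work can be illustrated with a small random-features model: an untrained random projection followed by a nonlinearity, with only a linear readout trained. This is a minimal NumPy sketch under our own assumptions (a random complex Gaussian matrix standing in for optical scattering, a squared-magnitude nonlinearity standing in for photodetection, a ridge-regression readout, and a hypothetical toy task); it is not NRL's implementation.

```python
import numpy as np

def random_features(x, weights):
    """Fixed (untrained) random complex projection, then square-law detection |.|^2."""
    return np.abs(x @ weights) ** 2

def fit_readout(features, targets, ridge=1e-3):
    """Train only the linear readout, via closed-form ridge regression."""
    n_feat = features.shape[1]
    gram = features.T @ features + ridge * np.eye(n_feat)
    return np.linalg.solve(gram, features.T @ targets)

def r_squared(y_true, y_pred):
    """Coefficient of determination of a prediction."""
    ss_res = np.sum((y_true - y_pred) ** 2)
    ss_tot = np.sum((y_true - y_true.mean()) ** 2)
    return 1.0 - ss_res / ss_tot

rng = np.random.default_rng(0)
n_in, n_feat = 8, 200

# Random weights stand in for scattering: drawn once, never trained.
weights = rng.normal(size=(n_in, n_feat)) + 1j * rng.normal(size=(n_in, n_feat))

def make_data(n):
    """Hypothetical toy regression task (not from the paper)."""
    x = rng.uniform(-1.0, 1.0, size=(n, n_in))
    y = x[:, 0] * x[:, 1] + x[:, 2] ** 2
    return x, y

x_train, y_train = make_data(500)
x_test, y_test = make_data(200)

readout = fit_readout(random_features(x_train, weights), y_train)
score = r_squared(y_test, random_features(x_test, weights) @ readout)
print(f"test R^2 = {score:.3f}")
```

Only `readout` is learned; `weights` stays fixed, which is the part that, per the article, optics can apply passively and cheaply.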

A more subtle feature of NRL’s fiber platform is its ability to perform convolutions, as in a convolutional neural network (CNN), which is rare for an optical computing platform. Convolutions are very powerful for tasks like image processing, which has led to the widespread use of CNNs in Department of Defense image-processing applications.
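A simplified way to see why backscattering in a fiber can yield a convolution: if the input is encoded as a sequence of time bins and each short section of fiber reflects a small random fraction of the light, the backscattered field at the detector is the input convolved with the fiber's random backscatter impulse response, and a square-law photodetector adds a nonlinearity. The sketch below assumes a discretized, complex-Gaussian impulse response (a common idealization of Rayleigh backscatter), ignores loss, noise, polarization, and dispersion, and uses hypothetical function names; it is an illustration, not NRL's model.

```python
import numpy as np

def fiber_backscatter_kernel(n_taps, rng):
    """Hypothetical discretized backscatter impulse response:
    one independent complex Gaussian reflection coefficient per time bin."""
    return (rng.normal(size=n_taps) + 1j * rng.normal(size=n_taps)) / np.sqrt(2)

def detect(x, kernel):
    """Backscattered field = input time bins convolved with the kernel;
    square-law detection returns the intensity |field|^2."""
    field = np.convolve(x, kernel, mode="full")
    return np.abs(field) ** 2

rng = np.random.default_rng(1)
kernel = fiber_backscatter_kernel(n_taps=64, rng=rng)
x = rng.uniform(0.0, 1.0, size=256)  # input data, one value per time bin

out = detect(x, kernel)
print(out.shape)  # (319,): len(x) + n_taps - 1 output time bins
```

The convolution is equivalent to multiplying the input by a Toeplitz matrix built from the kernel, which is why the brief can describe the same operation in terms of matrix-vector multiplications.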

“The Navy payoff is implementing machine learning algorithms faster, reducing the delay before we arrive at an answer,” said Joseph Murray, Ph.D., a Research Physicist from the NRL Optical Sciences Division. “We are also exploring applying these algorithms directly on analog data without requiring intermediate digitization and storage, which could have a significant benefit when processing high-bandwidth data that is difficult to record and analyze in real time, such as high-resolution image data or RF data for electronic warfare applications.”

This research was performed by Brandon Redding, Joseph Murray and a team of research physicists for the Naval Research Laboratory (Washington, DC). For more information, download the Technical Support Package below. ADTTSP-06241

This Brief includes a Technical Support Package (TSP).

Fiber Optic Computing Using Distributed Feedback (reference ADTTSP-06241) is currently available for download from the TSP library.


Overview

The document discusses advancements in fiber-optic computing using distributed feedback, highlighting its potential to accelerate matrix-intensive computing tasks, particularly in machine learning. The authors emphasize the advantages of optical computing, such as superior energy efficiency and lower latency compared to traditional digital electronics.

Key to this approach is the use of optical fibers, where the number of kernel operations can be increased by using a longer fiber or a higher data encoding rate. The document presents a mathematical framework relating the fiber length, the encoding rate, and the number of operations that can be performed. For instance, it notes that a 5 km fiber with a 10 GHz encoding rate could process large-scale matrices, performing matrix-vector multiplications (MVMs) with millions of elements.
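As a rough worked example (our assumption, not the paper's stated formula): if the backscatter impulse response lasts one round trip through the fiber, 2·n_g·L/c, then the number of kernel taps is that duration multiplied by the encoding rate. With an assumed group index n_g ≈ 1.468 for standard single-mode fiber, a 5 km fiber at 10 GHz gives roughly 4.9 × 10^5 taps.

```python
SPEED_OF_LIGHT = 299_792_458.0  # m/s, in vacuum

def round_trip_time(fiber_length_m, group_index=1.468):
    """Time (s) for light to reach the far end of the fiber and return.
    group_index=1.468 is an assumed typical value for single-mode fiber."""
    return 2.0 * group_index * fiber_length_m / SPEED_OF_LIGHT

def kernel_taps(fiber_length_m, encoding_rate_hz, group_index=1.468):
    """Assumed number of time bins spanned by the backscatter impulse response."""
    return round_trip_time(fiber_length_m, group_index) * encoding_rate_hz

taps = kernel_taps(5_000.0, 10e9)
print(f"{taps:.2e} taps")  # about 4.9e5 for a 5 km fiber at 10 GHz
```

In this model, doubling either the fiber length or the encoding rate doubles the tap count, matching the scaling the brief describes.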

The authors also address the trade-offs of temporal multiplexing, which requires only a single modulator and detector but can slow computation because the input data are processed serially in time. The availability of high-speed optical devices helps mitigate this issue. The authors' analysis of the time required for MVM computations shows that increasing the encoding rate can significantly reduce processing time.
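Because temporal multiplexing streams the input through one modulator one sample at a time, a simple estimate of the time per operation is the streaming time plus the fiber round-trip latency needed to collect the full backscatter tail. The model and parameter values below are illustrative assumptions (including the hypothetical one-million-sample input), not the authors' analysis; they only show why a higher encoding rate shortens the serial part of the computation.

```python
SPEED_OF_LIGHT = 299_792_458.0  # m/s, in vacuum

def mvm_time(input_length, encoding_rate_hz, fiber_length_m, group_index=1.468):
    """Assumed time (s) to stream `input_length` samples through a single
    modulator and then collect the full backscatter tail from the fiber."""
    streaming = input_length / encoding_rate_hz
    tail = 2.0 * group_index * fiber_length_m / SPEED_OF_LIGHT
    return streaming + tail

for rate in (1e9, 10e9, 40e9):
    t = mvm_time(input_length=1_000_000, encoding_rate_hz=rate, fiber_length_m=5_000.0)
    print(f"{rate / 1e9:>4.0f} GHz: {t * 1e6:8.1f} us")
```

In this toy model the streaming term shrinks as 1/rate while the fiber latency stays fixed, so at high encoding rates the round-trip time becomes the dominant cost.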

An experimental demonstration of non-linear principal component analysis (PCA) is presented, showcasing the application of this optical computing technique to dimensionality reduction and data analysis. The document explains how non-linear PCA overcomes a key limitation of traditional linear PCA, which captures only linear correlations in the data, making it better suited to analyzing complex datasets.
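One common way to make PCA non-linear is to apply ordinary PCA to non-linear features of the data instead of the raw data. The NumPy sketch below uses a fixed random projection followed by squared-magnitude detection as the feature map, loosely mirroring the optical setup; the feature map, dimensions, and toy two-circle data are all our assumptions, and the paper's procedure may differ.

```python
import numpy as np

def pca(data, n_components):
    """Linear PCA via SVD of the mean-centered data matrix."""
    centered = data - data.mean(axis=0)
    _, s, vt = np.linalg.svd(centered, full_matrices=False)
    explained_var = s ** 2 / (len(data) - 1)
    return centered @ vt[:n_components].T, explained_var[:n_components]

def nonlinear_pca(data, n_components, n_features, rng):
    """PCA applied to a fixed random non-linear feature map |data @ W|^2."""
    n_in = data.shape[1]
    w = rng.normal(size=(n_in, n_features)) + 1j * rng.normal(size=(n_in, n_features))
    features = np.abs(data @ w) ** 2
    return pca(features, n_components)

rng = np.random.default_rng(2)
# Hypothetical toy data: noisy points on two concentric circles.
angles = rng.uniform(0.0, 2.0 * np.pi, size=400)
radii = np.where(rng.random(400) < 0.5, 1.0, 2.0)
data = np.column_stack([radii * np.cos(angles), radii * np.sin(angles)])
data += 0.05 * rng.normal(size=data.shape)

scores, variances = nonlinear_pca(data, n_components=2, n_features=50, rng=rng)
print(scores.shape)  # (400, 2)
```

Because the squared-magnitude features are quadratic in the input, the components found here can express quantities such as the squared radius, which no single linear projection of the raw 2-D points can represent.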

The experimental setup used a narrow-linewidth laser and erbium-doped fiber amplifiers (EDFAs) to improve signal quality and minimize noise. The authors detail the methods used to record Rayleigh backscattered patterns and explain the importance of polarization diversity for capturing the data accurately.

In conclusion, the document illustrates the promising capabilities of fiber optic computing in performing complex computations efficiently. By leveraging the unique properties of optical fibers and advanced modulation techniques, this research paves the way for future developments in optical computing, particularly in applications requiring high-speed data processing and analysis. The findings suggest that optical computing could play a crucial role in the evolution of machine learning and other computationally intensive fields.
