Simulation 'Libraries' Will Support Testing of High-Level Vehicle Autonomy

The challenges of validating autonomous vehicles designed to operate at SAE Level 4 and 5 are a major focus of driving-simulation specialist rFpro. The advent of connected autonomous vehicles (CAVs) presents the auto industry with a broad new set of unknowns that will see automakers establish "libraries" of thousands of test scenarios, said the company’s technical director, Chris Hoyle.

He presents key questions that have to be answered: “How will we know that a CAV is safe to operate under all conditions? How can we ensure that testing is sufficiently comprehensive and rigorous, yet timely and cost-effective? Even before we reach the validation stage, is there a way to accelerate the development of autonomous vehicles without the risks associated with exposing them to public road users?”

Hoyle said he is routinely exposed to this debate and believes that simulation can provide greater scope and shorter timelines than physical testing—but that it must be applied correctly.

New simulation platform

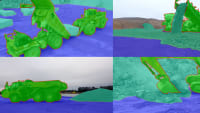

The company has launched what it claims to be the world’s first commercially available platform to train and develop autonomous vehicles in simulation and test their systems in “every scenario imaginable.” A key aspect of the platform, the company says, is the accuracy with which the simulation replicates the real world. Over a three-year program, the company has built a library of real roads using high-precision scanning technology (see previous article). Users have control of a wide range of variables, from weather to pedestrians. The technology has been adopted by two large OEMs, three autonomous-vehicle developers and a driverless motorsport series. For reasons of commercial confidentiality, rFpro is unable to give further details.

By using a cluster of computers 24/7, manufacturers can achieve millions of simulated miles of testing every month. Explains Hoyle: “Human drivers average one fatality in 100 million miles driven (source: www-fars.nhtsa.dot.gov/Main/index.aspx), but we cannot realistically attempt to accumulate this sort of mileage with a CAV before declaring a test to be complete. The reason a human driver scores so well is because much of the distance is uneventful; by eliminating this ‘dead’ mileage and subjecting the CAV, via simulation, to a ‘once-in-a-1000-year’ event every few seconds, we can massively compress the timescale. Vehicle manufacturers will build libraries of thousands of simulated test scenarios, which autonomous models will have to successfully pass before they will be considered ready for validation.”
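A rough calculation shows why brute-force mileage is impractical. The sketch below is not rFpro's method: it assumes failures arrive as a Poisson process and asks how many failure-free miles would be needed before the upper confidence bound on the failure rate drops below the human figure quoted above. The function names and the 5-million-miles-per-month cluster throughput are illustrative assumptions.

```python
import math

# Human benchmark quoted in the article: one fatality per 100 million miles.
HUMAN_FATALITY_RATE = 1 / 100_000_000  # fatalities per mile


def failure_free_miles_needed(target_rate, confidence=0.95):
    """Miles that must be driven with zero failures so that the one-sided
    upper confidence bound on the failure rate falls below target_rate.

    Assumes failures follow a Poisson process (an assumption of this sketch):
    P(zero failures in n miles) = exp(-rate * n) <= 1 - confidence.
    """
    return -math.log(1 - confidence) / target_rate


def months_required(miles, miles_per_month):
    """Calendar months needed to accumulate the given mileage."""
    return miles / miles_per_month


miles = failure_free_miles_needed(HUMAN_FATALITY_RATE)
# Hypothetical cluster throughput; the article says only "millions" per month.
months = months_required(miles, miles_per_month=5_000_000)
print(f"{miles:,.0f} failure-free miles, about {months:.0f} months")
```

Under these assumptions the requirement is roughly 300 million failure-free miles, three times the benchmark distance itself, which is why concentrating the testing on rare, demanding events matters more than raw mileage.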

Any failed experiment typically results in several more standard tests being added to the library of simulated scenarios, each of which must then be passed consistently.

Hoyle said the libraries of tests, run continuously using a regression process, would ensure that any new developments to an autonomous model do not break existing functionality. “To enable this, rFpro not only scales across a cluster of machines to run multiple experiments in parallel, but it also allows each experiment to scale across multiple CPUs and GPUs to cope with the complexity of autonomous models fed by multiple camera, LiDAR and radar sensors.”
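The regression idea can be illustrated with a minimal sketch. It does not use rFpro's API; `Scenario`, `run_scenario` and `run_regression` are hypothetical names, the "model" is just a function returning a braking deceleration, and a thread pool stands in for the cluster of machines described above.

```python
from concurrent.futures import ThreadPoolExecutor
from dataclasses import dataclass


@dataclass(frozen=True)
class Scenario:
    """One entry in the scenario library (fields are illustrative)."""
    name: str
    obstacle_distance_m: float  # distance to a stationary obstacle
    speed_mps: float            # vehicle speed when the obstacle appears


def run_scenario(model, scenario):
    """Return True if the model stops the vehicle short of the obstacle."""
    decel = model(scenario)  # braking deceleration in m/s^2 chosen by the model
    if decel <= 0:
        return False
    stopping_distance = scenario.speed_mps ** 2 / (2 * decel)
    return stopping_distance < scenario.obstacle_distance_m


def run_regression(model, library, max_workers=4):
    """Run every scenario in parallel and return the names of those that failed."""
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        results = list(pool.map(lambda s: run_scenario(model, s), library))
    return [s.name for s, passed in zip(library, results) if not passed]


library = [
    Scenario("pedestrian_far", obstacle_distance_m=50.0, speed_mps=20.0),
    Scenario("pedestrian_near", obstacle_distance_m=20.0, speed_mps=20.0),
]
print(run_regression(lambda s: 6.0, library))  # a model that always brakes at 6 m/s^2
```

Re-running the whole library after every change to the model is what catches a new development that breaks behavior which previously passed.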

Standardizing simulation

Testing so intensively will take time to achieve required results. But Hoyle anticipates that over the next five years, the rate at which new test scenarios are identified will fall to the point at which it can be statistically proven to be below the error rate for human drivers. At that stage, the physical validation and verification process could begin.

He expects, perhaps hopefully, that the auto industry will develop a global standardized library of test scenarios. Once a model has passed them, it would move forward to the next stage, in which a statistical sample of those tests is selected and expanded for physical testing in the real world.

That raises another question: how to build a library of tests that is sufficiently comprehensive and rigorous. Ironically, Hoyle stated that humans are very good at testing autonomous vehicles: “Humans are random, unpredictable, never the same twice; we make mistakes and our performance changes with mood and fatigue level.” At present, up to 50 human drivers can be added to a single simulated experiment. They pilot vehicles alongside the autonomous model under test in densely populated simulated urban environments, surrounded by other road users and pedestrians, without any risk of death or injury.

By late this year, rFpro anticipates this will be scaled up to 250 human test drivers entering a single experiment, shared with one or more CAVs.

Efficient development of artificial-intelligence (AI) systems requires the ability to learn from failures and improve functionality before re-testing, stressed Hoyle. Edge cases (where one parameter exceeds system limits) and corner cases (where a combination of two or more parameters exceeds system limits) will be encountered frequently and fed back into the system, increasing its knowledge base.
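The distinction between edge and corner cases, as defined above, can be expressed in a few lines. This is a sketch only: `classify_case`, the parameter names and the limit values are hypothetical and not taken from any rFpro tooling.

```python
def classify_case(params, limits):
    """Classify a scenario by how many parameters fall outside system limits.

    params: mapping of parameter name to its value in the scenario.
    limits: mapping of parameter name to an inclusive (low, high) range.
    Returns "nominal" (none exceeded), "edge" (exactly one) or "corner" (two or more).
    """
    exceeded = [
        name for name, value in params.items()
        if not (limits[name][0] <= value <= limits[name][1])
    ]
    if not exceeded:
        return "nominal"
    return "edge" if len(exceeded) == 1 else "corner"


# Illustrative operating limits; the values are assumptions.
LIMITS = {"rain_mm_per_h": (0, 25), "speed_kph": (0, 130), "fog_visibility_m": (50, 10_000)}

print(classify_case({"rain_mm_per_h": 40, "speed_kph": 90, "fog_visibility_m": 200}, LIMITS))
print(classify_case({"rain_mm_per_h": 40, "speed_kph": 90, "fog_visibility_m": 30}, LIMITS))
```

The first scenario exceeds only the rain limit and is an edge case; the second also falls below the visibility limit, making it a corner case.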

Training datasets can be established to demonstrate correct behavior for failed experiments, each comprising all the data that is fed to sensor models for virtual cameras, LiDAR and radar. Every frame of training data is associated with “ground-truth” data, comprising semantic segmentation, instance segmentation, optical flow, depth and labelled object data: “In that way, through supervised learning, the models improve and adapt to each new failure mode,” Hoyle said.
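One way to picture a frame of training data paired with its ground truth is as a simple record. The layout below is an assumption made for illustration; the class and field names are hypothetical and do not describe rFpro's actual data format.

```python
from dataclasses import dataclass, field


@dataclass
class LabelledObject:
    label: str    # e.g. "pedestrian"
    bbox: tuple   # (x_min, y_min, x_max, y_max) in pixels


@dataclass
class GroundTruth:
    semantic_segmentation: list  # class id per pixel (rows x cols)
    instance_segmentation: list  # instance id per pixel
    optical_flow: list           # (dx, dy) per pixel
    depth: list                  # distance in metres per pixel
    labelled_objects: list = field(default_factory=list)


@dataclass
class TrainingFrame:
    camera_image: list   # pixel values (rows x cols)
    lidar_points: list   # (x, y, z) points
    radar_returns: list  # (range_m, azimuth_rad, velocity_mps) tuples
    ground_truth: GroundTruth


def grid_shape(grid):
    """Return (rows, cols) of a nested-list grid."""
    return (len(grid), len(grid[0]) if grid else 0)


def is_consistent(frame):
    """Check that every per-pixel ground-truth map matches the camera image size."""
    target = grid_shape(frame.camera_image)
    gt = frame.ground_truth
    maps = (gt.semantic_segmentation, gt.instance_segmentation, gt.optical_flow, gt.depth)
    return all(grid_shape(m) == target for m in maps)
```

Keeping the per-pixel labels aligned with the sensor frame is what allows a supervised learner to compare its output against the known correct answer for each failure case.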

But through all this, a vital factor must always be remembered and appreciated, he added: “Humans are the best source of unbiased inputs because they never drive in an identical manner, even when repeating a journey on the same road in the same weather conditions. Also, they can identify behavior which is unusual, irritating or unexpected and likely to promote adverse reaction from other road users!”
