A Comparative Evaluation of the Detection and Tracking Capability Between Novel Event-Based and Conventional Frame-Based Sensors

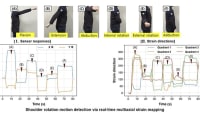

This research establishes a fundamental understanding of the characteristics of event-based sensors and applies it to the development of a detection and tracking algorithm.

The detection and tracking of moving objects in free space is an area of computer vision that has benefited greatly from years of research and development. Many different algorithms are available, with new and improved revisions developed on a regular basis. Given this maturity, it is not surprising that such algorithms provide very effective solutions in a wide range of applications. There are, however, select scenarios in which traditional detection and tracking algorithms break down. In many cases the breakdown is attributable not to the algorithm, but to the fundamental operation of a frame-based sensor.

Despite extensive research, traditional frame-based algorithms remain tied to predefined frame rates, which introduce image artifacts such as motion blur, and to inherent sensor limitations such as low dynamic range, restricted speed and the need to process large data files that are often filled with redundant data.

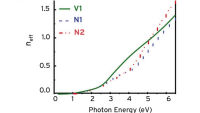

Event-based sensors, also known as silicon retinas or neuromorphic sensors, are revolutionary optical sensors that operate fundamentally differently from traditional frame-based sensors and offer the potential of a novel solution to these challenges. Inspired by the functionality of a biological retina, these sensors are driven by changes in light intensity and not by artificial frame rates and control signals. Within these sensors each pixel behaves both asynchronously and independently, enabling events to be generated with microsecond resolution in response to localized optical changes as they occur.
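The per-pixel behavior described above can be illustrated with a minimal sketch. It assumes a commonly used idealized model in which a pixel emits an event each time its log intensity moves a fixed threshold away from a stored reference level; the brief itself does not specify this model, and the names `Event`, `pixel_events` and `threshold` are hypothetical, chosen only for illustration.

```python
import math
from typing import List, NamedTuple, Sequence, Tuple


class Event(NamedTuple):
    t_us: int      # timestamp in microseconds
    x: int         # pixel column
    y: int         # pixel row
    polarity: int  # +1 = brighter, -1 = darker


def pixel_events(samples: Sequence[Tuple[int, float]], x: int, y: int,
                 threshold: float = 0.2) -> List[Event]:
    """Generate events for ONE pixel from (t_us, intensity) samples.

    Assumed model (not taken from the brief): an event fires whenever the
    log intensity differs from the pixel's reference level by at least
    `threshold`; the reference then steps toward the new level.
    Intensities must be positive.
    """
    events: List[Event] = []
    _, first_intensity = samples[0]
    reference = math.log(first_intensity)
    for t_us, intensity in samples[1:]:
        delta = math.log(intensity) - reference
        # A large change can cross the threshold several times.
        while abs(delta) >= threshold:
            polarity = 1 if delta > 0 else -1
            events.append(Event(t_us, x, y, polarity))
            reference += polarity * threshold
            delta = math.log(intensity) - reference
    return events


if __name__ == "__main__":
    # Constant light produces no events; a doubling then a sharp drop does.
    trace = [(0, 100.0), (10, 100.0), (20, 200.0), (30, 200.0), (40, 50.0)]
    for event in pixel_events(trace, x=3, y=4, threshold=0.5):
        print(event)
```

Because each pixel keeps its own reference level, a static scene generates no output at all in this model, which is one way to picture the reduction in redundant data relative to full frames.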

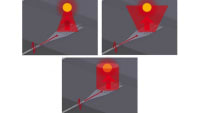

As noted, event-based sensors specifically aim to mimic the biological retina and subsequent vision processing of the brain. While the retina is the photosensitive tissue of the eye, for it to work properly the entire eyeball is needed. Figure (a) shows a schematic cross-section of a typical eye. Light entering the eye passes through the cornea and into the first of two humors. The aqueous humor is a clear mass that connects the cornea with the lens, helping to maintain the shape of the cornea. Between the aqueous humor and lens lies the iris, a colored ring of muscle fibers. The iris forms an adjustable aperture, called the pupil, which is actively adjusted to ensure a relatively constant amount of light enters the eye at all times.

While the cornea has a fixed curvature, the shape of the lens can be actively molded to adjust the eye’s focal length as needed. Behind the lens is the vitreous humor, which again helps to maintain the shape of the eye. Light entering the pupil then forms an inverted image on the retina. The retina contains the photosensitive rods and cones and is a relatively smooth, curved layer with two distinct points: the fovea and the optic disc. Densely populated with cone cells, the fovea is positioned directly opposite the lens and is largely responsible for color vision. The optic disc, a blind spot in the eye, is where the axons of the ganglion cells leave the eye to form the optic nerve.

From the photoreceptors, neural responses pass through a series of linking cells, called bipolar, horizontal and amacrine cells (see Figure (b)). These cells combine and compare the responses from individual photoreceptors before transmitting the signals to the retinal ganglion cells. The linkage between neighboring cells provides a mechanism for spatial and temporal filtering, facilitating relative, rather than absolute, judgment of intensity and emphasizing edges and temporal changes in the visual field of view. It is this network of photoreceptors, horizontal cells, bipolar cells, amacrine cells and ganglion cells that discriminates between useful information to be passed to the brain and redundant information that is best discarded immediately.

This work was done by James P. Boettiger of the RAAF for the Air Force Institute of Technology. For more information, download the Technical Support Package below. AFRL-0299

This Brief includes a Technical Support Package (TSP).

A Comparative Evaluation of the Detection and Tracking Capability Between Novel Event-Based and Conventional Frame-Based Sensors (reference AFRL-0299) is currently available for download from the TSP library.


Overview

The document is a Master's thesis by Flight Lieutenant James P. Boettiger, conducted at the Air Force Institute of Technology, focusing on the comparative evaluation of detection and tracking capabilities between novel event-based sensors and conventional frame-based sensors. The research spans from September 2018 to March 2020 and aims to address the limitations of traditional frame-based technology, which suffers from issues such as motion blur, low dynamic range, speed limitations, and high data storage requirements.

Event-based sensors operate differently from frame-based sensors; they respond asynchronously to changes in light intensity, allowing for unique advantages such as low power consumption, reduced bandwidth requirements, high temporal resolution, and high dynamic range. This asynchronous operation enables event-based sensors to capture rapid movements without the blurring that often affects frame-based systems.

The thesis presents a comparative assessment of object detection and tracking capabilities using both sensor types. A basic frame-based algorithm was developed and tested against two different event-based algorithms. The first approach involved parsing event-based pseudo-frames through standard frame-based algorithms, while the second constructed target tracks directly from filtered events. The findings indicate that while effective detection and tracking were demonstrated, the research did not identify an ideal event-based solution to completely replace existing frame-based technology. However, it highlighted the significant potential and value in further exploring event-based sensor technology.
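As a rough illustration of the first event-based approach, the sketch below bins a time-sorted event stream into fixed time windows and counts events per pixel, producing pseudo-frames that a conventional frame-based detector could then consume. This is an assumed construction rather than the thesis's actual method: the function name `events_to_pseudo_frames`, the `(t_us, x, y, polarity)` tuple layout and the fixed `window_us` parameter are all hypothetical.

```python
from typing import List, Sequence, Tuple

# Hypothetical event layout: (timestamp in microseconds, x, y, polarity).
Event = Tuple[int, int, int, int]
Frame = List[List[int]]


def events_to_pseudo_frames(events: Sequence[Event], width: int, height: int,
                            window_us: int) -> List[Frame]:
    """Accumulate time-sorted events into per-pixel event-count frames.

    Each pseudo-frame covers `window_us` microseconds, starting at the
    timestamp of the first event. Windows with no events yield all-zero
    frames, so frame index maps directly to elapsed time.
    """
    frames: List[Frame] = []
    if not events:
        return frames

    def blank() -> Frame:
        return [[0] * width for _ in range(height)]

    window_start = events[0][0]
    frame = blank()
    for t_us, x, y, _polarity in events:
        # Close every window that ended before this event arrived.
        while t_us >= window_start + window_us:
            frames.append(frame)
            frame = blank()
            window_start += window_us
        frame[y][x] += 1  # polarity is ignored; only activity is counted
    frames.append(frame)
    return frames


if __name__ == "__main__":
    stream = [(0, 0, 0, 1), (500, 1, 0, -1), (1200, 1, 1, 1), (3100, 0, 1, 1)]
    for index, frame in enumerate(events_to_pseudo_frames(stream, 2, 2, 1000)):
        print(index, frame)
```

The choice of window length trades temporal resolution against how many events each pseudo-frame contains. The second approach described above avoids this binning step by building tracks directly from filtered events.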

The document also includes sections on the background and literature review, discussing biological inspirations for sensor design, the types of event-based sensors, and the advantages of event-based over frame-based sensors. The research underscores the importance of continued investigation into event-based technology, suggesting that its disruptive nature could lead to substantial advancements in sensor capabilities.

Overall, the thesis contributes to the understanding of how event-based sensors can enhance detection and tracking in various applications, particularly in defense contexts, and encourages further research to fully realize their potential. The distribution statement indicates that the document is approved for public release, ensuring that the findings can be shared with a broader audience interested in sensor technology advancements.
