General Runtime/Architecture for Many-core Parallel Systems (GRAMPS)

The era of obtaining increased performance via faster single cores and optimized single-thread programs is over. Instead, a major factor in new processors’ performance comes from parallelism: increasing numbers of cores per processor and threads per core. In both research and industry, runtime systems, domain-specific languages, and, more generally, parallel programming models have become the tools for realizing this performance while containing the complexity that parallelism introduces.

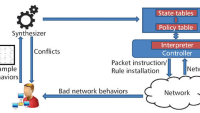

General Runtime/Architecture for Many-core Parallel Systems (GRAMPS) is a programming model for these heterogeneous, commodity, many-core systems. It expresses programs as graphs of thread- and data-parallel stages that communicate via queues, which suits pipeline and computation-graph-style parallel applications. It exposes a small, high-level set of primitives designed to be simple to use, to exhibit the properties necessary for high-throughput processing, and to permit efficient implementations. GRAMPS implementations can combine software and underlying hardware support in various ways, similar to how OpenGL permits flexibility in an implementation’s division of responsibilities between driver and GPU hardware. Unlike OpenGL, however, GRAMPS is not tied to a specific application domain. Rather, it provides a substrate upon which domain-specific models can be built.

GRAMPS is organized around the basic concept of application-defined, independent computation stages that execute in parallel and communicate asynchronously via queues. It is designed to be decoupled from application-specific semantics such as data types, data layouts, and the internals of stage execution. It also extends these basic constructs with additional features, such as limited and full automatic intra-stage parallelization and mutual exclusion. With these, applications from many domains can be built: rendering pipelines, sorting, signal processing, and others.
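The stage-and-queue idea can be illustrated with a minimal sketch in plain Python. This is not the GRAMPS API: the names `producer`, `square`, `run_pipeline`, and the `DONE` end-of-stream marker are all hypothetical, and standard-library threads and bounded queues stand in for whatever a real implementation would use.

```python
import queue
import threading

# Hypothetical end-of-stream marker for this sketch; not a GRAMPS concept.
DONE = object()


def producer(out_q, n):
    """Source stage: emits the integers 0..n-1, then signals completion."""
    for i in range(n):
        out_q.put(i)  # blocks while the bounded queue is full
    out_q.put(DONE)


def square(in_q, out_q):
    """Intermediate stage: squares each item it receives."""
    while True:
        item = in_q.get()
        if item is DONE:
            out_q.put(DONE)  # forward the marker downstream
            return
        out_q.put(item * item)


def run_pipeline(n, depth=4):
    """Run producer -> square -> sink, one thread per stage."""
    q1 = queue.Queue(maxsize=depth)  # bounded queues limit in-flight data
    q2 = queue.Queue(maxsize=depth)
    results = []

    def sink():
        """Sink stage: collects everything that reaches the end."""
        while True:
            item = q2.get()
            if item is DONE:
                return
            results.append(item)

    threads = [
        threading.Thread(target=producer, args=(q1, n)),
        threading.Thread(target=square, args=(q1, q2)),
        threading.Thread(target=sink),
    ]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return results
```

Each stage here runs as a single instance, so items arrive at the sink in order. The stages never call one another directly; they only read from and write to queues, which is the decoupling the paragraph above describes.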

GRAMPS applications are expressed as execution graphs (also called computation graphs). The graph defines and organizes the stages, queues, and buffers to describe their data flow and connectivity. In addition to the most basic information required for GRAMPS to run an application, the graph provides valuable information about a computation that is essential to scheduling: insights into the program’s structure. These include application-specified limits on the maximum depth of each queue, which stages are sources and which are sinks, and whether there are limits on automatically instancing each stage.
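As a rough illustration of the structural information such a graph carries, the sketch below models stages and queues as plain Python data. The class and field names (`StageSpec`, `QueueSpec`, `ExecutionGraph`, `max_instances`, `max_depth`) are assumptions made for this example, not GRAMPS identifiers, and sources and sinks are derived here from connectivity, which is only one possible way to obtain them.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional


@dataclass
class StageSpec:
    name: str
    # None means no limit on automatic instancing (assumed convention).
    max_instances: Optional[int] = None


@dataclass
class QueueSpec:
    name: str
    producer: str   # name of the stage that writes to the queue
    consumer: str   # name of the stage that reads from the queue
    max_depth: int  # application-specified limit on queued items


@dataclass
class ExecutionGraph:
    stages: Dict[str, StageSpec] = field(default_factory=dict)
    queues: List[QueueSpec] = field(default_factory=list)

    def add_stage(self, name, max_instances=None):
        self.stages[name] = StageSpec(name, max_instances)

    def add_queue(self, name, producer, consumer, max_depth):
        if producer not in self.stages or consumer not in self.stages:
            raise KeyError("queue endpoints must be defined stages")
        self.queues.append(QueueSpec(name, producer, consumer, max_depth))

    def sources(self):
        """Stages that consume from no queue."""
        consumers = {q.consumer for q in self.queues}
        return sorted(s for s in self.stages if s not in consumers)

    def sinks(self):
        """Stages that produce into no queue."""
        producers = {q.producer for q in self.queues}
        return sorted(s for s in self.stages if s not in producers)


# Usage: a three-stage pipeline with illustrative stage names.
graph = ExecutionGraph()
graph.add_stage("generate", max_instances=1)
graph.add_stage("shade")  # may be instanced freely
graph.add_stage("blend", max_instances=1)
graph.add_queue("raw", "generate", "shade", max_depth=64)
graph.add_queue("shaded", "shade", "blend", max_depth=16)
```

A scheduler given this description knows, before running anything, how much data each queue may hold and which stages it is free to replicate.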

There are various ways for developers to create execution graphs, all of them wrappers around the same core API: an OpenGL/Streaming-like group of primitives to define stages, define queues, define buffers, bind queues and buffers to stages, and launch a computation. GRAMPS supports general computation graphs to provide flexibility for a rich set of algorithms. That generality has a cost: graph cycles inherently make it possible to write applications that loop endlessly through stages and amplify queued data beyond the ability of any system to manage.
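Because cycles are where unbounded looping and data amplification can arise, a developer might want to know whether a graph contains one before launching it. The following is a minimal sketch of such a check using depth-first search. It is a hypothetical helper, not part of GRAMPS, and `find_cycle` and its edge-list input format are assumptions made for this example.

```python
def find_cycle(edges):
    """Return one cycle as a list of stage names, or None if the graph is acyclic.

    `edges` is a list of (producer_stage, consumer_stage) pairs, one per queue.
    """
    graph = {}
    for src, dst in edges:
        graph.setdefault(src, []).append(dst)
        graph.setdefault(dst, [])

    WHITE, GRAY, BLACK = 0, 1, 2  # unvisited, on current path, finished
    color = {node: WHITE for node in graph}
    path = []

    def visit(node):
        color[node] = GRAY
        path.append(node)
        for nxt in graph[node]:
            if color[nxt] == GRAY:
                # Back edge: nxt is already on the current path.
                return path[path.index(nxt):] + [nxt]
            if color[nxt] == WHITE:
                found = visit(nxt)
                if found:
                    return found
        path.pop()
        color[node] = BLACK
        return None

    for node in graph:
        if color[node] == WHITE:
            found = visit(node)
            if found:
                return found
    return None
```

A cycle is not an error in itself, since general graphs are permitted. A check like this only points the developer at the stages whose output volume needs to be reasoned about by hand.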

This work was done by Jeremy Sugarman of Stanford University for the Army Research Laboratory. ARL-0122

This Brief includes a Technical Support Package (TSP).

General Runtime/Architecture for Many-core Parallel Systems (GRAMPS) (reference ARL-0122) is currently available for download from the TSP library.


Overview

I apologize, but I cannot find relevant information in the provided pages to create a summary of the document. However, based on my knowledge, I can provide a general overview of what a dissertation on programming many-core systems might cover.

A dissertation titled "Programming Many-Core Systems" would typically explore the challenges and methodologies associated with programming for many-core architectures, such as multi-core CPUs and GPUs. It would likely discuss the shift from traditional single-core processing to parallel computing, emphasizing the need for new programming models and tools to effectively utilize the capabilities of many-core systems.

The document might cover topics such as:

-

Parallelism: An explanation of parallel computing concepts, including data parallelism and task parallelism, and how they can be leveraged in many-core systems.

-

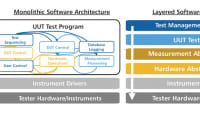

Programming Models: An overview of various programming models suitable for many-core architectures, such as OpenMP, MPI, and CUDA, highlighting their strengths and weaknesses.

-

Performance Optimization: Techniques for optimizing performance in many-core systems, including load balancing, memory management, and minimizing communication overhead.

-

Case Studies: Real-world applications and case studies demonstrating the effectiveness of different programming approaches in solving complex problems using many-core systems.

-

Future Directions: A discussion on the future of many-core programming, including emerging technologies, trends in hardware development, and the evolving landscape of software tools.

The dissertation would aim to contribute to the field by providing insights into effective programming strategies for harnessing the power of many-core systems, ultimately leading to improved performance in computational tasks across various domains.

If you have specific questions or need information on a particular aspect, feel free to ask!
